The ongoing attack against the OpenPGP infrastructure is a disaster, according to the affected people who maintain the protocol. Robert J. Hansen, who disclosed the incident, has literally called the attacker a ‘son of a bitch’: public certificates are being vandalized by abusing two features that have turned into serious problems.

A little background

On the peer-to-peer public certificate networks, where anyone can look up someone else's PGP public key, nothing is ever deleted. This was a deliberate design decision made in the 90s, so that the network could withstand attacks from governments wishing to censor it. Remember that the whole free encryption movement was born as an expression of ‘rebelliousness’, precisely in response to attempts by the highest circles of power to control cryptography.

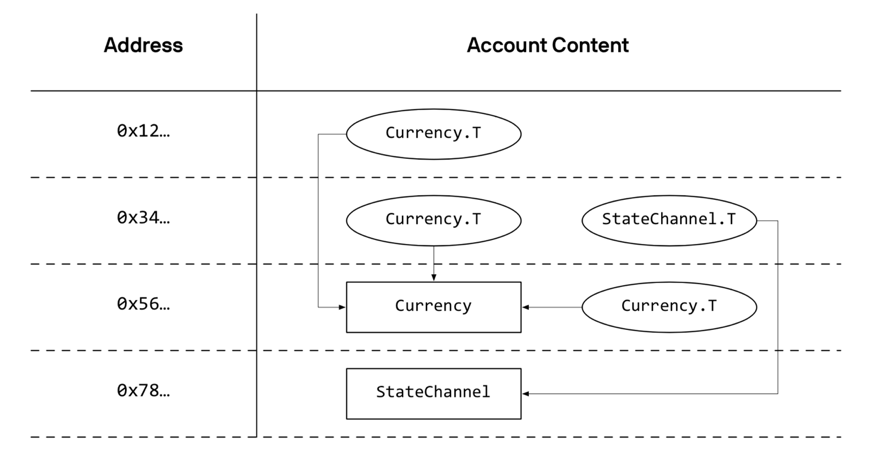

Since there is no centralized certification authority, PGP relies on something much more horizontal: it is the users themselves who sign a certificate to attest that it belongs to the person it claims to identify. Anyone can sign a public certificate. In the 90s, people met in person to swap floppy disks with their public keys so that others could sign them, whether they knew each other or not. The spread of the Internet brought along a network of servers hosting public keys, where you can find a key and, if appropriate, sign it to attest that it belongs to the individual in question. Anyone can sign a certificate an unlimited number of times, and every signature attaches a given number of bytes to it. Forever.
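To make the model concrete, here is a minimal toy sketch of a certificate as the paragraph above describes it: a key, a user ID and an ever-growing list of signatures. The classes `Signature` and `Certificate` and the 300-byte packet size are hypothetical simplifications of our own, not the real OpenPGP packet format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Signature:
    signer_key_id: str  # who vouches that the key belongs to the user ID
    packet: bytes       # placeholder for the real OpenPGP signature packet


@dataclass
class Certificate:
    key_id: str
    user_id: str
    signatures: list[Signature] = field(default_factory=list)

    def sign(self, signer_key_id: str, packet_size: int = 300) -> None:
        # No limit and no uniqueness check: anyone may append, any number of times.
        self.signatures.append(Signature(signer_key_id, bytes(packet_size)))

    def size(self) -> int:
        # Only signature bytes are counted; key material and user IDs are ignored.
        return sum(len(sig.packet) for sig in self.signatures)

    def signed_by(self, signer_key_id: str) -> bool:
        # Web of trust: has someone I already trust vouched for this certificate?
        return any(sig.signer_key_id == signer_key_id for sig in self.signatures)


cert = Certificate("0xALICE", "Alice <alice@example.org>")
cert.sign("0xBOB")    # Bob met Alice at a key-signing party
cert.sign("0xCAROL")  # Carol never met her, but signs anyway
cert.sign("0xCAROL")  # and nothing prevents her from signing again
print(len(cert.signatures), cert.size(), cert.signed_by("0xBOB"))  # 3 900 True
```

Note that `sign` only ever appends: in this model, as on the real servers, there is no operation that takes a signature away.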

The attack

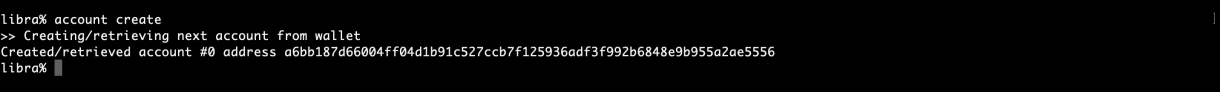

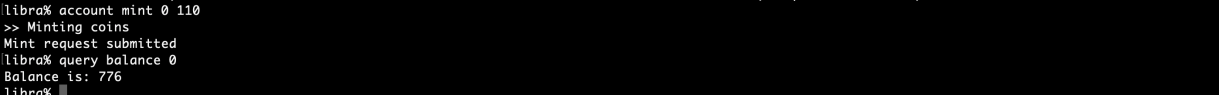

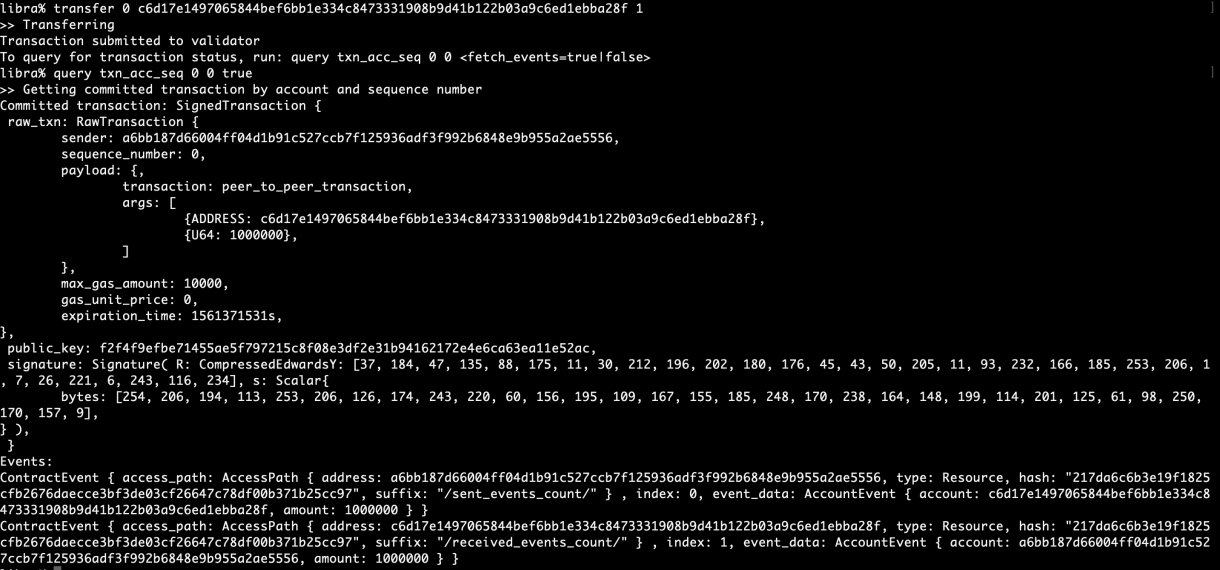

The attack consists of signing public certificates thousands of times (up to 150,000 signatures per hour) and uploading them to the peer-to-peer certificate networks, from which they will never be deleted. Soon afterwards, legitimate certificates grow to several tens of megabytes. The signatures carry no meaningful data, as the images show. So far, the attack has targeted two prominent members of the OpenPGP community: Robert J. Hansen and Daniel Kahn Gillmor.

The problem is that, under these circumstances, Enigmail and other OpenPGP implementations simply stop working or take a very long time to process such oversized certificates (a certificate of several tens of megabytes can take tens of minutes to process). For example, a certificate with 55,000 signatures weighs 17 megabytes. In practice, this renders the keyring unusable. Anyone who tries to verify Daniel's or Robert's signatures will break their installation when importing their certificates.
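A quick back-of-the-envelope calculation with the figures quoted in the article shows how cheap the flood is. We assume 1 MB = 10^6 bytes, ignore the size of the key material and user IDs, and use the peak rate of 150,000 signatures per hour, so the results are rough orders of magnitude.

```python
# Figures quoted in the article.
SIGNATURES = 55_000             # signatures on one flooded certificate
CERT_SIZE_BYTES = 17 * 10**6    # "17 megabytes", read as decimal megabytes
ATTACK_RATE_PER_HOUR = 150_000  # peak signing rate reported for the attack

# Average size of one junk signature, in bytes.
bytes_per_signature = CERT_SIZE_BYTES / SIGNATURES

# Time needed to add that many signatures at the peak rate, in minutes.
minutes_to_flood = SIGNATURES * 60 / ATTACK_RATE_PER_HOUR

print(f"~{bytes_per_signature:.0f} bytes per signature")  # ~309 bytes per signature
print(f"~{minutes_to_flood:.0f} minutes to flood")         # ~22 minutes to flood
```

In other words, each signature costs the attacker only about 300 bytes, and a certificate large enough to break a keyring can be produced in well under half an hour.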

In short, the attack exploits two properties that are hard to address because they are features by design, which is why it amounts to pure vandalism:

- There is no limit on the number of signatures. Introducing one would create a problem of its own: an attacker could fill a certificate up to the limit with bogus signatures and thereby prevent anyone else from ever certifying it again.

- SKS servers replicate their content among themselves, and this is deliberate: it is meant to resist intervention by an agency. As a result, nothing that has been uploaded can ever be deleted.
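Both properties can be illustrated with a toy model. `ToyKeyServer` is a hypothetical class of our own: it replicates by naively copying everything, whereas the real SKS uses a dedicated set-reconciliation algorithm, but the outcome we want to show is the same, namely that syncing is a union of signature sets with no deletion. The second half of the sketch shows why a simple cap does not help.

```python
from __future__ import annotations

from typing import Optional


class ToyKeyServer:
    """Hypothetical append-only keyserver mapping key IDs to signature sets."""

    def __init__(self, max_sigs: Optional[int] = None) -> None:
        self.max_sigs = max_sigs  # None means "no limit"
        self.store: dict[str, set[str]] = {}

    def upload(self, key_id: str, sig: str) -> bool:
        sigs = self.store.setdefault(key_id, set())
        if self.max_sigs is not None and sig not in sigs and len(sigs) >= self.max_sigs:
            return False  # cap reached: reject, whoever the signer is
        sigs.add(sig)
        return True

    def sync_from(self, peer: ToyKeyServer) -> None:
        # Reconciliation as a plain set union: it can add data, never remove it.
        for key_id, sigs in peer.store.items():
            for sig in sigs:
                self.upload(key_id, sig)


# 1. Unlimited, replicated servers: a flood spreads and cannot be undone.
a, b = ToyKeyServer(), ToyKeyServer()
a.upload("0xVICTIM", "sig-from-friend")
for i in range(1_000):
    a.upload("0xVICTIM", f"spam-{i}")
b.sync_from(a)
del a.store["0xVICTIM"]  # an operator "deletes" the key locally...
a.sync_from(b)           # ...and the next sync brings it all back
print(len(a.store["0xVICTIM"]))  # 1001

# 2. A capped server: the attacker fills the cap first, locking out real signers.
capped = ToyKeyServer(max_sigs=100)
for i in range(100):
    capped.upload("0xVICTIM", f"spam-{i}")
accepted = capped.upload("0xVICTIM", "sig-from-friend")
print(accepted)  # False
```

Either way the attacker wins: without a cap the junk spreads everywhere and comes back after every deletion, and with a cap the junk takes every available slot.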

Why hasn't it been fixed?

The synchronization software, Synchronizing Key Server (SKS), is open source, but in practice it is unmaintained. It was created as the keystone of Yaron Minsky's Ph.D. thesis, and it is written in a programming language called OCaml. As strange as it may sound, hardly anyone knows how it works, so fixing it would require not only patching the code but also questioning the design itself.

Some workarounds are being suggested: not refreshing the affected keys, among other mitigations, or refreshing them from the keys.openpgp.org server, which imposes a number of restrictions that sidestep the problem at the cost of losing some functionality. In Hansen's own words, the current network is unlikely to be saved.
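The idea behind these mitigations can be sketched on the client side: refuse oversized certificates, or keep only the signatures a key made on itself and drop third-party certifications, which, as we understand it, is roughly what keys.openpgp.org does by not serving third-party signatures. This is a hypothetical sketch, not how GnuPG or keys.openpgp.org are implemented; `Sig`, `strip_third_party`, `safe_to_import` and the 1 MB limit are our own illustrative names and values.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_CERT_BYTES = 1_000_000  # hypothetical client-side size limit


@dataclass(frozen=True)
class Sig:
    issuer: str  # key ID of whoever made the signature
    size: int    # packet size in bytes (illustrative value)


def strip_third_party(key_id: str, sigs: list[Sig]) -> list[Sig]:
    """Keep only self-signatures, i.e. those issued by the key itself."""
    return [s for s in sigs if s.issuer == key_id]


def safe_to_import(sigs: list[Sig]) -> bool:
    """Refuse certificates whose signatures exceed the size limit."""
    return sum(s.size for s in sigs) <= MAX_CERT_BYTES


# A flooded certificate: one self-signature plus 55,000 junk certifications.
flooded = [Sig("0xVICTIM", 400)] + [Sig(f"0xSPAM{i}", 300) for i in range(55_000)]
print(safe_to_import(flooded))                # False
cleaned = strip_third_party("0xVICTIM", flooded)
print(len(cleaned), safe_to_import(cleaned))  # 1 True
```

The trade-off is visible in the code: `strip_third_party` also throws away legitimate signatures from friends, which is exactly the web-of-trust functionality that is lost.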

But the worst may be yet to come. Software packages in distribution repositories are usually signed with OpenPGP. What if attackers start targeting these certificates? Distribution updates could become extremely slow or stop working altogether, which would endanger the updating of systems that may be critical. It could also trigger a copycat effect among other attackers, since exploiting the flaw is relatively simple.

Conclusions?

It was already known that the network could be misused, but no one expected such wanton vandalism. The people affected say they cannot see any purpose in it other than destroying the altruistic work of those who try to make encryption an unrestricted right. A certain defeatism can be sensed in their messages, which show frustration, anger and some pessimism, with sentences such as “this is a disaster that could be foreseen”, “there is no solution”, etc. The closing lines are devastating:

But if you get hit by a bus while crossing the street, I’ll tell the driver everyone deserves a mulligan once in a while.

You fool. You absolute, unmitigated, unadulterated, complete and utter, fool.

Peace to everyone — including you, you son of a bitch.

(In golf jargon, a mulligan is a “second chance”.)

A number of points have caught our attention:

- The fact that the core code of the network is written in such a little-known language, and that it has hardly been maintained since it was written, precisely because it worked flawlessly.

- The fact that GnuPG (the OpenPGP implementation) or Enigmail (which, after all, uses GnuPG) cannot cope with certificates of several megabytes is surprising, to say the least. Their ability to handle databases is quite poor. It reminds us of what happened with OpenSSL after Heartbleed: dozens of defects started to turn up in the code, and the developers more or less admitted that they had not had the time to audit it properly. Projects such as LibreSSL were born in an attempt to build a more secure TLS implementation. Daniel Kahn Gillmor himself admits it: “As an engineering community, we failed”.

- This damages the image of PGP in general, which was not in very good health to begin with. This heavy blow endangers its reputation as a whole, not just that of its servers (which are merely the pretext for the attack).

It is an interesting issue for several reasons, and it raises a number of open questions: what will happen to OpenPGP in general, to the protocol itself, to its most common implementations, to the servers… And above all, what the attacker's plan is (or whether a copycat effect will trigger more attacks): whether they will move from personal certificates to the ones used to sign packages, and how distributions will react.