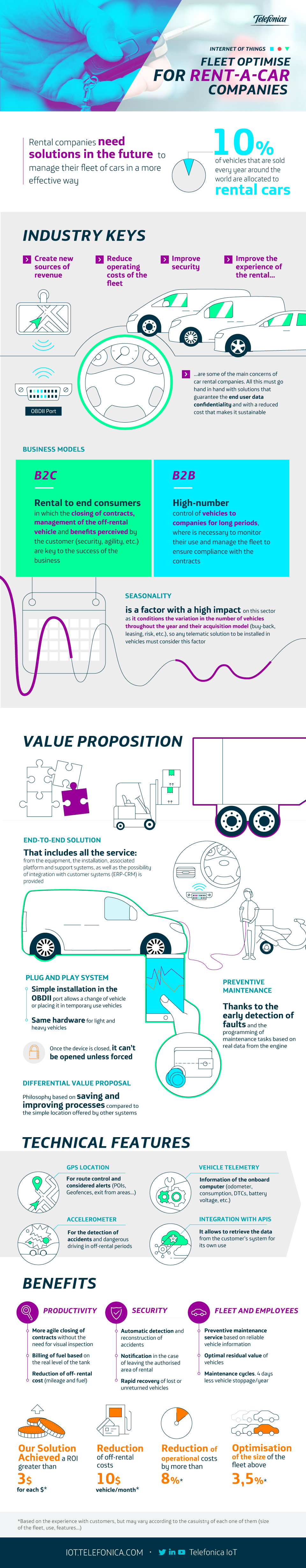

Fleet Optimize enables car rental companies to create new revenue streams, reduce the operating costs of their vehicle fleets, and improve safety and the customer experience.

The London AI Summit attracted thousands of visitors, far more than when AI was still a niche topic in the 1990s. As always with such big events, it was a mixture of really cool things, hyped promises, important issues, real use cases, fun apps, and some cool startups.

Among the cool stuff was an RPA (Robotic Process Automation) demo in which a bot was programmed in 15 minutes to do the following: a handwritten message was sent by email as a photo and transcribed to text (with no errors); the bot then opened a browser, pasted the text into it, clicked the tweet button and published the text on Twitter. All without any human intervention!

Hyped promises were made several times; one presentation joked that “if it is written in Python, it’s probably Machine Learning, if it is written in PowerPoint, it’s probably AI”.

One of the overpromises was that AI will make health care accessible and affordable for everybody on earth. While this is a great vision to work with, achieving it involves so many other things besides AI.

I was happy to see that there was a talk on data ethics and the ethics of AI algorithms. To align an organization’s values with its practice, having meaningful conversations is paramount, rather than ticking boxes on checklists.

An example of a real use case was using AI to evolve the customer relationship, and especially doing so in a scalable way across an organization, over multiple channels and business processes. The foundation is a common data format, which is turned into value by combining NLP, Machine Learning and RPA to improve the customer experience and increase efficiency. Another use case showed how AI can help pharmaceutical companies counter the declining ROI of their R&D.

A fun app was TasteFace, which uses facial recognition to estimate how much you (dis)like Marmite, a typically British breakfast spread.

A start-up with a purpose was Marhub, which aims to build a platform that supports refugees through the use of chatbots. Another social start-up was Access Earth, which aims to use crowdsourcing and AI to build a world map of the accessibility of public places for people with reduced mobility.

And London remains a “hip” place, not only for colored socks, but also for shiny shoes.

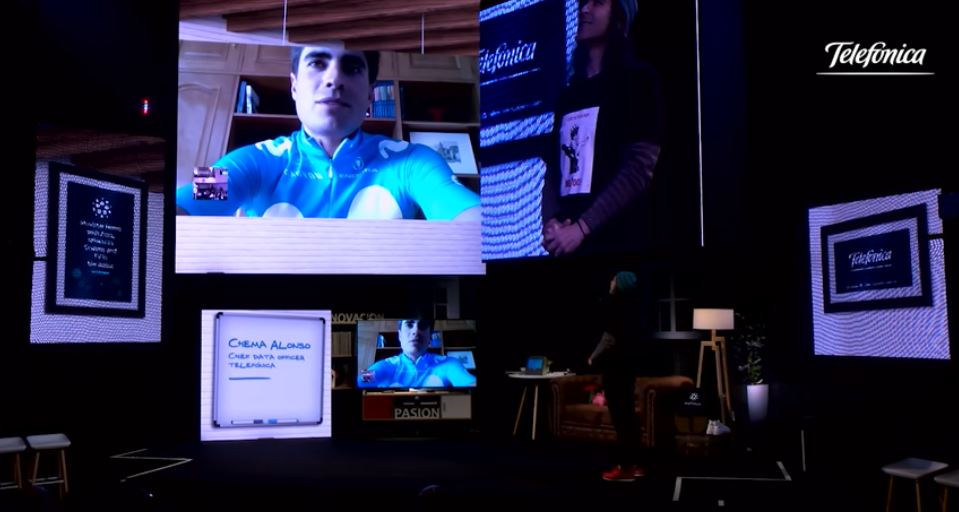

Last week Chema Alonso, Chief Data Officer of Telefónica, and Irene Gómez, Director of Telefónica Aura, gave a keynote speech about the company’s vision and ongoing projects on data & Artificial Intelligence at Telefónica’s Industry Analyst Day event held in Madrid.

“Telefónica’s transformation is already happening and is based on intelligent connectivity, new digital services and experiences built on top with Artificial Intelligence” was the opening message of the keynote.

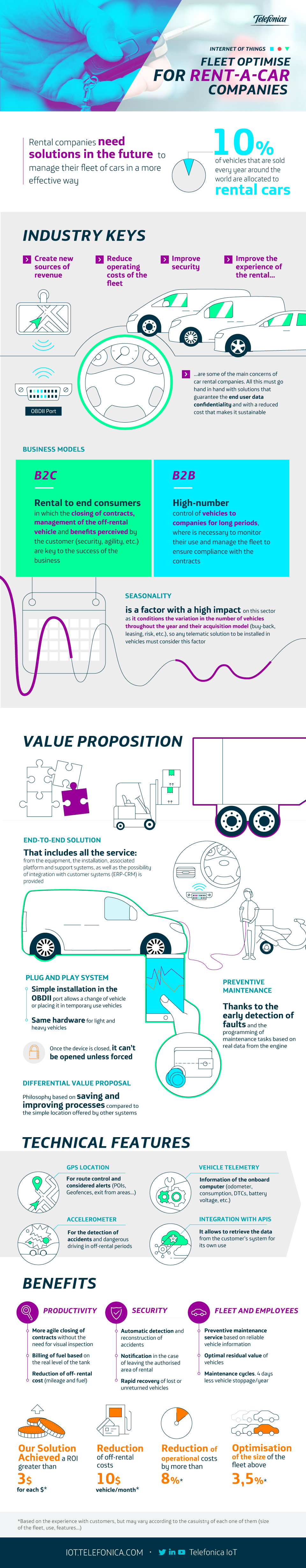

From the Fourth Platform to Home as a Computer (HaaC)

Irene gave a recap of the highlights from Mobile World Congress 2017 (MWC’17) up to this year’s event in Barcelona. She said that at MWC’17 the Company announced its four-platform data strategy, and that on top of the Fourth Platform it decided to build Aura, its AI.

“Aura is a new customer relationship model. You can speak with it in real time through natural language and voice interfaces. It can be experienced in different channels and brings personalization and customized experiences thanks to its adaptive learning.” stated Irene.

The executive also told the audience that at MWC’18 Aura was launched in six countries (Spain, Brazil, Argentina, Germany, UK and Chile) and that Movistar Home, Telefónica’s smart home device with Aura by design, was presented. Only 8 months later, Movistar Home was launched on the Spanish market.

Irene Gómez: “We embarked on a project that was our first step to bringing AI to our customers’ Home Ecosystem. A project that was part of a much more ambitious vision: Home as a Computer (HaaC).”

She also explained that the HaaC project, presented by Chema at the 2019 event some months earlier, will enable new AI-powered in-home experiences for Telefónica customers in Spain.

Telefónica’s Aura Director also discussed some Aura highlights, such as its availability in a new country, Ecuador, and its presence in more channels, such as WhatsApp and various websites, among others. Aura also has more use cases, skills and capabilities. According to her, this has helped monthly active users grow by 71% in the first four months of the year.

Home as a Computer (HaaC): AI-powered new in-home experiences

Chema then took over and said that the Movistar Home device is proving a great success, since 9 out of 10 customers in Spain would recommend it to friends & family. Furthermore, it is being updated with new features such as:

He also explained the HaaC strategy and why the home is becoming more important for the Company.

Chema Alonso: “Our mission is to deliver AI-powered new in-home experiences, to help us gain relevance in the lives of our customers. Home is a key part of our lives and Telefónica is already the number 1 provider of home technology in most of our markets. We’re bringing together the best connectivity, new home devices, voice assisted AI and best services to deliver new powerful digital experiences.”

To conclude, he gave some examples of the experiences Telefónica is going to deliver to its customers, opening a new ecosystem in which third parties can develop Movistar Living Apps. Attendees saw two videos of Movistar Living Apps: one from the airline Air Europa and the Smart Wi-Fi service created by Movistar.

If you want to know all about Aura, don’t miss the “Aura Story Book”, which describes the vision behind Aura, the process of creating it and the most important highlights of its first year of life.

There are many jobs in which protecting your head is vital to avoid serious accidents, but can you imagine being protected beyond the blows that a helmet prevents, and having all the information about your working environment in real time? There is no need to imagine it: it is already possible with Engidi’s connected helmet.

Work accidents are a serious issue that threatens the health of hundreds of thousands of workers around the world. In Spain, 652 people died in work accidents in 2018, while at European level an average of 10 workers die every day (more than 3,800 people according to the latest data published by Eurostat).

Engidi is a startup dedicated to designing wearables based on Internet of Things technology. To offer an effective solution for increasing workplace safety in companies, Engidi has created an IoT device that integrates non-invasively into a standard work helmet. This smart helmet collects information on working conditions to ensure good safety management in the workplace.

The solution is built around an intelligent device that monitors worker safety. It consists of multiple sensors, an NB-IoT connectivity module and a location system, all integrated non-invasively inside the certified protective helmet.

The digital sensors monitor variables such as thermal stress conditions, height or the use of the helmet itself. The system also reports impacts and falls together with the location of the affected worker, has an emergency call button and monitors the number of workers in a given area, warning of excessive concentrations of people in dangerous areas.

NB-IoT connectivity makes it possible to send the data to a digital platform for real-time visualization by occupational risk control units, which can then study behavior patterns to implement more efficient and safer work routines. All of this helps prevent mishaps and shortens reaction and intervention times in accidents, which can be decisive in saving lives.

Engidi is financed by Wayra and has the technological support of Telefónica. The NB-IoT solution of the connected helmet is being tested in Telefónica’s The Thinx laboratories. These tests emulate real situations and environments, such as the networks found in different Latin American countries.

The implementation of the connected helmet in professional environments can benefit millions of people around the world. In Europe alone, 35 million workers wear helmets for personal protection at work. Sectors such as industry, mining, naval, construction or forestry can benefit from greater workplace safety thanks to this IoT solution.

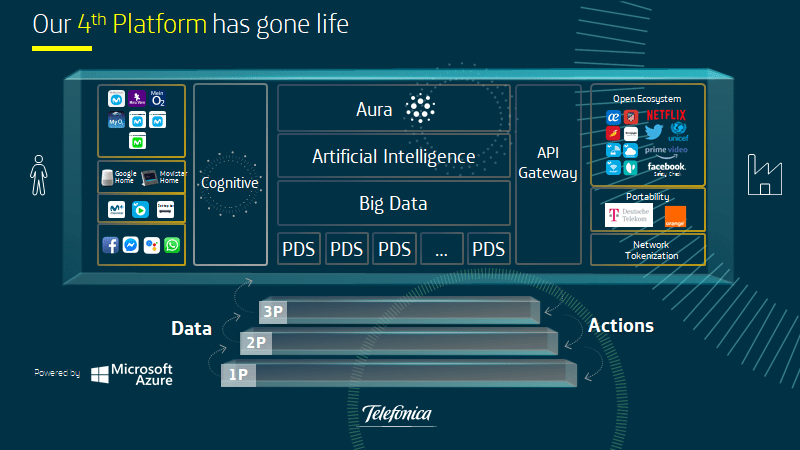

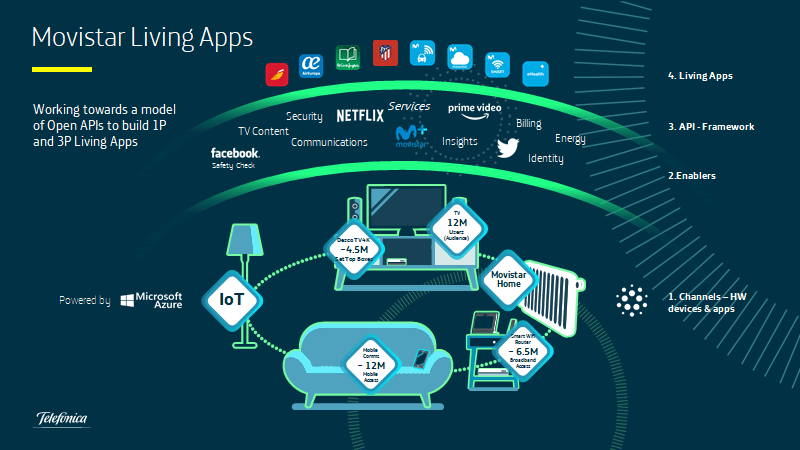

Telefónica is the leading telecommunications company in terms of innovation and a forerunner in introducing the latest Artificial Intelligence technology to people’s daily lives. This is why we have gone one step further, converting the home into a genuine computer, smart and open, able to provide third-party services in order to enhance the users’ consumer experience. This is what we refer to as Home as a Computer (HaaC).

Drawing an analogy between the home ecosystem and a computer, the UHD decoder, which delivers television channels over HDMI, plays the role of the computer monitor, only in the living room.

The Wi-Fi router, with its signal boosters, which connects us to the IoT world in the form of lights, boilers and other home devices, would be the equivalent of the computer’s peripheral devices. Now, thanks to Movistar Home, we provide the user with a complete keyboard, speakers and a microphone so they can connect to Telefónica’s services through Aura, Telefónica’s artificial intelligence.

To complete this ecosystem, we have apps on Smart TVs and mobile devices which connect to the home Wi-Fi and which are included in the same service contract, with direct control of the mobile devices’ SIMs. Moreover, like any good operating system, we also have a wide range of default services for the home, such as ID verification by checking the documents of the people who subscribe to Telefónica services, a landline and mobile telephone number as a digital identification mechanism, and third-party billing of services provided by our partners.

These are some examples of our services, from technical support in the home to Contact Centre services, as well as remote support for devices at home and security services such as “Secure Connection”, Movistar Car and Movistar Cloud. All this technology makes Telefónica the telecommunications company that brings the most technological services to its clients’ homes.

Therefore, thanks to the installation of Aura on the mobile apps and Movistar Home connected to the home Wi-Fi network, we have achieved a unique technological ecosystem which allows third-party products and services to be added in order to enhance our clients’ experiences. This is the precise point at which we implement the concept of the Living Apps, applications developed by external companies and incorporated into our ecosystem in order to improve our clients’ consumer experience in the home.

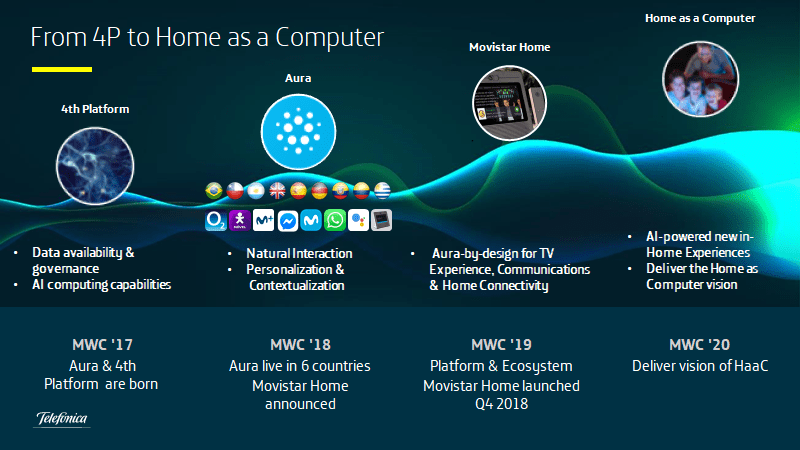

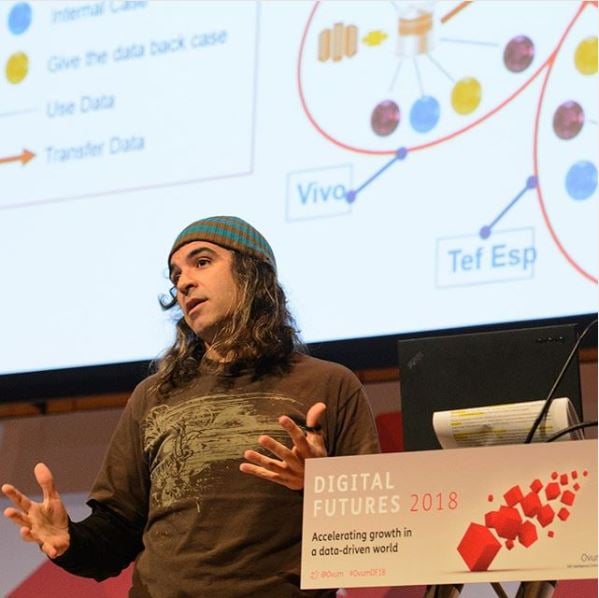

When I became Chief Data Officer at Telefónica, I was faced with new challenges and possibilities as I began carrying out this new role in the company. One of the first steps was building something which we internally called the Fourth Platform. To explain this new project, I always use the famous “Daisy strategy”, which explains how we intended to normalise data in a URM model in order to build standardised technology across the group. Data normalisation would be driven by use cases that got the best out of Machine Learning and Data Scientists. What’s more, we had the task of launching the Big Data & Artificial Intelligence unit for the Telefónica group: LUCA. This was the first step towards the birth of Aura and its presentation at Microsoft.

The whole Fourth Platform project, which was about to be born, required building a complete system holding the users’ data plus their Personal Data Spaces, with their generated data and their insights. This would allow us to create a PIMS (Personal Information Management System) that supported GDPR by design, with data portability and consent management, and in which every interaction could be carried out in Natural Language using Cognitive Services and AI models. And that is how Y.O.T. (You On Telefónica) was born. The name Aura had not yet taken hold, but that was essentially it. I put all this into a drawing showing that we needed APIs, ETL processes to normalise data with URM, a governance framework, etc.

To be able to create both LUCA and the Fourth Platform, I reorganised the CDO unit, bringing together the cross-cutting Brand Experience and CTO teams, the Big Data and Machine Learning technology teams that generated revenue in LUCA, and the teams doing data work within the Fourth Platform. All of this was done in tandem with ElevenPaths, which managed the company’s cybersecurity.

In August 2016, a group of colleagues from Telefónica and I travelled to Silicon Valley and Seattle to see where the world of technology was heading that summer. Those trips to the USA helped us a lot. In a single week we learned, defined and drew up future plans, laying the foundations on which the whole architecture of the Fourth Platform would be based.

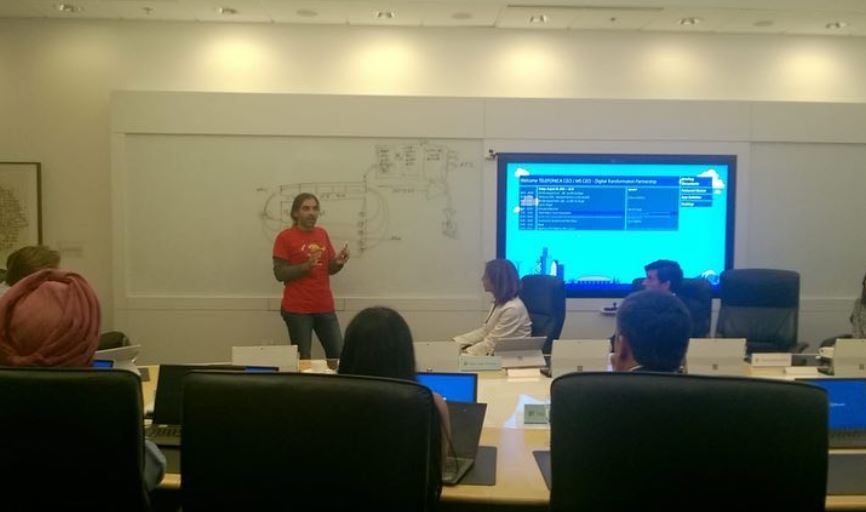

One of the companies we visited decided to invest in us and to help us. It all came out of a meeting that I will never forget because of what it meant for us: a meeting in Redmond, at Microsoft’s headquarters, with Satya Nadella and his team, which led to the collaboration we needed to build Aura. We had already had dinner with Satya Nadella at the 2015 Mobile World Congress. That night we talked about technology, data, and what the future held for A.I., augmented reality, mobile devices and the telecommunications industry.

At the meeting in August 2016, our side was made up of José María; José Cerdán, who few people know is one of the intellectual fathers of LUCA and is responsible both for its name and for the fact that we built it; the incredible and incomparable Guenia, who always brings innovation to whatever we’re doing; and myself, who had to explain what we were going to do. The Microsoft account team also came from Spain, led by Pilar López, who put her heart and soul into this project; Juan Troytiño, who pulled out all the stops to maintain the relationship between the two companies despite the difficulties that two such large companies often encounter; and David Cervigón, a friend and Cloud architect with a unique vision, who knew where and how Microsoft could help us.

Company heavyweights from Microsoft Corp came, with Satya Nadella himself at the forefront, along with Peggy Johnson, Lili Cheng and the great Gurpreet Pall. To give you an idea, Peggy supported us during the launch at MWC 2017, and Gurpreet Pall at MWC 2018. And it all started at that meeting in August 2016. I had 10 minutes to talk in front of Satya Nadella and tell him what we were going to do, when we were going to do it and what we needed from Microsoft.

I explained what we were going to build in the same way I had explained it to my colleagues, with my famous daisy diagram. I drew it on a whiteboard in the room and explained each part. I didn’t bring a PowerPoint presentation or a video; I just drew and told him what we intended to launch at Telefónica. I explained the technological parts, the abstraction layers, the data normalisation, the bot, the cognitive services, where the insights were going to be managed, etc.

He liked the idea and believed that we would deliver it, so Satya Nadella told us we could count on Microsoft for MWC 2017. Peggy Johnson helped us with anything we needed along the way, Pilar López called up her engineers so they could lend us a hand, Juan Troytiño helped us with the HoloLens and David Cervigón helped us with LUIS, Bot Framework, etc.

As a result of this first contact, we met up again in August 2017 and August 2018 for more meetings, but on different topics. In the last two meetings we talked about Movistar Home, Privacy, Data Economy, Network Tokenisation, Contact Centres, BlockChain, Quantum Computing… Out of all of this, one phrase that Satya Nadella said to us in the last meeting has stayed with me:

“You guys say you are going to do something, and you do it” … And for me, everything began with the drawing I used to explain Aura to Satya Nadella.

Source: El lado del Mal

At the 2018 edition of MWC, we announced that we were working on Movistar Home, a device aimed at reinventing the home incorporating Aura, Telefónica’s artificial intelligence, for its launch at the end of last year. This launch formed part of a company strategy based on bringing Telefónica’s artificial intelligence to users’ homes, using Movistar Home as a launching pad and converting the ecosystem of Telefónica’s home-installed devices into a “super computer”, which we have named Home as a Computer (HaaC).

At first, we focused on Telefónica’s core home services, Wi-Fi connectivity, television and communications, although we also anticipated the direction we were going to take with this project, incorporating areas such as family games and smart homes.

From the beginning, we were clear that the device would have to have two essential features: a touch screen and the incorporation of natural language in its interaction with our users. This would allow us to develop a key device when it comes to enhancing access to digital services from the home.

As well as the enhancement of these services, the hardware and software capacities of the device also enable us to provide our homes with:

– Second Screen: A second screen that enhances the user experience when enjoying content played on the main television screen, from supplementing what’s being viewed with information to creating interactive and smart advertising models which connect us to what is known as TV-Commerce.

– Hyper-Edge Computing: From the beginning, Movistar Home was designed with the ability to integrate micro-services. This would complement Telefónica’s Edge Computing strategy with a device that could help us improve the implementation of services which require minimal latency, such as gaming.

– Access to services from a third-party ecosystem: The device was created with the aim of enabling the opening up of the home ecosystem to services from third parties, which would provide other companies the opportunity to enhance access to their services from the home, as we have done with Telefónica’s services.

Its location in the living room, the heart of family life, facilitates interaction with Movistar+ content on the television and with calls and video calls. This is why Movistar Home has these elements built-in from the very beginning.

Among the technical characteristics we wanted the device to have, the most important are privacy, ensured by a physical cover for the webcam and a button that turns off the microphone at hardware level, and the “always on” feature, which keeps the device permanently on so that users are encouraged to use it and never have to worry about battery life when they need it.

IoT technology makes the Wanda Metropolitano football stadium one of the most advanced in the world, thanks to its great connectivity capacity and the audiovisual experience and unique lighting it offers to the spectators.

In recent years, much progress has been made in solving complex problems with Artificial Intelligence algorithms. These algorithms need a large volume of information to continuously discover and learn hidden patterns in the data. However, this is not how the human mind learns. A person does not need millions of data points and many iterations to solve a particular problem; a few examples are enough. In this context, techniques such as semi-supervised learning are playing an increasingly important role.

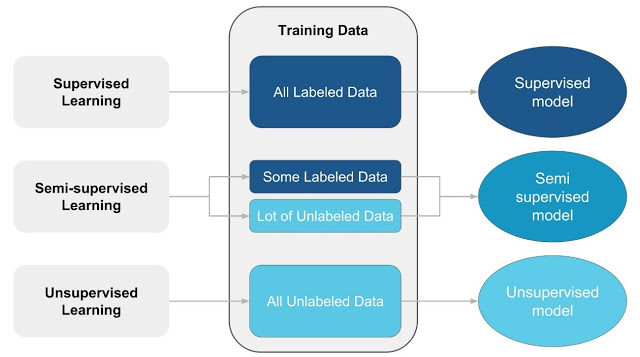

Within Machine Learning techniques, we can find several well-differentiated approaches (see Figure 1). Supervised algorithms work with labelled data sets and aim to build predictive models, either for classification (estimating a class) or regression (estimating a numerical value). These models are trained on labelled data and then make predictions about unlabelled data. Unsupervised algorithms, by contrast, use unlabelled data, and one of their objectives is to group the data into clusters according to the similarity of their characteristics. Unlike these two more traditional approaches, semi-supervised algorithms use a small amount of labelled data and a large amount of unlabelled data as their training set. They try to exploit the structural information contained in the unlabelled data to build predictive models that perform better than those trained on labelled data alone.

Semi-supervised learning models are increasingly used today. A classic example of their value is the analysis of conversations recorded in a call centre. To automatically infer characteristics of the speakers (gender, age, geography, …), their moods (happy, angry, surprised, …) or the reasons for the call (billing error, level of service, quality problems, …), among others, a large volume of already labelled cases is needed from which to learn the patterns of each type of call. Labelling these cases is arduous, since labelling audio files generally takes time and a lot of human intervention. In situations where labelled cases are scarce, whether because labelling is expensive, requires a long collection time or a lot of human intervention, or simply because the labels are completely unknown, semi-supervised learning algorithms are very useful thanks to the way they operate. However, not all problems can be tackled directly with these techniques: a problem must have certain essential characteristics for this type of algorithm to solve it effectively.

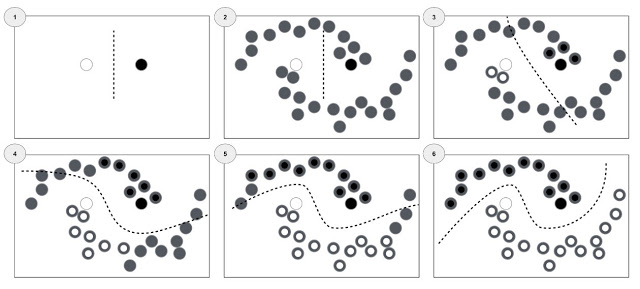

Probably the first approach to using unlabelled data to build a classification model is the Self-Learning method, also known as self-training, self-labelling, or decision-directed learning. Self-learning is a very simple wrapper method and one of the most widely used in practice. The algorithm first trains a classifier on the few labelled examples. The classifier is then used to predict the unlabelled data, and its most reliable predictions are added to the training set. Finally, the classifier is retrained on the new training set. This process (see Figure 2) is repeated until no new data can be added to the training set.
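The self-learning loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes 2-D points, a nearest-centroid base classifier, and a hypothetical `min_margin` parameter that measures "reliability" as how much closer a point is to its predicted class centroid than to the runner-up.

```python
import math


def centroids(labelled):
    """Mean point of each class in the labelled set."""
    sums = {}
    for (x, y), label in labelled:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def predict(cents, point):
    """Nearest-centroid label plus a margin used as a reliability score."""
    dists = sorted((math.dist(point, c), label) for label, c in cents.items())
    margin = dists[1][0] - dists[0][0] if len(dists) > 1 else math.inf
    return dists[0][1], margin


def self_train(labelled, unlabelled, min_margin=1.0):
    """Self-learning loop: fit, pseudo-label confident points, refit, repeat.

    labelled: list of ((x, y), label); unlabelled: list of (x, y).
    `min_margin` is a hypothetical reliability threshold (an assumption).
    """
    labelled = list(labelled)
    pool = list(unlabelled)
    while pool:
        cents = centroids(labelled)  # (re)train on the current labelled set
        confident = []
        for point in pool:
            label, margin = predict(cents, point)
            if margin >= min_margin:
                confident.append((point, label))
        if not confident:  # stop when no new data can be added
            break
        labelled.extend(confident)  # add the most reliable predictions
        added = {point for point, _ in confident}
        pool = [p for p in pool if p not in added]
    return labelled, pool
```

With one labelled seed per class at opposite ends of the data, nearby points are pseudo-labelled in the first round, while a point roughly equidistant from both classes never becomes reliable enough and stays in the unlabelled pool. Any classifier that outputs a confidence score, such as class probabilities, could take the place of the nearest-centroid step.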

The semi-supervised approach assumes a certain structure in the underlying distribution of the data: points that are close to each other are assumed to have the same label. Figure 3 shows how semi-supervised algorithms adjust the decision boundary between the labels, iteration after iteration. If only labelled data is used, the decision boundary is very different from the one learned when the structural information of all the unlabelled data is incorporated.
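The "nearby points share a label" assumption can be made concrete with label propagation, a semi-supervised technique distinct from self-learning that relies on the same idea: known labels flow along a similarity graph. The sketch below is illustrative and not necessarily the method behind Figure 3; the Gaussian edge weights, the bandwidth `sigma` and the fixed iteration count are assumptions.

```python
import math


def label_propagation(points, labels, sigma=1.0, iterations=100):
    """Spread known labels to unlabelled points along a similarity graph.

    points: list of (x, y) tuples; labels: class name, or None if unlabelled.
    Assumptions: Gaussian (RBF) edge weights with bandwidth `sigma`, and
    labelled points clamped to their known class on every iteration.
    """
    classes = sorted({lab for lab in labels if lab is not None})
    n = len(points)
    # Dense affinity matrix: nearby points get weights near 1, distant ones near 0.
    w = [[0.0 if i == j else
          math.exp(-math.dist(points[i], points[j]) ** 2 / (2 * sigma ** 2))
          for j in range(n)] for i in range(n)]
    # One score per class for every point; labelled points start one-hot.
    f = [[1.0 if labels[i] == c else 0.0 for c in classes] for i in range(n)]
    for _ in range(iterations):
        new_f = []
        for i in range(n):
            total = sum(w[i])
            if labels[i] is not None or total == 0.0:
                new_f.append(f[i])  # clamp known labels (and skip isolated points)
                continue
            # Weighted average of the neighbours' class scores.
            new_f.append([sum(w[i][j] * f[j][k] for j in range(n)) / total
                          for k in range(len(classes))])
        f = new_f
    # Each point takes the class with the highest propagated score.
    return [classes[max(range(len(classes)), key=row.__getitem__)] for row in f]
```

On a line of points with one labelled seed at each end and a gap in the middle, every unlabelled point takes the label of the seed on its side, because edges across the gap carry almost no weight. A classifier trained only on the two seeds would simply split at the midpoint, regardless of where the gap lies.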

Another situation in which partially labelled data is useful is anomaly detection, a typical problem in which it is difficult to obtain a large amount of labelled data. This type of problem can be approached with unsupervised techniques, whose objective is to identify, based on the characteristics of the data, the cases that differ greatly from the usual pattern of behaviour. In this context, the subset of labelled data can help to evaluate the different iterations of the algorithm and thus guide the search for its optimal parameters.
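A minimal sketch of this idea, assuming a simple z-score detector as the unsupervised model and F1 on the labelled subset as the selection criterion (both illustrative choices, not the authors' method). The labels never train the detector; they only decide which candidate threshold works best.

```python
import statistics


def z_scores(values):
    """Unsupervised anomaly score: distance from the mean in standard deviations."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return [0.0] * len(values)
    return [abs(v - mean) / stdev for v in values]


def f1(predicted, actual):
    """F1 score of boolean anomaly flags against the known labels."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def tune_threshold(values, tagged, candidates=(1.0, 1.5, 2.0, 2.5, 3.0)):
    """Pick the z-score threshold that best matches a small tagged subset.

    tagged: {index: is_anomaly} for the few labelled cases (hypothetical format).
    The detector never sees these labels; they only score each candidate.
    """
    scores = z_scores(values)
    idx = sorted(tagged)
    actual = [tagged[i] for i in idx]

    def quality(threshold):
        return f1([scores[i] > threshold for i in idx], actual)

    return max(candidates, key=quality)  # ties go to the first (lowest) candidate
```

The same pattern applies to any unsupervised detector with tunable parameters, such as the number of clusters or a density radius: fit without labels, then rank each configuration on the few labelled cases.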

Finally, the examples above illustrate how using unlabelled data together with a small amount of labelled data can substantially improve the accuracy of both supervised and unsupervised models.

Written by Alfonso Ibañez and Rubén Granados


Virtual Assistants are increasingly relevant in people’s daily lives, especially when it comes to requesting traffic information, reminders, weather reports… However, when it comes to interacting with service providers such as a mobile phone company, users tend to prefer traditional channels, such as phone calls or visits to stores. Two questions therefore arise: how can large companies use new technologies to improve the customer experience? And, in this regard, do Virtual Assistants improve the user experience with telcos?

Almost all large companies have huge amounts of data about their customers. This data is often difficult to handle because it is not properly organised to provide a good personalised experience. When data is not managed correctly, the consequence for customers is a negative experience and low levels of satisfaction.

One solution to this problem, which many companies face, is for Virtual Assistants to draw on well-organised customer data to give users personalised answers. In this context, Telefónica has developed Aura, its Artificial Intelligence (AI), which was created with the goal of improving the relationship between its customers and the company.

Virtual Assistants (VAs) which are based on data can save the user a lot of time when making arrangements, facilitating access to information by providing a single point of contact, via voice or text, regardless of the service. Furthermore, using AI often generates personal satisfaction because the user feels that they are making better use of new technologies.

For telecommunication companies, on the other hand, data-driven VAs improve the way customers perceive a service by providing a personalised, fast and efficient experience.

By achieving this improvement, the company differentiates itself from its competitors, increases customer loyalty, and is able to attract new users.

All of this opens the door to a future in which users will see data-driven VAs as a continuously evolving technological trend that simplifies, strengthens and improves the relationship between telecommunications providers and their customers.