While discussion of and excitement about the metaverse are growing, there is also doubt, fear, concern and uncertainty about the potential risks of an environment in which the boundaries between the physical and virtual worlds become increasingly blurred.

Put simply, the metaverse can be thought of as the next iteration of the internet. The internet began as a set of independent, isolated online destinations that evolved over time into a shared virtual space, and the metaverse is expected to evolve in a similar way.

“Metaverse is a shared virtual collective space created by the convergence of physical and enhanced digital reality” (Gartner).

Is the metaverse betting on Cyber Security?

The World Economic Forum (WEF) describes the metaverse as a persistent and interconnected virtual environment in which social and economic elements mirror reality. Users can interact with it and with each other through immersive devices and technologies, while also interacting with digital assets and properties.

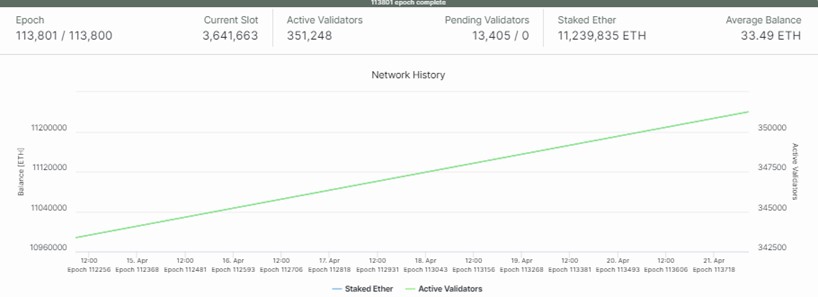

The metaverse is neither device-independent nor owned by a single provider. It is an independent virtual economy, enabled by digital currencies and non-fungible tokens (NFTs).

The metaverse is a combinatorial innovation: for it to work, multiple technologies and trends need to be combined.

These include virtual reality (VR), augmented reality (AR), flexible work styles, head-mounted displays (HMDs), cloud computing, the Internet of Things (IoT), 5G connectivity and programmable networks, artificial intelligence (AI) and, of course, Cyber Security.

Challenges of the metaverse for organisations

The Cyber Security challenges that organisations face when operating in the metaverse can have significant implications for the security and privacy of their assets and users.

These challenges include:

- The theft of virtual assets can lead to significant financial losses for organisations.

- Identity theft can compromise sensitive information and resources.

- Malware attacks can infect entire virtual environments, causing widespread damage.

- Social engineering attacks can trick users into revealing confidential information or performing unauthorised actions.

- The lack of standardisation in the metaverse can make it difficult for organisations to develop consistent security protocols and ensure interoperability between different virtual environments.

- The novelty of the metaverse and the low security awareness of users can lead to poor security practices, making them more vulnerable to cyber-attacks.

The Metaverse Alliance suggests that, in traditional internet use, users do not have a complete digital identity that belongs to them. Instead, they provide their personal information to websites and applications that can use it for a variety of purposes, including making money from it.

In the metaverse, however, users will need a single, complete digital identity that they control and can use across platforms. This will require new systems and rules to ensure users’ privacy and security.

In short, users need to own and control their digital identity in the metaverse, rather than leaving it to third-party websites and applications. It is very worrying that most internet users do not have a digital identity of their own.

Understanding the new virtual environment and its risks

Statista expects the metaverse market to grow significantly in the coming years, with revenues increasing at a compound annual growth rate (CAGR) of more than 40% between 2021 and 2025.

It also expects this growth to be driven by the increasing adoption of virtual and augmented reality (VR and AR) technologies, led by the gaming and eSports industries, and by growing interest in virtual social experiences.
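To put the quoted growth rate in perspective, the short sketch below shows how a CAGR compounds. It is only an illustration: it assumes four compounding periods between 2021 and 2025 and uses 40% as a lower bound, since the forecast says "more than 40%". The function names and the starting value of 100 are hypothetical and do not come from Statista.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor implied by compounding `cagr` once a year for `years` years."""
    return (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """CAGR that takes `start_value` to `end_value` over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: 2021 -> 2025 is treated as four annual compounding periods.
multiple = growth_multiple(0.40, 4)
print(f"A 40% CAGR over 4 years multiplies revenue by about {multiple:.2f}x")

# Going the other way: an index that grows from 100 to 384.16 in four years
# corresponds to a CAGR of roughly 40% (illustrative numbers only).
print(f"Implied CAGR: {implied_cagr(100, 384.16, 4):.1%}")
```

Under these assumptions, a 40% CAGR means revenues would be close to four times their 2021 level by 2025, which gives a sense of the pace of growth the forecast implies.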

In the aftermath of the Covid-19 pandemic, the shift towards digital and virtual experiences has accelerated, further driving growth in the metaverse market.

These forecasts present significant opportunities for companies and investors, particularly in the entertainment and social networking sectors. However, the market also poses several challenges in terms of regulation, Cyber Security and standardisation that need to be addressed to ensure its sustainable growth.

The metaverse is not immune to cyberthreats

The metaverse, like any technology, is not immune to risks and vulnerabilities. Here are some of the technological and cyber risks associated with the metaverse:

- In the metaverse, users will create and share large amounts of personal data. This includes information such as biometric data, personal preferences and behavioural patterns. Ensuring the security and privacy of this data will be critical to prevent leaks and unauthorised access to sensitive information.

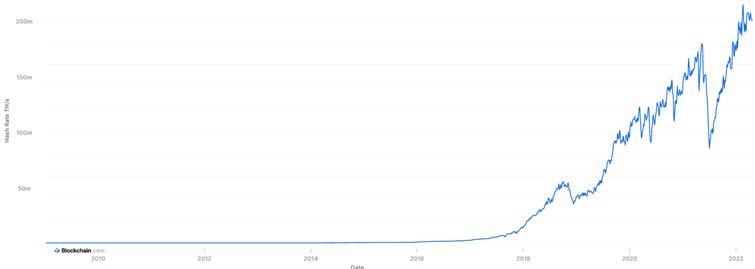

- As the metaverse becomes more popular, it will attract the attention of cybercriminals. Cybercrimes such as distributed denial of service (DDoS) attacks, malware and phishing scams could compromise the security of the virtual world and its users.

- The metaverse is likely to involve the exchange of virtual currencies and assets. If these assets are not adequately protected, they could be vulnerable to theft, fraud, and hacking.

- With the immersive nature of the metaverse, users may become addicted and spend excessive time in the virtual world. This could lead to physical and mental health problems, as well as social isolation.

- The metaverse could become dominated by a few powerful companies or individuals, resulting in a centralised and controlled virtual world. This could limit users’ freedom and innovation.

As we will see in the next article in this series, it is essential to develop robust security protocols and regulations to mitigate these risks and ensure that the metaverse remains a safe environment for all users.

Featured photo: Julien Tromeur / Unsplash