In today's success story, we tell you about one of our key projects using Big Data to improve communication between a business and its audience in the out-of-home (OOH) advertising sector. This time, we worked with Exterion Media to transform their digital strategy with the latest technological trends, such as Big Data and IoT, so they could better identify their target audience and design high-impact campaigns on the London Underground network.

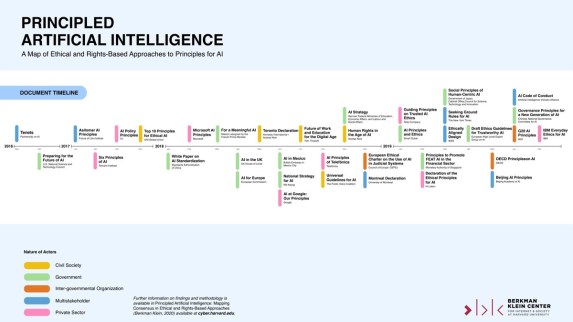

Investment in OOH advertising is expected to increase to £1 billion by 2021. However, existing market metrics did not allow Exterion Media to profile audiences in offline environments as accurately as online ones. To secure this expected growth, outdoor advertising companies are looking for ways to embrace the latest technological trends as advertising shifts toward a more audience-focused model.

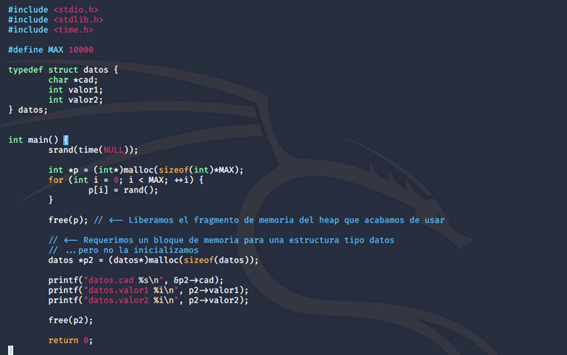

Combining traditional sources of information (panels and surveys) with data generated by mobile devices offers a unique opportunity for companies seeking to better understand their users, 90% of whom have a mobile phone at their fingertips 24 hours a day. The anonymized and aggregated data obtained through our Crowd Analytics platform provides a better understanding of the target audience.

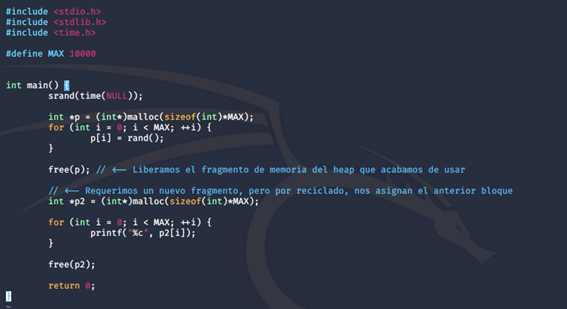

Our UK team of Data Scientists and Data Engineers uses the mobility analysis platform to process and analyse more than 4 million mobile data points every day. Analysing this extrapolated data yields insights into the mobility patterns of the London Underground audience, such as the most frequented locations and peak times. This information is highly relevant for retail companies looking for the ideal places and moments to reach their audience.
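The post does not describe how the platform derives these patterns, so the following is only a minimal illustration of the general idea, not the Crowd Analytics implementation. It assumes a hypothetical record format of (anonymized device ID, station, ISO timestamp), counts distinct devices per station and hour, suppresses buckets below an assumed minimum count so results stay aggregated, and reports the busiest stations with their peak hour. The function name, record format and `min_devices` threshold are all hypothetical, and the extrapolation step mentioned above is omitted.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical record shape: (anonymized_device_id, station_name, ISO timestamp).
# This is an assumption for illustration; the real data model is not described.
Event = tuple[str, str, str]


def mobility_summary(events: list[Event], top_n: int = 3, min_devices: int = 2) -> dict:
    """Return the busiest stations and the peak hour at each one.

    Counts distinct anonymized devices per (station, hour) and drops buckets
    with fewer than `min_devices` devices (an assumed aggregation threshold).
    """
    devices = defaultdict(set)  # (station, hour) -> set of device ids
    for device_id, station, ts in events:
        hour = datetime.fromisoformat(ts).hour
        devices[(station, hour)].add(device_id)

    # Keep only buckets large enough to report in aggregate.
    counts = {key: len(ids) for key, ids in devices.items() if len(ids) >= min_devices}

    # Sum distinct-device counts over hours (a device seen in two hours counts twice).
    per_station = Counter()
    for (station, _hour), n in counts.items():
        per_station[station] += n

    summary = {}
    for station, total in per_station.most_common(top_n):
        # Peak hour: the hour with the most distinct devices at this station.
        peak_hour = max(
            (hour for (s, hour) in counts if s == station),
            key=lambda h: counts[(station, h)],
        )
        summary[station] = {"device_hours": total, "peak_hour": peak_hour}
    return summary


# Usage with made-up sample events.
sample = [
    ("a", "Oxford Circus", "2019-05-01T08:15:00"),
    ("b", "Oxford Circus", "2019-05-01T08:40:00"),
    ("c", "Oxford Circus", "2019-05-01T08:55:00"),
    ("a", "Oxford Circus", "2019-05-01T17:10:00"),
    ("d", "Oxford Circus", "2019-05-01T17:20:00"),
    ("e", "Bank", "2019-05-01T09:05:00"),
    ("f", "Bank", "2019-05-01T09:30:00"),
    ("g", "Holborn", "2019-05-01T12:00:00"),  # single device: suppressed
]
summary = mobility_summary(sample)
print(summary)
```

Counting distinct devices rather than raw events keeps one very active phone from inflating a station's footfall, and the minimum-count filter is one simple way to report only aggregated figures.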

Mick Ridley, director of data strategy at Exterion Media, says:

> After this project, we can speak with confidence about how we capture audiences through advertising, and offer our clients the ability to better define their target audience.


Exterion Media now understands how audiences move and has access to Big Data analytics tools that help it strengthen its sales pitch to clients. Big Data allows us to transform information into value and enable data-driven decisions.

To stay up to date with LUCA, visit our website, subscribe to LUCA Data Speaks and follow us on Twitter, LinkedIn or YouTube.