Did you know that there is a 100% secure encryption algorithm? It is known as the Vernam cipher (or one-time pad). In 1949, Claude Shannon proved mathematically that this algorithm achieves perfect secrecy. And did you know that it is (almost) never used? Well, things may change now that a group of Chinese researchers has broken the record for quantum key distribution between two stations 1120 km apart. We are one step closer to reaching the Holy Grail of cryptography.

The Paradox of Perfect Encryption That Cannot Be Used in Practice

How is it possible that the only 100% secure encryption is not used? In cryptography things are never simple. To begin with, Vernam’s cipher is 100% secure as long as these four conditions are met:

- The encryption key is generated in a truly random way.

- The key is as long as the message to be encrypted.

- The key is never reused.

- The key is kept secret, being known only by the sender and receiver.
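As a minimal sketch of the cipher these four conditions describe, the snippet below XORs a message with a key of the same length. The names `generate_key` and `xor_bytes` are hypothetical, and Python's `secrets` module is only a stand-in: it is a cryptographically strong pseudo-random source, not the truly random source the first condition demands.

```python
import secrets


def generate_key(length: int) -> bytes:
    """Return `length` bytes from the OS random source.

    Assumption: this is a stand-in for a truly random generator.
    """
    return secrets.token_bytes(length)


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR data with a key of identical length.

    The same function encrypts and decrypts, since (m ^ k) ^ k == m.
    """
    if len(key) != len(data):
        raise ValueError("the key must be exactly as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))


message = b"ATTACK AT DAWN"
key = generate_key(len(message))      # as long as the message, used once
ciphertext = xor_bytes(message, key)  # encrypt
recovered = xor_bytes(ciphertext, key)  # decrypt with the same key
```

The sketch enforces only the length condition; randomness, single use and secrecy of the key remain the responsibility of whoever generates and distributes it.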

Let us look at the first condition. A truly random bit generator requires a natural source of randomness. The problem is that designing a hardware device to exploit this randomness and produce a bit sequence free of biases and correlations is a very difficult task.

Then another, even more formidable challenge arises: how do you securely share keys as long as the message to be encrypted? Think about it: if you need to encrypt information, it is because you do not trust the communication channel. So, which channel can you trust to send the encryption key? You could encrypt it in turn, but with what key? And how do you share it? We get into an endless loop.

The Key to Perfect Security Lies in Quantum Mechanics

Quantum key distribution brilliantly solves all of the Vernam cipher's issues at one stroke: it allows you to create random keys of the desired length without any attacker being able to intercept them undetected. Let us see how it does it.

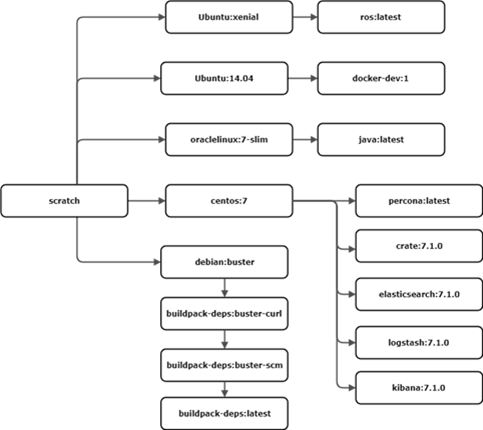

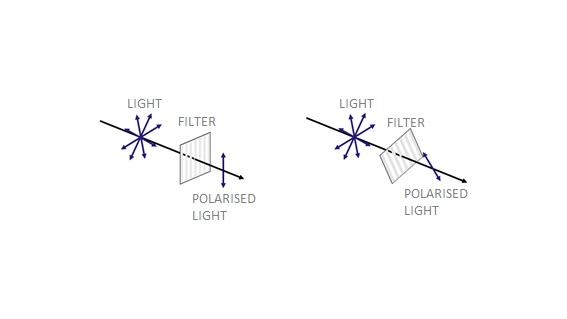

As you may remember from your physics lessons at school, light is electromagnetic radiation composed of photons. These photons travel vibrating with a certain intensity, wavelength and one or many directions of polarisation. If you are a photography enthusiast, you may have heard of polarising filters. Their function is to eliminate all the oscillation directions of the light except one, as explained in the following figure:

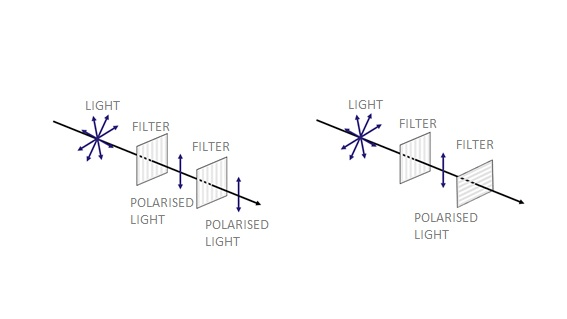

Now imagine you go into the physics laboratory and send photons one by one, each polarised in one of four directions: vertical (|), horizontal (-), diagonal to the left (\) or diagonal to the right (/). These four polarisations form two bases, each made up of two orthogonal directions: on the one hand, | and -, which we will call the (+) basis; and, on the other, / and \, which we will call the (×) basis.

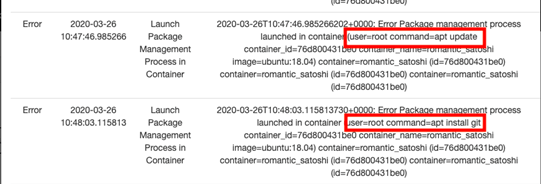

The receiver of your photons uses a filter, for example a vertical one (|). Clearly, vertically polarised photons will pass through unchanged, while horizontally polarised photons, being perpendicular to the filter, will not pass.

Surprisingly, half of the diagonally polarised ones will pass through the vertical filter and come out vertically polarised! Therefore, if a photon is sent and passes through the filter, it is impossible to know whether it had vertical or diagonal polarisation (either \ or /). Similarly, if it does not pass, it is impossible to say whether it was horizontally or diagonally polarised. In both cases, a diagonally polarised photon might or might not pass with equal probability.
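The filter behaviour can be sketched with the standard cos² rule (Malus's law applied to single photons), which the article does not state explicitly but which reproduces its three cases: always, never and half of the time. The angle convention and the names `ANGLES`, `pass_probability` and `passes` are assumptions made for illustration.

```python
import math
import random

# Assumed angle convention in degrees for the four polarisation symbols.
# An ASCII hyphen stands for the horizontal direction.
ANGLES = {"-": 0, "/": 45, "|": 90, "\\": 135}


def pass_probability(photon: str, filter_: str) -> float:
    """Probability that a photon passes a filter: cos^2 of the angle between them."""
    delta = math.radians(ANGLES[photon] - ANGLES[filter_])
    return math.cos(delta) ** 2


def passes(photon: str, filter_: str, rng: random.Random) -> bool:
    """Simulate one photon meeting one filter."""
    return rng.random() < pass_probability(photon, filter_)


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
trials = 10_000
diagonal_fraction = sum(passes("/", "|", rng) for _ in range(trials)) / trials
# diagonal_fraction comes out close to 0.5, while "|" always passes and "-" never does
```

A single pass or block therefore says nothing certain about the original polarisation, which is the ambiguity the protocol below relies on.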

And the paradoxes of the quantum world do not end here.

The Spooky Action at a Distance That Einstein Abhorred

Quantum entanglement occurs when a pair of particles, such as two photons, interact physically. A laser beam fired through a certain type of crystal can cause individual photons to split into pairs of entangled photons, A and B. The two photons can then be separated by as great a distance as you want. And here comes the good part: when photon A adopts a direction of polarisation, its entangled partner B adopts the same state, no matter how far away it is from A. This is the phenomenon that Albert Einstein sceptically called “spooky action at a distance”.

In 1991, the physicist Artur Ekert thought of using this quantum property of entanglement to devise a system for transmitting random keys that would be impossible for an attacker to intercept without being detected.

Quantum Key Distribution Using Quantum Entanglement

Let us suppose that Alice and Bob want to agree on a random encryption key as long as the message, n bits long. First, they need to agree on a convention to represent the ones and zeros of the key using the polarisation directions of the photons, for example:

| Bit / Basis | + | × |
| --- | --- | --- |
| 0 | – | / |
| 1 | \| | \\ |

Step 1: A sequence of entangled photons is generated and sent, so that Alice and Bob receive the photons of each pair one by one. Anyone can generate this sequence: Alice, Bob or even a third party (trusted or not).

Step 2: Alice and Bob each choose a random sequence of measurement bases, + or ×, and measure the polarisation state of the incoming photons; it does not matter who measures first. When Alice or Bob measures the polarisation state of a photon, its state becomes perfectly correlated with that of its entangled partner. From that moment on, it is as if both were observing the same photon.

Step 3: Alice and Bob publicly compare which bases they have used and keep only those bits that were measured in the same basis. If everything has worked well, Alice and Bob share exactly the same key: since each pair of measured photons is entangled, they must necessarily obtain the same result if they both measure in the same basis. On average, the measurement bases will match 50% of the time, so if n pairs of photons are sent, the key obtained will be about n/2 bits long. The following is an example of the procedure:

| Step 1 | | | | | | | | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Position in sequence | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
| Step 2 | | | | | | | | | | | | |
| Alice’s random bases | × | × | + | + | × | + | × | + | + | × | + | × |
| Alice’s measurements | / | \\ | \| | \| | / | – | \\ | \| | – | \\ | – | / |
| Bob’s random bases | × | + | + | × | × | + | + | + | + | × | × | + |
| Bob’s measurements | / | – | \| | / | / | – | \| | \| | – | \\ | \\ | – |
| Step 3 | | | | | | | | | | | | |
| Matching bases | Yes | No | Yes | No | Yes | Yes | No | Yes | Yes | Yes | No | No |
| Key obtained | 0 | | 1 | | 0 | 0 | | 1 | 0 | 1 | | |
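The sifting in Step 3 can be replayed on the twelve positions of the example. This is only a sketch of the bookkeeping, not of the physics: the bases and measurement results are copied from the table (with an ASCII hyphen for the horizontal direction and `x` for the diagonal basis), and the names `BIT_OF` and `sift` are hypothetical.

```python
# Bit convention agreed by Alice and Bob: "-" and "/" mean 0, "|" and "\" mean 1.
BIT_OF = {"-": 0, "/": 0, "|": 1, "\\": 1}

# Data copied from the example table, positions 1 to 12.
alice_bases = list("xx++x+x++x+x")
bob_bases = list("x++xx++++xx+")
alice_results = ["/", "\\", "|", "|", "/", "-", "\\", "|", "-", "\\", "-", "/"]
bob_results = ["/", "-", "|", "/", "/", "-", "|", "|", "-", "\\", "\\", "-"]


def sift(bases_a, bases_b, results):
    """Keep only the bits measured at positions where both bases match."""
    return [BIT_OF[r] for a, b, r in zip(bases_a, bases_b, results) if a == b]


alice_key = sift(alice_bases, bob_bases, alice_results)
bob_key = sift(alice_bases, bob_bases, bob_results)
print(alice_key)  # [0, 1, 0, 0, 1, 0, 1]
print(alice_key == bob_key)  # True
```

Only the bases are exchanged in public; each side runs `sift` on its own private results, so the key itself never travels over the channel.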

But what if an attacker were intercepting these photons? Wouldn’t he or she also know the secret key generated and distributed? And what if there are transmission errors and the photons lose their entanglement along the way?

To solve these issues, Alice and Bob randomly select half of the bits of the obtained key and compare them publicly. If they match, they know there has been no error. They discard the compared bits and assume that the remaining bits are valid, meaning that a final key of n/4 bits has been agreed upon. If a considerable part does not match, then either there were too many random transmission errors or an attacker intercepted the photons and measured them on his or her own. In either case, the whole sequence is discarded and they must start again. As you can see, if the message is n bits long, on average 4n pairs of entangled photons must be generated and sent so that the key is the same length.
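The comparison step and the resulting photon budget can be sketched as follows. The function names are hypothetical, and the `max_error_rate` threshold is an illustrative assumption, since the article only says that "a considerable part" must not mismatch.

```python
import random


def verify_and_trim(alice_key, bob_key, rng, max_error_rate=0.05):
    """Publicly compare a random half of the sifted key.

    Returns the unrevealed half of Alice's key, or None when the revealed
    bits disagree too often and the whole sequence must be discarded.
    `max_error_rate` is an assumed, illustrative threshold.
    """
    n = len(alice_key)
    revealed = set(rng.sample(range(n), n // 2))
    errors = sum(alice_key[i] != bob_key[i] for i in revealed)
    if revealed and errors / len(revealed) > max_error_rate:
        return None
    # Revealed bits are now public, so only the others can serve as key.
    return [alice_key[i] for i in range(n) if i not in revealed]


def photon_pairs_needed(key_bits: int) -> int:
    """Half the pairs survive sifting and half of those survive the check."""
    return 4 * key_bits


rng = random.Random(42)  # fixed seed only to make the sketch repeatable
sifted = [0, 1, 0, 0, 1, 0, 1, 1]
final_key = verify_and_trim(sifted, sifted, rng)  # 4 bits remain
rejected = verify_and_trim(sifted, [1 - b for b in sifted], rng)  # None
```

With identical keys the function hands back the four unrevealed bits; with a fully corrupted copy every compared bit differs and the sequence is rejected.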

And couldn’t an attacker measure a photon and resend it without being noticed? Impossible because, once measured, the photon is in a definite state, not in a superposition of states. If he or she sends it on after observing it, it will no longer be a quantum object but a classical object with a definite state. As a result, whenever the attacker measured in the wrong basis, the receiver’s result will agree with the sender’s only 50% of the time. Thanks to the public comparison just described, the presence of an attacker in the channel can be detected. In the quantum world, it is impossible to observe without leaving a trace.
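A toy simulation makes the trace visible. It assumes a simplified model that is not in the article: whoever measures first fixes a basis and a bit for both photons, and any later measurement in a different basis gives a random result. The function names are hypothetical. Under that model, an attacker who measures every photon in a randomly chosen basis corrupts roughly a quarter of the sifted bits, because the guess is wrong half of the time and each wrong guess causes a mismatch half of the time.

```python
import random


def measure(state_basis, state_bit, basis, rng):
    """Measuring in the state's own basis returns its bit; otherwise 50/50."""
    return state_bit if basis == state_basis else rng.randint(0, 1)


def sifted_error_rate(pairs, eavesdrop, rng):
    """Fraction of sifted bits on which Alice and Bob disagree (toy model)."""
    errors = kept = 0
    for _ in range(pairs):
        a_basis, b_basis = rng.choice("+x"), rng.choice("+x")
        # The first measurement fixes the state of both photons: the
        # attacker's if she intercepts, otherwise Alice's.
        fixed_basis = rng.choice("+x") if eavesdrop else a_basis
        fixed_bit = rng.randint(0, 1)
        a_bit = measure(fixed_basis, fixed_bit, a_basis, rng)
        b_bit = measure(fixed_basis, fixed_bit, b_basis, rng)
        if a_basis == b_basis:  # sifting: keep matching bases only
            kept += 1
            errors += a_bit != b_bit
    return errors / kept


rng = random.Random(1)  # fixed seed only to make the sketch repeatable
clean = sifted_error_rate(20_000, eavesdrop=False, rng=rng)    # exactly 0.0
tapped = sifted_error_rate(20_000, eavesdrop=True, rng=rng)    # close to 0.25
```

An error rate of that size in the publicly compared bits is what tells Alice and Bob to discard the sequence.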

It goes without saying that Ekert’s original protocol is more sophisticated, but with this simplified description the experiment conducted by Chinese researchers in collaboration with Ekert himself can be understood.

China Beats Record for Quantum Key Distribution

The Chinese research team led by Jian-Wei Pan succeeded in distributing keys over a distance of 1120 km using entangled photons. This feat represents another major step in the race towards a totally secure quantum Internet for long distances.

So far, experiments on quantum key distribution have been carried out through fibre optics at distances of just over 100 km. The most obvious alternative, i.e. sending them through the air from a satellite, is not an easy task, as water and dust particles in the atmosphere quickly disentangle the photons. Conventional methods could not get more than one in six million photons from the satellite to the ground-based telescope, clearly not enough to transmit keys.

In contrast, the system created by the Chinese research team at the University of Science and Technology of China in Hefei managed to transmit a key at a rate of 0.12 bits per second between two stations 1120 km apart. Since the satellite can view both stations simultaneously for 285 seconds a day, it can transmit keys to them using the quantum entanglement method at a rate of 34 bits/day and an error rate of 0.045. This is a modest figure, but a promising development, considering that it improves previous efficiency by 11 orders of magnitude.
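The daily figure follows directly from the two numbers quoted above. As a rough sketch, the snippet also estimates how long it would take to accumulate a key of a given size; the 256-bit target and the name `days_for_key` are illustrative assumptions, not values from the experiment.

```python
import math

KEY_RATE_BITS_PER_S = 0.12      # rate quoted in the article
VISIBLE_SECONDS_PER_DAY = 285   # daily window in which both stations are in view

# About 34.2 bits, which the article rounds to 34 bits/day.
bits_per_day = KEY_RATE_BITS_PER_S * VISIBLE_SECONDS_PER_DAY


def days_for_key(key_bits: int) -> int:
    """Whole days of satellite passes needed to collect `key_bits` of key."""
    return math.ceil(key_bits / bits_per_day)


days_for_256 = days_for_key(256)  # hypothetical 256-bit target
```

At this rate a one-time pad for any real message is still far away, which is why the article calls the figure modest.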

In Ekert’s own words: “Entanglement provides almost ultimate security”. The next step is to overcome all the technological barriers. The race to build an attack-proof quantum Internet has only just begun, with China leading the way and well ahead of the pack.