1. Harnessing the data

2. Preparing for the big day

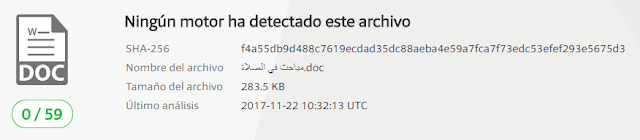

Because Black Friday matters so much to retailers, preparation begins months in advance. With the data-driven technologies mentioned previously, important decisions about stock management and the hiring of seasonal workers can be made with more confidence. Each year, the NRF (National Retail Federation) in the United States carries out a number of surveys, one of which revealed that retailers expect to hire 500,000 to 550,000 seasonal workers this year. By using the Big Data available to them, stores can predict the number of shoppers and hire the appropriate number of workers, thereby improving their operational efficiency. Efficiency is also key when it comes to stock management. Stores need sufficient quantities of the best-selling products so that customers don’t leave empty-handed, but they also want to avoid being left with large amounts of unsold stock. Whilst the buzz generated by limited stock is an important part of Black Friday, firms should (and do) use data-driven modeling techniques to prepare themselves for the influx of shoppers.

Figure 2: It is important for stores to have sufficient stock to match customer demand.
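To make the staffing and stock reasoning above more concrete, here is a minimal Python sketch. It is an illustration only, not a description of how any particular retailer or the NRF works. The function names, the average-growth forecast, the shoppers-per-worker ratio, the safety margin, and all figures are hypothetical assumptions chosen for the example.

```python
import math


def forecast_shoppers(history):
    """Project next Black Friday's shopper count from past years.

    Assumption: a naive forecast that applies the average
    year-over-year growth ratio to the most recent year.
    """
    if len(history) < 2:
        raise ValueError("need at least two years of history")
    growth = [later / earlier for earlier, later in zip(history, history[1:])]
    avg_growth = sum(growth) / len(growth)
    return history[-1] * avg_growth


def workers_needed(shoppers, shoppers_per_worker):
    """Round up so that no group of shoppers is left unstaffed."""
    return math.ceil(shoppers / shoppers_per_worker)


def stock_target(expected_units, safety_margin):
    """Expected sales plus a buffer, to limit the risk of empty shelves.

    A larger margin lowers stock-out risk but raises leftover stock.
    """
    return math.ceil(expected_units * (1 + safety_margin))


if __name__ == "__main__":
    # Hypothetical store-level figures, for illustration only.
    past_footfall = [6400, 8000, 10000]
    shoppers = forecast_shoppers(past_footfall)
    print("Forecast shoppers:", shoppers)
    print("Workers needed:", workers_needed(shoppers, shoppers_per_worker=50))
    print("Units to stock:", stock_target(expected_units=400, safety_margin=0.25))
```

In practice, a store would likely replace the naive growth forecast with a model that accounts for promotions, weather, and online traffic, but the trade-off is the same: staff and stock enough to meet predicted demand without overshooting.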

3. Setting the right price

4. Reaching the right customers

5. Improving the shopping experience
