We are pleased to announce the latest version of FARO, our open-source tool for detecting sensitive information, which we introduce briefly in this post.

Nowadays, any organisation generates and manages a considerable amount of documentation related to its daily activity. A significant part of these documents is often strategic or confidential: contracts, agreements, invoices, profit and loss accounts, budgets, employees’ personal data, and so on. If poorly protected, any of them can become a major reputational and security problem for the organisation.

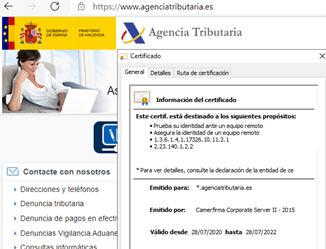

At TEGRA, our cybersecurity R&D centre in Galicia, we have developed FARO, a tool that detects and classifies sensitive information in many types of documents: office documents, plain text, compressed files, HTML, emails, and more. Thanks to its OCR capability, it can also detect information in images and scanned documents. The goal is to give organisations greater control over their sensitive data.

This new version adds several features and improvements, the most notable being a plugin system with multilingual support. It is now possible to write simple plugins that let FARO detect new types of entities containing sensitive information.

“FARO is open to the community, and we invite anyone interested in its development or evolution to visit the repository and leave feedback or any other input that may contribute to its future.”

How to Use FARO

To use FARO, clone it from GitHub, install its dependencies and launch it with the appropriate options.

FARO will generate an output folder in the root directory of the project with two output files.

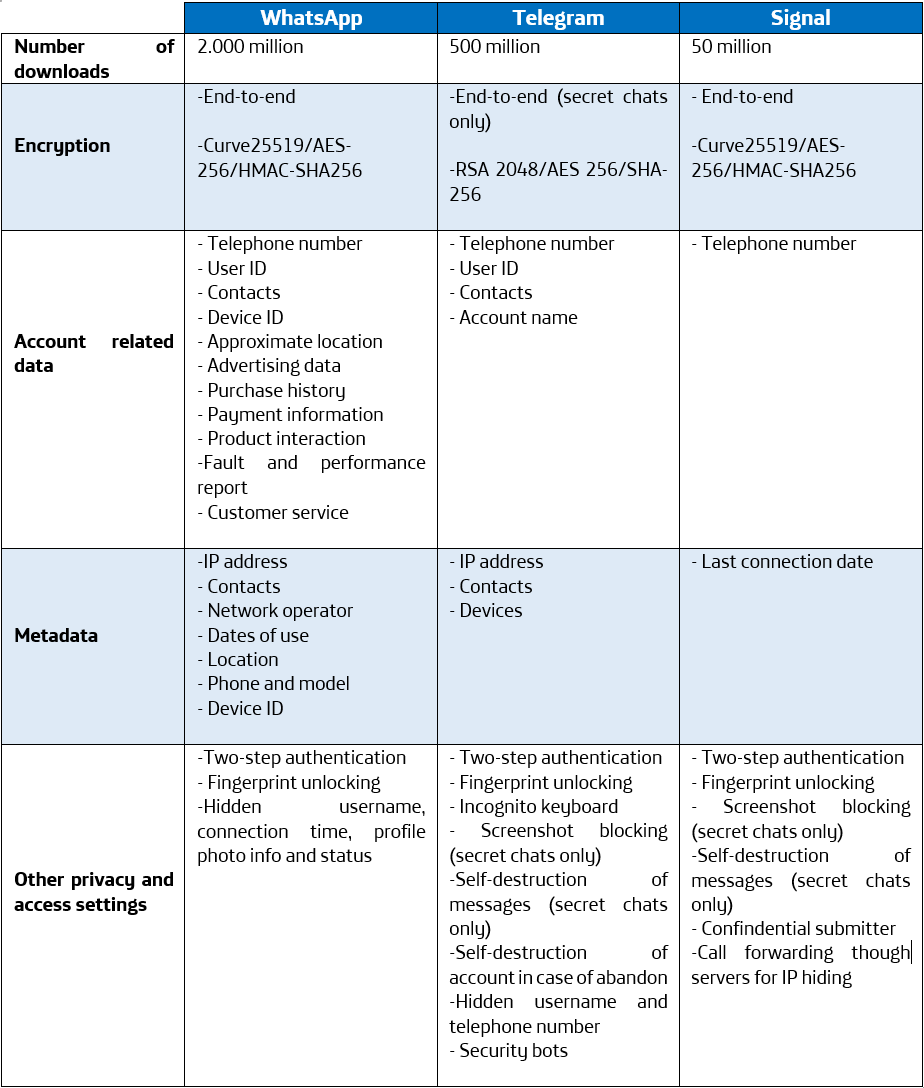

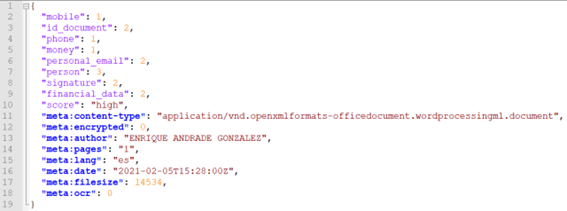

- output/scan.$CURRENT_TIME.csv: execution summary file with the final score of each document and the number of occurrences of each entity type.

- output/scan.$CURRENT_TIME.entity: detail file in JSON format listing the entities detected in each source document.
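The summary file lends itself to quick triage. The following Python sketch sorts the rows of a `scan.$CURRENT_TIME.csv` file from most to least sensitive. The column names `filepath` and `score`, and the `low`/`medium`/`high` ordering, are assumptions made for this example rather than a documented FARO format; check the header of your own output before reusing it.

```python
import csv
import io

# Hypothetical ordering used when the score column holds sensitivity levels
# instead of numbers; adapt it to the values your scan actually produces.
LEVEL_ORDER = {"low": 1, "medium": 2, "high": 3}


def score_key(raw_score):
    """Turn a score cell (level name or numeric text) into a sortable number."""
    value = raw_score.strip().lower()
    if value in LEVEL_ORDER:
        return LEVEL_ORDER[value]
    return float(value)


def rank_documents(csv_text, path_column="filepath", score_column="score"):
    """Return (path, score) pairs, most sensitive first.

    The default column names are assumptions for this sketch, not a
    documented FARO header.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [
        (row[path_column], row[score_column].strip())
        for row in reader
        if (row.get(score_column) or "").strip()  # skip rows without a score
    ]
    return sorted(rows, key=lambda item: score_key(item[1]), reverse=True)


if __name__ == "__main__":
    sample = "filepath,score\nreport.pdf,low\npayroll.xlsx,high\nnotes.txt,medium\n"
    for path, score in rank_documents(sample):
        print(score, path)
```

In practice, `csv_text` would be the contents of the CSV file read from the output folder.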

Multilingual Plugin System

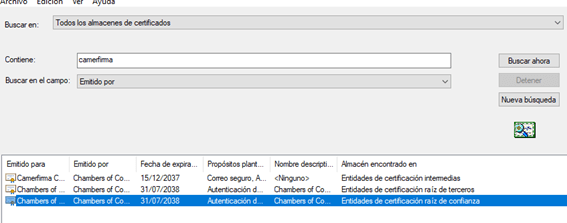

Thanks to FARO’s new modular architecture and plugin system, new types of sensitive information can be detected without in-depth knowledge of the tool’s inner workings. A plugin author only needs to define the detection patterns and, optionally, add configuration for validation and context.

Each plugin can define two types of patterns. The first is used when the entity to be located is very specific, so it can be detected with high accuracy and a low false positive rate.

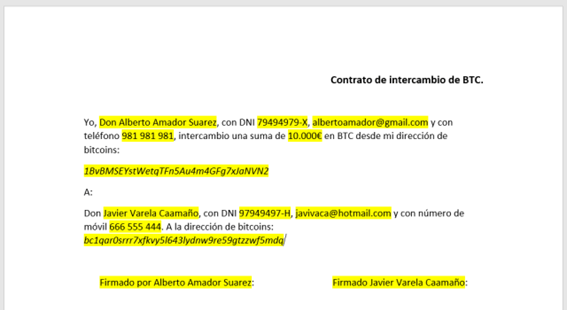

`"BITCOIN_P2PKH_P2SH_ADDRESS": r"[13][a-km-zA-HJ-NP-Z0-9]{26,33}"`

Pattern 1: Example of a detection pattern for BTC addresses
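To see how a high-precision pattern behaves on its own, the following Python sketch runs the BTC pattern over a piece of text with `re.finditer`. The `PATTERNS` dictionary and the `scan` helper are hypothetical names, not FARO's plugin API, and the `\b` word boundaries are an addition of this sketch so that the pattern does not fire inside longer alphanumeric strings.

```python
import re

# Illustrative plugin-style pattern table; the regex is the BTC pattern above.
PATTERNS = {
    "BITCOIN_P2PKH_P2SH_ADDRESS": r"[13][a-km-zA-HJ-NP-Z0-9]{26,33}",
}


def scan(text, patterns=PATTERNS):
    """Yield (entity_type, match_text, start, end) for every pattern hit.

    Each pattern is wrapped in word boundaries (an assumption of this sketch)
    so that it does not match fragments of longer alphanumeric tokens.
    """
    for entity_type, pattern in patterns.items():
        for match in re.finditer(rf"\b{pattern}\b", text):
            yield entity_type, match.group(), match.start(), match.end()


if __name__ == "__main__":
    text = "Send the payment to 1A1zP1eP5QGefi2DMPTfTL5SLmv7DivfNa before Friday."
    for hit in scan(text):
        print(hit)
```

The regex only checks the shape of an address; it does not verify the Base58Check checksum, which is the kind of check that plugin validation can add.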

The second type of pattern is more general and may produce more false positives. To reduce them, each FARO plugin can define a context: dictionaries of words that are searched for before or after each candidate entity in order to confirm the detection.

`"MOVIL_ESPAÑA": r"[67](\s+|-|\.)?([0-9](\s+|-|\.)?){8}"`

Pattern 2: Example of a detection pattern for mobile phone numbers
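The context mechanism can be sketched in a few lines of Python. This version finds candidates with the mobile pattern (with the optional separator written as `(\s+|-|\.)?` in both positions) and keeps only those with a context word within a fixed window of characters. The word list, the 30-character window and the function names are assumptions for illustration; FARO's actual context configuration is documented in the project wiki.

```python
import re

# Mobile pattern with the optional separator group (\s+|-|\.)? in both places.
MOBILE_PATTERN = re.compile(r"[67](\s+|-|\.)?([0-9](\s+|-|\.)?){8}")

# Hypothetical context dictionary; a real plugin would ship its own word list.
CONTEXT_WORDS = {"móvil", "movil", "teléfono", "telefono", "tlf", "tel"}

WINDOW = 30  # characters inspected on each side of a candidate (assumed value)


def has_context(text, start, end, words=CONTEXT_WORDS, window=WINDOW):
    """Return True if a context word appears just before or after the match."""
    before = text[max(0, start - window):start].lower()
    after = text[end:end + window].lower()
    tokens = re.findall(r"\w+", before + " " + after)
    return any(token in words for token in tokens)


def find_mobiles(text):
    """Return candidate numbers that are confirmed by nearby context words."""
    confirmed = []
    for match in MOBILE_PATTERN.finditer(text):
        if has_context(text, match.start(), match.end()):
            # The pattern may swallow a trailing separator; drop it.
            confirmed.append(match.group().rstrip(" \t\r\n.-"))
    return confirmed


if __name__ == "__main__":
    print(find_mobiles("Mi móvil es 612 345 678, llámame."))
    print(find_mobiles("Factura 612345678 emitida ayer."))
```

The second call finds the same nine-digit shape but returns nothing, because no context word surrounds it; that is exactly the false positive the context is meant to filter out.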

FARO plugins can also include automatic validation when the entity carries a built-in check, such as the control digits of a bank account, which considerably increases the certainty that a match is the information we want to detect.
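The post does not detail how FARO implements this validation, so the following Python sketch uses a well-documented instance of bank-account control digits: the MOD 97-10 check of an IBAN (ISO 13616 / ISO 7064). A plugin could run a function like this on each regex candidate and discard those that fail; the function name is hypothetical.

```python
import re


def iban_is_valid(iban):
    """Check an IBAN with the ISO 13616 / ISO 7064 MOD 97-10 rule.

    Steps: drop spaces, move the first four characters to the end, replace
    each letter with two digits (A=10 ... Z=35) and accept the IBAN only if
    the resulting integer leaves a remainder of 1 when divided by 97.
    """
    compact = re.sub(r"\s+", "", iban).upper()
    # Country code, two check digits, then 11 to 30 alphanumeric characters.
    if not re.fullmatch(r"[A-Z]{2}[0-9]{2}[A-Z0-9]{11,30}", compact):
        return False
    rearranged = compact[4:] + compact[:4]
    # int(ch, 36) maps '0'-'9' to 0-9 and 'A'-'Z' to 10-35.
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


if __name__ == "__main__":
    print(iban_is_valid("GB82 WEST 1234 5698 7654 32"))
    print(iban_is_valid("GB82 WEST 1234 5698 7654 33"))
```

Because 97 is prime, any single-digit change (as in the second call) breaks the check, which is what makes this kind of validation so effective at rejecting look-alike numbers.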

Finally, each plugin can support multiple languages: both the context and the patterns can be localised to match the original language of the document.
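A multilingual plugin can be pictured as one configuration per language. In the following Python sketch, which uses a hypothetical layout rather than FARO's real plugin format, the same mobile pattern is paired with Spanish or English context words, and the configuration is chosen from the document language, which is assumed to be already known. Patterns could be localised in the same way by giving each language its own `patterns` entry.

```python
import re

MOBILE_REGEX = r"[67](\s+|-|\.)?([0-9](\s+|-|\.)?){8}"

# Hypothetical multilingual plugin definition: each language code maps to its
# own patterns and context words. Field names are illustrative only.
MOBILE_PLUGIN = {
    "es": {
        "patterns": {"MOVIL_ESPAÑA": MOBILE_REGEX},
        "context": {"móvil", "movil", "teléfono", "tlf"},
    },
    "en": {
        "patterns": {"MOVIL_ESPAÑA": MOBILE_REGEX},
        "context": {"mobile", "phone", "cell"},
    },
}


def config_for(plugin, language, fallback="es"):
    """Return the configuration for the document language, or the fallback."""
    return plugin.get(language, plugin[fallback])


def detect(text, language, plugin=MOBILE_PLUGIN, window=30):
    """Return (entity, match) pairs confirmed by the language's context words."""
    config = config_for(plugin, language)
    found = []
    for entity, pattern in config["patterns"].items():
        for match in re.finditer(pattern, text):
            nearby = text[max(0, match.start() - window):match.end() + window].lower()
            if set(re.findall(r"\w+", nearby)) & config["context"]:
                found.append((entity, match.group().rstrip(" \t\r\n.-")))
    return found
```

With this layout, `detect("Call my mobile on 612 345 678 today.", "en")` confirms the number, while the same text processed with the Spanish configuration returns nothing, because none of the Spanish context words appear in it.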

The project wiki contains all the technical information needed to develop plugins. We encourage you to take part, either by contributing new plugins to improve the tool or by testing FARO in your organisation and sending us feedback via GitHub.

The TEGRA cybersecurity centre is part of IRMAS (Information Rights Management Advanced Systems), a joint research unit in cybersecurity co-financed by the European Union within the framework of the Galicia ERDF Operational Programme 2014-2020, to promote technological development, innovation and high-quality research.