In the previous article we covered the basic concepts of blockchain. Now we will focus on the technologies currently available for implementing a blockchain solution.

The concepts of blockchain and smart contracts date back to the 1990s, but it is only in the last nine years that different technological solutions have appeared. There are now many implementations, of which we can highlight three:

First, we have Corda, from R3, a blockchain created in 2014 and focused on the financial sector. It has no native currency, but it does have the concept of a notary.

We also have Hyperledger, a blockchain born in 2015. Its use is more general: it has a modular design and no native currency, and the chain or ledger that we create is tied to the design of our smart contract.

And finally, there's Ethereum, a blockchain also established in 2015. It has a much more general design and its own currency, ether, and the network is geared towards DApps (decentralised applications) that are executed within Ethereum.

Because of its technological interest, we will focus on Ethereum. It is a flexible network on which we can build blockchain applications and, moreover, it has public test environments where we can build them at practically no cost.

To begin with, Ethereum is a network with its own currency, ether, which is the key element of the whole system. To execute smart contracts we need gas, which we pay for with the ether held in our Ethereum accounts. That gas pays the miners who mine for us, that is, who execute our smart contracts.
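As a rough illustration of how gas relates to ether, the fee for a transaction can be thought of as the gas consumed multiplied by the price offered per unit of gas. The sketch below assumes a plain ether transfer costing 21,000 gas and a hypothetical gas price of 20 gwei. The function name and the chosen price are illustrative only and do not come from any library.

```python
from decimal import Decimal

WEI_PER_GWEI = 10**9    # 1 gwei = 1,000,000,000 wei
WEI_PER_ETHER = 10**18  # 1 ether = 10^18 wei


def transaction_fee_wei(gas_used: int, gas_price_gwei: int) -> int:
    """Fee in wei = gas units consumed x price per unit of gas."""
    return gas_used * gas_price_gwei * WEI_PER_GWEI


# Assumed values: 21,000 gas for a simple transfer, 20 gwei per unit of gas.
fee_wei = transaction_fee_wei(gas_used=21_000, gas_price_gwei=20)
fee_ether = Decimal(fee_wei) / Decimal(WEI_PER_ETHER)
print(f"{fee_wei} wei = {fee_ether} ether")
```

Working in integer wei and converting to ether only for display avoids floating-point rounding errors in the fee.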

Besides ether, the second basic component of the network is the Ethereum Virtual Machine (EVM), the software that runs on every node in the network and allows us to execute a smart contract safely. The EVM can be implemented in different languages; it simply needs to comply with the specification in the Ethereum Yellow Paper.

At present there are two major implementations: Geth, which is written in Go, and Parity, which is written in Rust. The biggest advantages of the EVM are:

1. It's deterministic. It always gives the same result for the same data and the same operations, just as 2+2 always equals 4.

2. It's terminable. Our operations can't run indefinitely, because every instruction executed in the EVM has a gas cost and we have to set a limit on what each smart contract can spend.

3. It's isolated. No one can manipulate the execution of the smart contract.
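The first two properties can be sketched with a toy stack machine that charges gas for every instruction. This is not the real EVM: the three opcodes, their gas costs and the names `run` and `OutOfGas` are all assumptions made for the illustration.

```python
class OutOfGas(Exception):
    """Raised when execution needs more gas than the caller provided."""


# Hypothetical per-instruction costs; real EVM opcodes have their own prices.
GAS_COST = {"PUSH": 3, "ADD": 3, "JUMP": 8}


def run(program, gas_limit):
    """Execute a list of (opcode, argument) pairs on a tiny stack machine.

    Returns (stack, gas_used). Every step is charged before it runs, so even
    an endless loop stops once the gas limit is exceeded.
    """
    stack, pc, gas_used = [], 0, 0
    while pc < len(program):
        op, arg = program[pc]
        gas_used += GAS_COST[op]
        if gas_used > gas_limit:
            raise OutOfGas(f"needed more than {gas_limit} gas")
        if op == "PUSH":
            stack.append(arg)
            pc += 1
        elif op == "ADD":
            stack.append(stack.pop() + stack.pop())
            pc += 1
        elif op == "JUMP":
            pc = arg
    return stack, gas_used


# Deterministic: the same program and inputs always give the same result.
add_two_and_two = [("PUSH", 2), ("PUSH", 2), ("ADD", None)]
print(run(add_two_and_two, gas_limit=100))

# Terminable: an infinite loop is cut off when the gas runs out.
endless_loop = [("JUMP", 0)]
try:
    run(endless_loop, gas_limit=100)
except OutOfGas as err:
    print("stopped:", err)
```

Charging for each instruction before executing it is what guarantees termination in this sketch: no program can take more steps than its gas limit allows.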

The nodes and ether form the base of the system. On top of them we can deploy our smart contracts, which are programs that can be written in different languages, although they all run on the same network. Today, the main smart contract programming language in Ethereum is Solidity, which is a mix between C and JavaScript.

We now have all the elements in place to build our applications in Ethereum: a network with nodes to run the contracts, a currency to pay for the transactions, and a language in which to write the smart contracts. Now the question arises: what type of blockchain do I want to use?

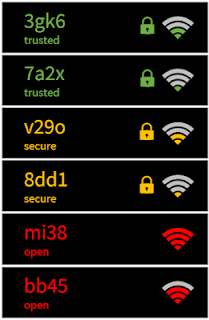

When we talk about blockchain networks, we can distinguish three types, depending on how private each one is:

– Public networks. These are networks that anyone can join. In the case of Ethereum, the public network is called Mainnet, and there are also public test networks such as Ropsten. Their main characteristic is that the information on the network is public.

These networks are suited to public agreements, certificates or any other functionality that needs to be in the public domain. Economic costs are also higher on this type of network.

– Semi-public networks. This type of network is oriented towards sharing information between different organisations. The smart contract is responsible for managing the relationships between the members (automatically and without supervision), which improves the productivity of their processes.

One of the advantages of this type of network is that it doesn't need as many resources as a public one. There is already an Ethereum implementation designed for semi-public networks: Quorum, from JP Morgan.

In Spain there is Alastria, a semi-public network implemented with Quorum.

– Private networks. The last type of network is used within a single business that doesn't need to communicate with the outside, where we want to simplify processes and have all the information audited.

As we can see, there are distinct types of networks for different uses, and we should select the one that fits our use case.
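The choice between the three types can be summarised as a small decision helper. This is only a sketch of the criteria described above: the function name and its two yes/no questions are hypothetical simplifications, and a real decision would also weigh cost and resources.

```python
def choose_network(data_must_be_public: bool,
                   shared_between_organisations: bool) -> str:
    """Map two yes/no questions onto the three network types."""
    if data_must_be_public:
        # Public agreements or certificates: anyone can join and read.
        return "public"
    if shared_between_organisations:
        # Several organisations share information and processes.
        return "semi-public"
    # A single business with no need to communicate outside of itself.
    return "private"


print(choose_network(data_must_be_public=False,
                     shared_between_organisations=True))
```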

All of this is happening today, but we also need to be ready for future changes to these technologies, for example changes to Ethereum. Within Ethereum there are a variety of projects designed to improve the network. One is Plasma, which aims to increase the number of transactions per second that Ethereum can handle. Another is Casper, which was born from the idea of reducing the network's energy consumption with a new protocol that combines the Proof-of-Stake (PoS) and Proof-of-Work (PoW) algorithms.

As we have seen in this article, blockchain is still a relatively young technology that continues to improve and change over time. But it is here to stay and, just like Big Data technologies, it will change the solutions we can offer in many cases.
