Parking your car has become a difficult task in many cities, and looking for a space is a constant source of stress for citizens. Luckily, the Internet of Things (IoT) was born to make life easier for people. IoT solutions are already used every day in cities to help people park more efficiently.

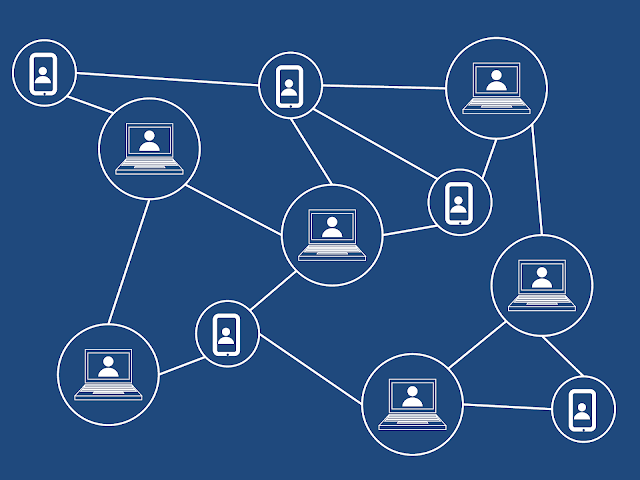

On the one hand, IoT parking solutions work thanks to sensor networks that collect mobility-related information about the environment, such as free parking spaces, traffic conditions and the state of the roads.

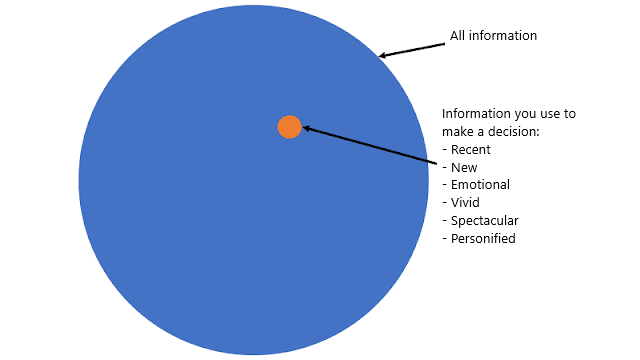

On the other hand, intelligent parking uses Big Data technologies to optimally analyse the large amount of data collected, with the aim of offering personalized solutions for each user in real time.

Intelligent parking has become a key element in improving mobility on the streets. Spanish cities such as Madrid, Barcelona, Santander and Malaga have sensor systems that help their inhabitants park much more easily.

Rising pollution is leading more and more cities to consider limiting the circulation of vehicles. One example is the Madrid Central project, a traffic restriction in the central area of the Spanish capital under which polluting cars may enter the downtown area only to park in a parking lot. In these circumstances, intelligent parking search becomes a very useful tool for drivers. In fact, the City of Madrid itself offers real-time data on available free spaces through an app.

IoT parking solutions provide the following benefits:

–Reducing the time citizens spend in the car. This improves productivity in our lives, as it decreases delays in arriving at work or class. The lower volume of vehicles circulating also helps reduce the number of road accidents.

–Fighting against pollution. Vehicles circulating slowly in search of parking cause traffic congestion, which results in high levels of gas emissions that are harmful to public health. More fluid traffic would lessen these effects and avoid the excess fuel consumption involved in circling the same area in search of parking.

–Improving society's welfare. Fewer traffic jams mean less of the stress of sitting in a running car without getting any closer to the destination. Likewise, they mean more time available to enjoy leisure and rest.

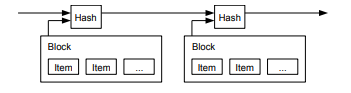

Urbiótica Smart Parking sensor technology is an example of autonomous, wireless parking detection based on the new NB-IoT communication standard, which allows the sensor to communicate directly with the cloud without the need to install dedicated gateways. It uses a complete network of sensors that are installed underground in just 10 minutes, without cables and with minimal work, and that are powered by batteries lasting up to 7 years. The sensors calibrate automatically and can be located on the curb or sidewalk, so parked vehicles do not need to be removed, which greatly facilitates the deployment of the solution.

The system works using magnetic detection: its sensors read the variations that occur in the magnetic field when the metal mass of a car parks over them. The entry and exit of vehicles in the parking spaces and the duration of their stay are detected in real time. This data is sent to an application so that drivers always have up-to-date information on their smartphones about free parking spaces and can find one quickly and efficiently. This reduces both the time lost searching for parking and the pollution generated.
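
To make the idea of magnetic detection more concrete, here is a minimal Python sketch of how entry, exit and parking duration could be derived from a stream of magnetic field readings. It is an illustration only, not Urbiótica's actual algorithm: the `SpaceMonitor` class, the `free_spaces` helper, the microtesla units and the threshold values are all assumptions made for this example. It uses two thresholds (hysteresis) so that small fluctuations in the field do not flip a space between occupied and free.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class SpaceMonitor:
    """Hypothetical occupancy detector for a single parking space."""
    baseline_ut: float                # field with no car present, in microtesla (assumed known)
    enter_threshold_ut: float = 8.0   # assumed deviation that signals a car has arrived
    exit_threshold_ut: float = 4.0    # assumed lower deviation that signals it has left
    occupied: bool = False
    occupied_since: Optional[float] = None
    # Each event is (kind, timestamp in seconds, parking duration or None).
    events: List[Tuple[str, float, Optional[float]]] = field(default_factory=list)

    def update(self, timestamp_s: float, reading_ut: float) -> None:
        """Process one sensor reading and record an entry or exit if the state changes."""
        deviation = abs(reading_ut - self.baseline_ut)
        if not self.occupied and deviation >= self.enter_threshold_ut:
            self.occupied = True
            self.occupied_since = timestamp_s
            self.events.append(("entry", timestamp_s, None))
        elif self.occupied and deviation <= self.exit_threshold_ut:
            duration = timestamp_s - self.occupied_since
            self.occupied = False
            self.occupied_since = None
            self.events.append(("exit", timestamp_s, duration))


def free_spaces(monitors: Dict[str, SpaceMonitor]) -> List[str]:
    """Return the ids of the spaces that are currently free."""
    return [space_id for space_id, m in monitors.items() if not m.occupied]


# Example with made-up readings: a car arrives at t=60 s and leaves at t=1860 s.
monitor = SpaceMonitor(baseline_ut=45.0)
for t, reading in [(0, 45.2), (60, 58.0), (120, 51.0), (1860, 45.5)]:
    monitor.update(t, reading)
print(monitor.events)
```

The reading at t=120 s sits between the two thresholds, so the space stays marked as occupied. In a real deployment the list returned by something like `free_spaces` is the kind of information the driver's app would display.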

A solution that takes connected parking one step further is Automated Valet Parking, a system designed by Bosch in which the car itself is connected to the smartphone and to the sensors in the parking spaces. With this solution, it is the car, not the driver, that receives the information from the sensors and decides where to park. Upon entering the parking lot, the driver gets out of the car and presses a button in an app that orders the vehicle to park. The vehicle then receives the information about the parking spaces and automatically moves to one of the available ones.

Connected solutions applied to parking already play a leading role in many people's daily lives, favouring more sustainable circulation in Smart Cities. The parking of the future is here thanks to IoT.