![]()

Content Written by David Nowicki CMO and Head of Business Development at Datami and published through LinkedIn.

50 Years ago this month, retail banking changed forever with the introduction of the first Automated Teller Machines. Designed to serve customers outside of traditional banking hours, and outside of the branch, the ATM enabled a far higher number of transactions, at a fraction of the cost of in-branch services.

The sophisticated app-based smartphone banking experience many of us enjoy today as part of the digital transformation of retail banking is both a direct descendant of the ATM and destined to replace it.

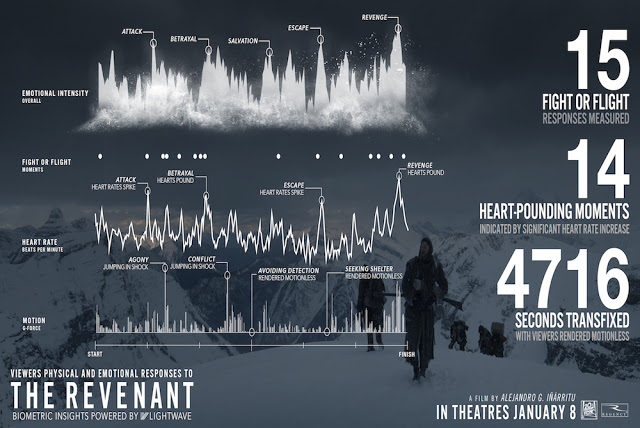

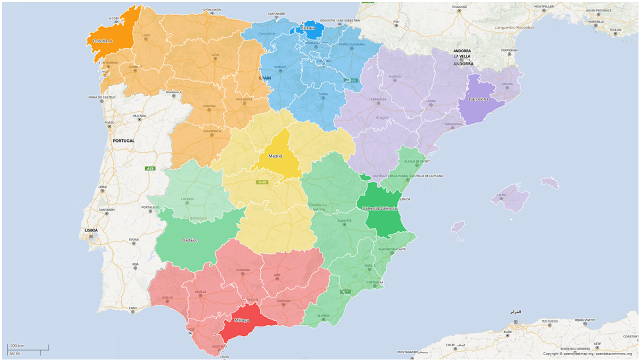

|

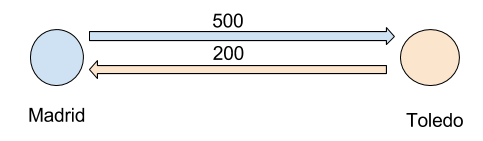

| Figure 1: The cost of mobile banking transactions can be as little as five percent of comparable in-person transactions. |

But to drive change in behavior and generate economic efficiencies, technology must be accessible. Not until banks had built ATM networks that reached significant numbers of their customers did the machines begin to deliver returns.

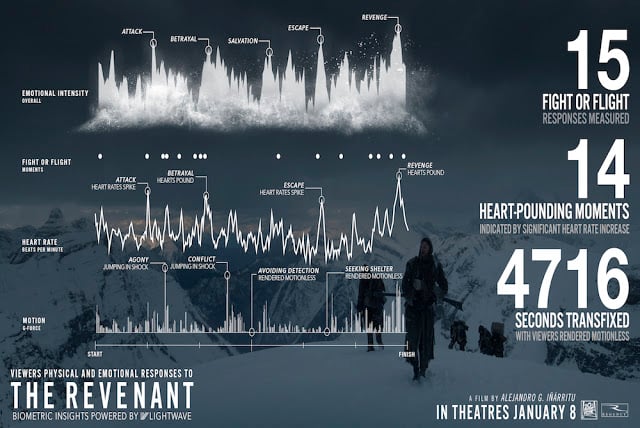

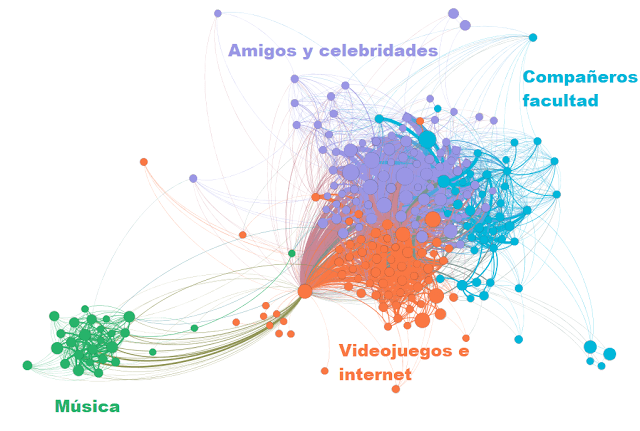

In a little over a year following launch, the number of mobile banking customers increased from three million to seven million, with mobile banking growing to represent 29% of all transactions, according to Frost and Sullivan. Bradesco reported ROI of 3x within the same period, also revealing that—by enabling more customers to check their balance using their mobile phone—average ATM visit length has been reduced by 25 seconds.

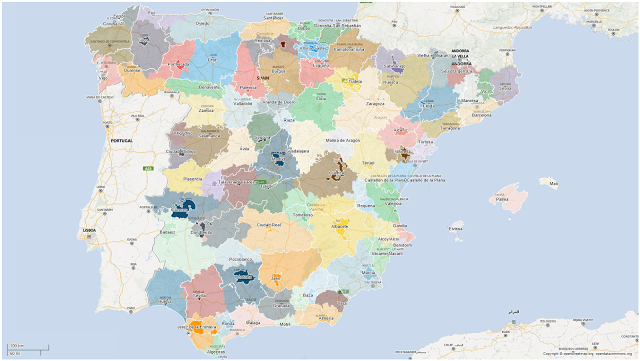

|

| Figure 2: Mobile banking represents 29% of all transactions. |

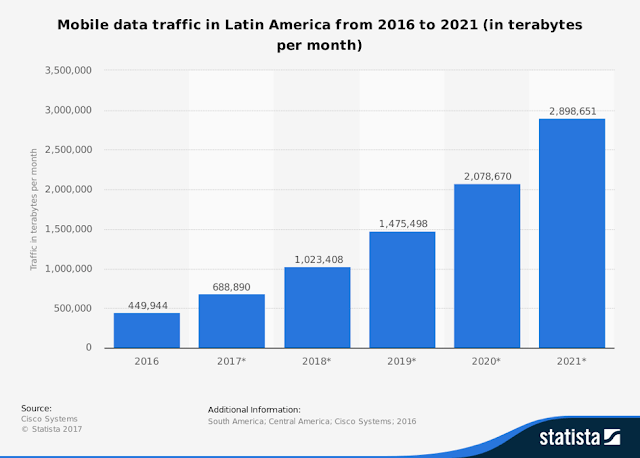

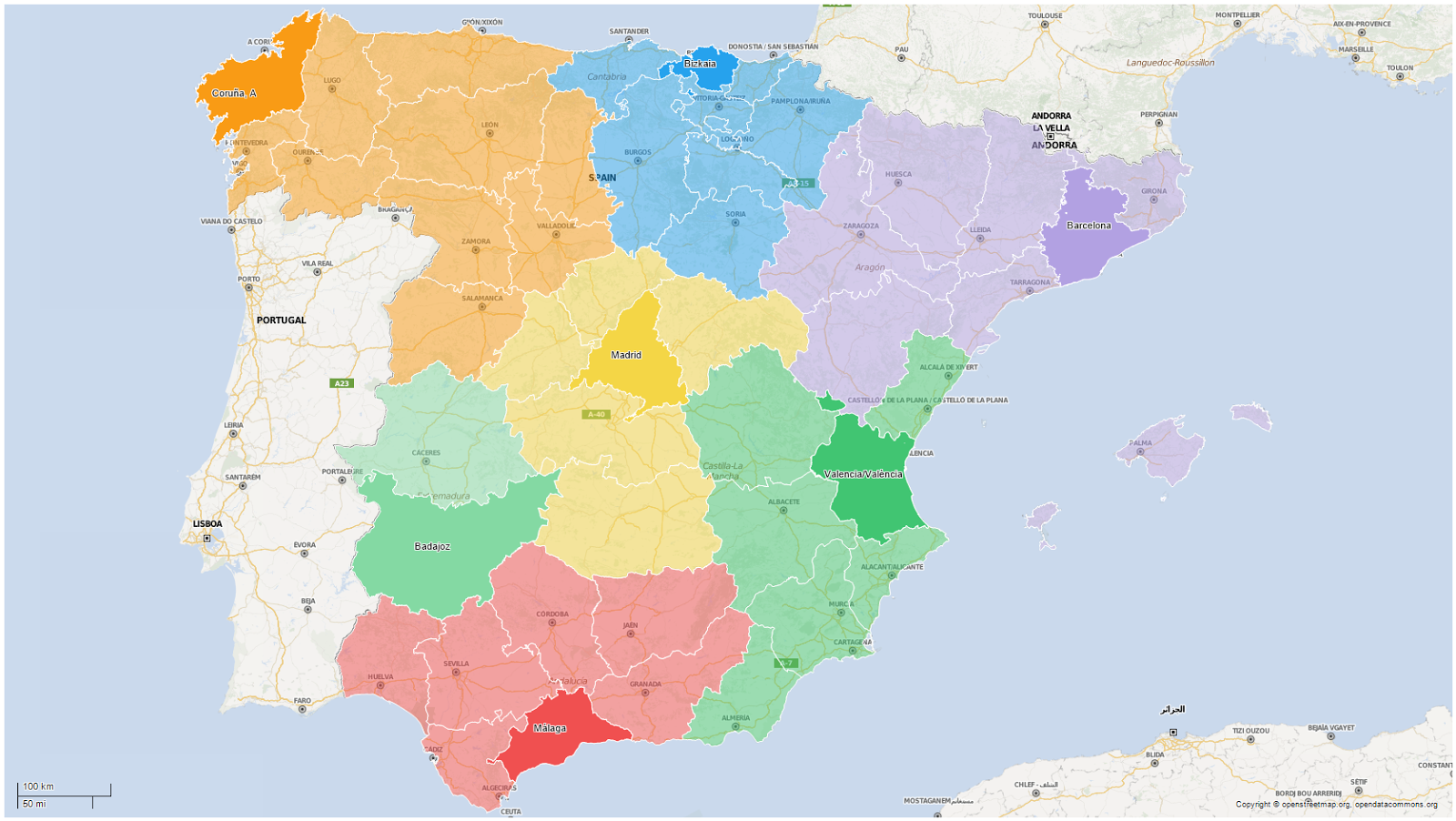

The past two years have seen some of the largest and most influential banks in the world embrace sponsored data as part of their digital transformation and financial inclusion strategies, with a particular emphasis on the Latin American region. For example, Santander in Brazil, BBVA Bancomer in Mexico, and Davivienda in Colombia have all made significant early moves to enable customers to use their mobile apps without using their mobile data, pushing financial services to the customer, wherever they might be.