The Intelligent MSSP

ElevenPaths 15 June, 2017

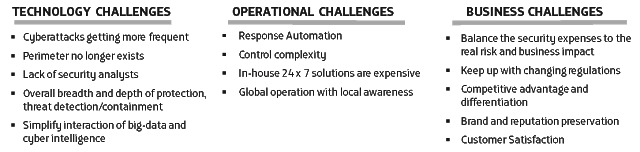

Compelling factors pushing the MSS evolution

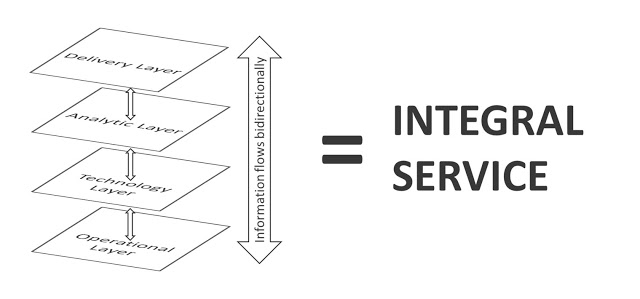

The Four-Layer-Framework for MSS

- Operational layer: the processes, people and tools in charge of operation and automated response. We refer to what some analysts have come to call the Intelligence-driven Security Operations Centre (ISOC). The ISOC includes the capabilities of previous SOC generations (device management, security monitoring) plus its own distinctive ones (data-driven security, adaptive response, forensics, post-analysis for threat intelligence and dynamic risk management). This operational layer, and the SOCs specifically, should fulfil the current recommendations from relevant advisory firms. For instance: operate as a program rather than as a single project; collaborate fully in all phases; use information tools adequate for the job, providing full visibility and control; implement standardized and applicable processes; and finally, perhaps most important, rely on an experienced team with adequate skills and low staff turnover.

- Technology layer: this level comprises the technology components in charge of specific security prevention and protection, from on-premise firewalls to security services such as Clean Pipes over Next Generation Firewalls or CASBs. The originality of the proposal is to represent them as isolated elements that require the backbone capabilities in order to be part of an MSS offering. The main backbone capabilities included in this layer are the interaction modules: a collector, which transmits events to the rest of the levels, and an actuator, which is responsible for triggering the response in the form of policy management.

- Analytic layer: this layer is the brain of the whole system, the element in charge of the massive event processing that enables data-driven security. We refer to the big data analytics platform that uncovers hidden attack patterns and carries out advanced threat management and response. Additionally, the analytic layer includes some backbone capabilities, such as cross-calculation of KPIs for general security status, a real-time risk management meter, event collection and storage, and a threat intelligence prosumer.

- Delivery layer: the top level concerns how clients consume the managed security, with direct implications for how customers perceive the service. This layer comprises unified visibility and control, and real-time risk management and compliance. We group everything under the label of Business Security. Security is no longer only a technology issue or an exclusive area for IT departments; it is becoming a relevant factor in the business performance of organizations. There is broad consensus about the need for greater involvement of business areas and boards in security matters, and for them a technology language is not valid; a business language is needed. This layer makes security information understandable and actionable for business and C-level roles. An important element, then, is the integral security portal and its dashboards, with the precise granularity to satisfy the different organizational roles: security at a glance, real-time risk level and SLA performance for boards, or specific day-to-day incident and threat intelligence information for expert security analysts.
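The collector and actuator roles of the technology layer, and the way they feed the analytic layer, can be pictured with a minimal Python sketch. Every name here (`Event`, `AnalyticLayer`, `Actuator`, `collect`, the 0 to 10 severity scale and the threshold of 7) is hypothetical and chosen only for illustration; it does not describe any actual product.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    source: str    # hypothetical device or service name
    kind: str      # hypothetical event type
    severity: int  # assumed scale from 0 (benign) to 10 (critical)


@dataclass
class Actuator:
    """Technology-layer module that pushes policy changes back to devices."""
    applied: list = field(default_factory=list)

    def apply_policy(self, target, action):
        self.applied.append((target, action))


class AnalyticLayer:
    """Stores events and decides whether a response is needed."""

    def __init__(self, threshold=7):
        self.threshold = threshold
        self.events = []

    def ingest(self, event):
        self.events.append(event)
        return "block" if event.severity >= self.threshold else None

    def risk_level(self):
        # Naive stand-in for a risk meter: mean severity of stored events.
        if not self.events:
            return 0.0
        return sum(e.severity for e in self.events) / len(self.events)


def collect(events, analytics, actuator):
    """Collector role: forward each event and trigger any requested response."""
    for event in events:
        action = analytics.ingest(event)
        if action is not None:
            actuator.apply_policy(event.source, action)
```

In this sketch the delivery layer would simply read `risk_level()` and the list of applied policies to build its dashboards.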

Conclusion

Case Study: LUCA Store and DK Management, driving footfall and increasing conversion

AI of Things 14 June, 2017

ElevenPaths and BitSight deliver enhanced visibility into supply chain risk with continuous monitoring

ElevenPaths 13 June, 2017

- Objective, outside-in ratings measuring the security performance of individual organizations within the supply chain.

- Comprehensive insight into the aggregate cybersecurity risk of the entire supply chain, with the ability to quickly generate context around emerging risks.

- Actionable information included in Security Ratings that can be used to communicate with third parties and mitigate identified risks.

» Download the press release “ElevenPaths and BitSight deliver enhanced visibility into supply chain risk with continuous monitoring.“

Wannacry chronicles: Messi, Korean, bitcoins and the ransomware's last hours

ElevenPaths 12 June, 2017

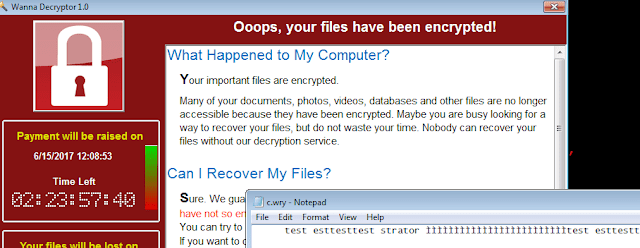

It is hard to say something new about Wannacry (the ransomware itself, not the attack). But it is worth investigating how the attacker worked during the last hours before the attack. It does not let us uncover the creator, but it certainly makes him a little “more human”, and it opens up questions about his mother tongue, his location and his last hours creating the attack.


Wannacry (the ransomware again, not the attack) is very easy malware to reverse. No obfuscation, no anti-debugging, not a single mechanism to make life harder for reversers. Aside from the code, some companies have even tried linguistic analysis (it has been widely used recently) to try to find out where the author comes from (although it turns out to be China “more often than not”). The result is usually “maybe a native English speaker, maybe not, maybe a native Chinese speaker trying to mislead the analysis…” who knows. But there are a few things we may know for sure: he likes football, is not greedy and usually types in Korean.

Metadata to the rescue

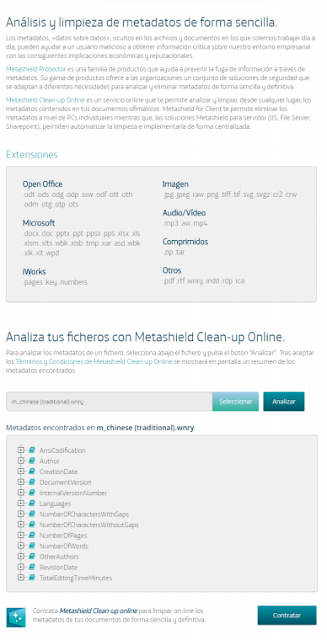

In recent years it has been proved how useful it is to extract and analyze metadata and hidden information from files. Data is the new oil.

This covers not only sensitive information about the user or organization, software, emails, paths… but also other data such as dates, titles, geopositioning, etc. We have heard about spying, political scandals caused by altered documents, insurance fraud… all revealed thanks to metadata.

https://metashieldclean-up.elevenpaths.com

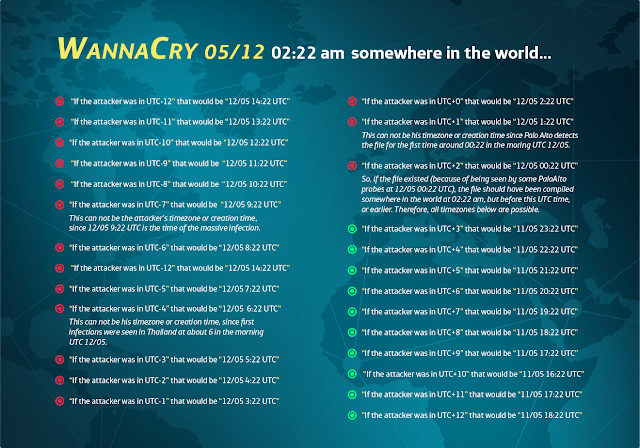

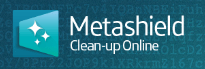

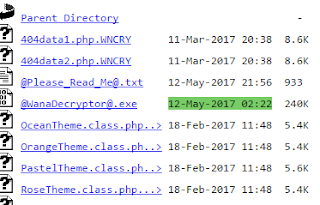

Wannacry 2.0 uses some text files containing the instructions to show in a textbox. At first sight, the WNRY extension does not mean anything, but they are actually RTF files. And that gives some room to work with metadata. From this analysis, using our new tool Metashield Clean-up Online, we could find “Messi” as a user in the system and, more interestingly, languages, dates and editing times. As for Messi, we found that another user had already mentioned it a few days earlier. But let’s go further.

This is version 1.0, less well known than its successor

There was a Wannacry 1.0 version, around March. It only contained a

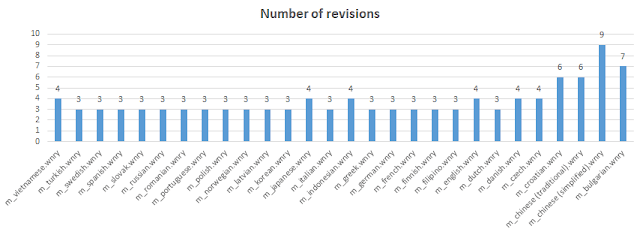

language file, m.wry, that was a RTF and was available only in English. This file was created 2017/03/04 at 13:33:00, and modified for 2.6 hours until 17:37:00. These are local timezones, wherever in the world the attacker was. The Internal Version Number was 32775. This is a number that Word (or any other RTF creator, although Word specifically uses 5 digits) adds when a RTF file is created, but is not officially properly documented by Microsoft and seems to be unique. But this Wannacry version was unnoticed in a world full of ransomwares. Then Wannacry 2.0 and a wormable attack came through. This was a slightly improved version, with lots of different languages, aside from English. What about their metadata?

It is at the very least interesting to know that the attacker created (on his hard disk at least, maybe copying them from somewhere else) all the documents between 13:52 and 13:57, just a day before the attack (11/05/2017). Again, this is local time in whatever part of the world he was. He opened the English file first, at 14:42.

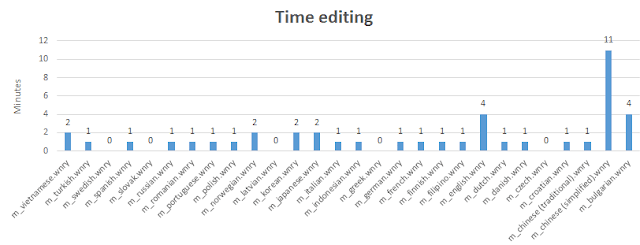

After that, most of the other files were created sequentially (or, again, dumped from some zip file or disk) until 15:00. Chinese (traditional) and Chinese (simplified) were the last ones, opened almost 4 hours later, at 18:55. He spent 11 minutes editing the simplified version, but just one minute on the traditional one. Prior to that, he spent 4 minutes editing the English file. Japanese and Vietnamese took him 4 minutes of editing as well. Vietnamese was the last file opened in the first round, maybe because the files were sorted alphabetically. He did not even edit the Swedish, Latvian, Czech or Greek language files.

The Author field of the RTF files was set to the word “Messi”, as in Wannacry version 1.0. That means this is the user name configured in his copy of Office Word, and probably his Windows user name too. He may like football. The Internal Version Number was again 32775, which may mean he reused the template.
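The fields discussed so far (author, creation time, editing time, internal version number) live in the RTF info group under the control words `\author`, `\creatim`, `\revtim`, `\edmins` and `\vern`. As a rough sketch of how they can be pulled out with regular expressions, the following Python uses a hypothetical sample rebuilt from the values quoted for m.wry; it is not the real file, and the 156 minutes assume that the 2.6 hours is the stored total editing time.

```python
import re
from datetime import datetime

# Hypothetical info group rebuilt from the values quoted for m.wry (version 1.0).
SAMPLE_RTF = (
    r"{\rtf1\ansi{\info{\author Messi}"
    r"{\creatim\yr2017\mo3\dy4\hr13\min33}"
    r"{\revtim\yr2017\mo3\dy4\hr17\min37}"
    r"{\edmins156}{\vern32775}}Sample text}"
)


def _timestamp(rtf, keyword):
    """Read a creatim/revtim style timestamp; RTF stores no timezone with it."""
    pattern = r"\\" + keyword + r"\\yr(\d+)\\mo(\d+)\\dy(\d+)\\hr(\d+)\\min(\d+)"
    match = re.search(pattern, rtf)
    return datetime(*map(int, match.groups())) if match else None


def _number(rtf, keyword):
    """Read the integer argument of a control word such as edmins or vern."""
    match = re.search(r"\\" + keyword + r"(\d+)", rtf)
    return int(match.group(1)) if match else None


def extract_info(rtf):
    author = re.search(r"\\author\s+([^{}\\]*)", rtf)
    return {
        "author": author.group(1).strip() if author else None,
        "created": _timestamp(rtf, "creatim"),
        "revised": _timestamp(rtf, "revtim"),
        "editing_minutes": _number(rtf, "edmins"),
        "internal_version": _number(rtf, "vern"),
    }


if __name__ == "__main__":
    for key, value in extract_info(SAMPLE_RTF).items():
        print(f"{key}: {value}")
```

A regex pass like this is only good enough for a quick look; a real tool would tokenize the RTF groups properly.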

Metashield Clean-up Online

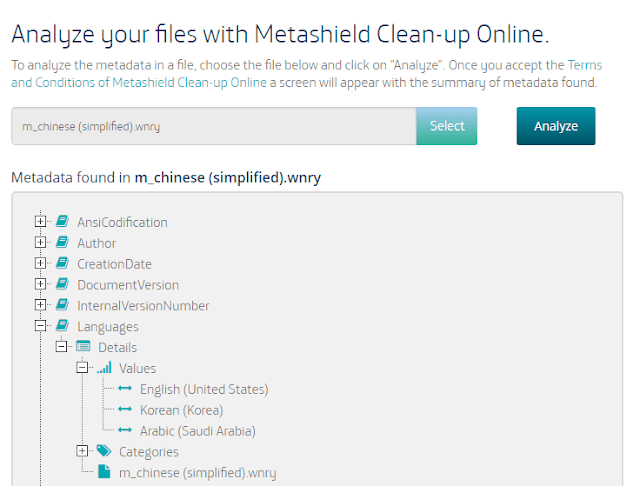

A very interesting piece of metadata is the language.

All the files, no matter the language they are written in, use English, Korean and Arabic as “Language” metadata. These are the languages that Word expects you to write in, and they are passed through Word into the RTF files. Why these three? Does he use all of them? No.

- Arabic is present through \adeflang1025, the default language ID for Middle Eastern (bidirectional) text. It does not mean this language is configured as the default proofing language in Word. It means a specific, and very common, EMEA version of Word was used. You can check whether your own RTF files have \adeflang1025 in them.

- Korean is present through \deflangfe1042 (deflangfe is the keyword for “Default language ID for Asian versions of Word“). That means he has Korean and English as the default “usual” languages in Word.

- English is present through \deflang1033, which defines the default language used in the document with a plain control word. If you set up Word with Korean as the default language, English will always be set as well. This seems to be a Word feature.

This is the raw data “inside” the RTF.

\rtf1\adeflang1025\ansi\ansicpg1252\uc2\adeff31507\deff0\stshfdbch31505\stshfloch31506\stshfhich31506\stshfbi0\deflang1033\deflangfe1042
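To see which control word carries which language, the header can be scanned for the three default-language keywords. This is a minimal Python sketch: it assumes the header is written with its backslashes in place (as a real RTF file would have them), and `LCID_NAMES` is a small hand-written lookup covering only the three Windows language IDs that appear here.

```python
import re

# Header of the language files, with the RTF backslashes in place (assumption).
HEADER = (
    r"\rtf1\adeflang1025\ansi\ansicpg1252\uc2\adeff31507\deff0"
    r"\stshfdbch31505\stshfloch31506\stshfhich31506\stshfbi0"
    r"\deflang1033\deflangfe1042"
)

# Minimal lookup of Windows language IDs; only the ones seen in this header.
LCID_NAMES = {
    1025: "Arabic (Saudi Arabia)",
    1033: "English (United States)",
    1042: "Korean",
}


def default_languages(header):
    """Map each default-language control word to a readable language name."""
    found = {}
    # 'deflangfe' is listed before 'deflang' so the longer keyword wins.
    for keyword, lcid in re.findall(r"\\(adeflang|deflangfe|deflang)(\d+)", header):
        found[keyword] = LCID_NAMES.get(int(lcid), f"LCID {lcid}")
    return found


if __name__ == "__main__":
    for keyword, language in default_languages(HEADER).items():
        print(f"\\{keyword}: {language}")
```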

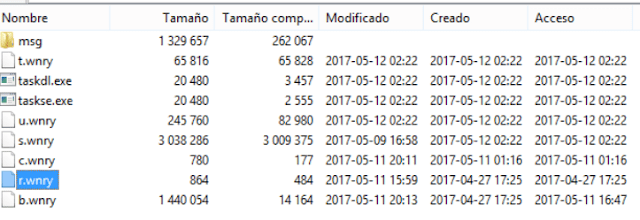

An interesting point is that he uses a zip file that contains the Wannacry ransomware (again, the ransomware itself, not the tool) to inject into the wormable system. This is (in version 2.0 and some 1.0 versions) a password-protected file (for 1.0 it was wcry@2016, and for 2.0 WNcry@2ol7). As we know from our previous research with Gmtcheck for Android, zip files store the Last Access Time and Create Date from the (supposedly) NTFS filesystem of the attacker, with his own timezone applied. So the dates in a zip file are dates in the attacker's timezone. It means that, analyzing the zip, we could deduce the following:

- 2017-04-27 17:25 (attacker local time): The attacker creates b.wnry, a BMP for the desktop background, and r.wnry, which contains the readme file.

- 2017-05-09 16:57: The attacker creates another zip with tools to connect to the Tor network (and negotiate the ransom). They were downloaded to the attacker's filesystem at 16:57 on 09/05/2017, attacker’s local time, then zipped and added to a new zip as s.wnry.

- 2017-05-10 01:16: The attacker creates c.wnry on his system, which contains onion domains and a wallet.

- 2017-05-11 15:59: Creates r.wnry, which contains the removal instructions.

- 2017-05-11 16:47: Edits b.wnry.

- 2017-05-11 20:11: Edits c.wnry again.

- 2017-05-11 20:13: Adds b.wnry to the zip and starts its creation (with password).

- 2017-05-12 02:22: Adds the exe files: u.wnry and t.wnry. The attacker zips and sets the password. The payload of the worm is ready. This is a “magic” hour, as it is established as the time of infection in every affected (infected) system.

This holds even on servers, because it is the time stored in the zip file, and when the malware extracts its own files this same value goes with them. That is why this “last time accessed” value is in every affected system: it comes directly from the unzipping action that the worm performs.
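The reason these dates travel with the archive is that a zip entry records its timestamp as plain local time, with no timezone attached. The following Python sketch illustrates this with the standard `zipfile` module. It builds a small in-memory archive as a stand-in (the entry name and timestamp mirror the timeline above, the content is a placeholder), and it only reads the basic modification time of each entry, not the NTFS access and creation times mentioned in the text.

```python
import io
import zipfile


def build_sample_zip():
    """Create an in-memory zip with one entry stamped 2017-05-12 02:22 local."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        entry = zipfile.ZipInfo("u.wnry", date_time=(2017, 5, 12, 2, 22, 0))
        archive.writestr(entry, b"placeholder content")
    return buffer.getvalue()


def list_local_timestamps(data):
    """Return {filename: (year, month, day, hour, minute, second)}.

    The tuple is whatever local time the creator's machine wrote into the
    archive; the zip format keeps no UTC offset alongside it.
    """
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        return {info.filename: info.date_time for info in archive.infolist()}


if __name__ == "__main__":
    for name, stamp in list_local_timestamps(build_sample_zip()).items():
        print(name, stamp)
```

Listing entries this way does not require the archive password, since only the file contents are encrypted in a classic password-protected zip.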

A random Windows server affected. The same time and date.

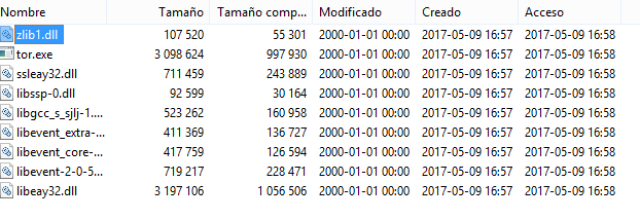

Since we have no UTC reference, we can create this table. Each line represents a hypothesis: let us suppose that the local time at which the zip was created (12/05/2017 2:22) occurred in each of the possible GMT timezones. We have added some “facts” that we know for sure happened at a specific UTC time. For example:

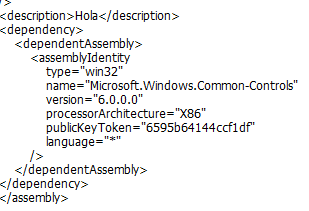

So, let us imagine. If the attacker was in UTC+9 (Korea), as they say, that would be 11/05 17:22 UTC. He created the ransomware, went to sleep (it was 2:22 in the morning for him, and he had spent the whole day “working”…) and 7 hours later (around 12/05 00:00 UTC) the first sample was seen somewhere in the world. Does it make sense? Or maybe he spent those hours joining the ransomware to the EternalBlue exploit. Or maybe he was a Spanish guy (UTC+2 at the time) and, as soon as it was ready, he let the file go and Palo Alto detected it right away… Remember the word “Hola” is embedded in the assembly.
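The hypothesis table can be reproduced with a few lines of Python: take the local time stored in the zip and convert it to UTC under every candidate offset. The function name and the range of whole-hour offsets (UTC-12 to UTC+14) are choices made for this sketch.

```python
from datetime import datetime, timedelta

# Local time stored in the zip for the final payload (no timezone attached).
ZIP_LOCAL_TIME = datetime(2017, 5, 12, 2, 22)


def utc_hypotheses(local_time, offsets=range(-12, 15)):
    """For each whole-hour UTC offset, return the UTC instant it would imply."""
    return {offset: local_time - timedelta(hours=offset) for offset in offsets}


if __name__ == "__main__":
    for offset, utc_time in utc_hypotheses(ZIP_LOCAL_TIME).items():
        print(f"UTC{offset:+d}: {utc_time:%d/%m %H:%M} UTC")
```

Under the UTC+9 hypothesis this yields 11/05 17:22 UTC, and under UTC+2 it yields 12/05 00:22 UTC, matching the two scenarios discussed above.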

Regarding interesting strings… another one, from version 1.0, is “test esttesttest strator”, which appears in c.wry, where some .onion and Dropbox URLs are… draw your own conclusions.

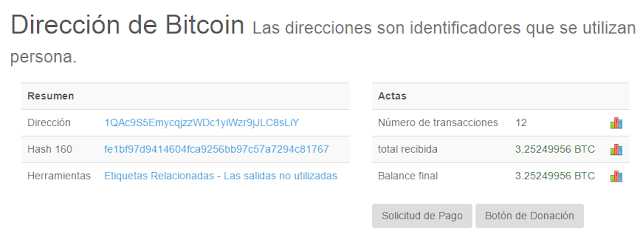

And last but not least: we all know the attacker did not take the money out of the wallets in version 2.0. Too risky, maybe. But just by checking the wallets in version 1.0, we can tell he did not collect the money from the victims of version 1.0 either, days before the “world attack”. These are just two of the wallets in version 1.0, with transactions from early May… and the money is still there.

Just two of the wallets in version 1.0, with transactions from early May… and the money is still there

Conclusions?

Playing attribution is risky.

These times and hours may be legitimate, or outright fake. He may like or hate Messi. The problem is that, since these are all local times, we have not found a UTC reference that enables us to know the precise timezone of the attacker. We may suspect it is UTC+9 (Korea time). However, the reality is that we can only make some assumptions.

- The attacker starts the attack on the 9th.

- He spends the 10th and 12th creating it, almost all day long.

- For some reason, he spends more time editing the Chinese language files.

- Word is configured with Korean as the default language.

- His timezone may be between UTC+2 and UTC+12.

Innovation and Laboratory

What does it take to become a top class Smart City?

AI of Things 9 June, 2017

Singapore

Figure 2: Government initiatives to lead to a tech-led future.

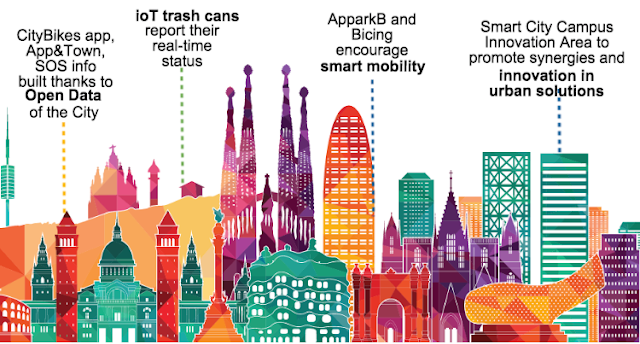

Barcelona

Figure 3: Just some of the ways Barcelona is making smart decisions.

Data rewards are the new consumer engagement tool & Gigabytes are the new currency

AI of Things 31 May, 2017

Today’s consumers are fairly savvy about companies selling to them. As they are constantly bombarded with an increasing number of calls to action, these engagement attempts are often viewed as a nuisance at best. Or at worst, they create a negative association with the company in the consumer’s mind.

What if there were a way to create a win-win consumer engagement scenario for consumers and companies? One in which consumers gained perceived benefits from the engagement and companies were able to meet their marketing and advertising objectives.

This is where Data Rewards come in. Data Rewards serve the interests of both consumers and companies, making it an ideal tool to add to any marketing mix.

Essentially, this new tool rewards customers with small amounts of one-time-use data in exchange for completing a certain desired action, such as watching a video, taking a survey or downloading an app. Similar to a toll-free phone line or free shipping, companies absorb the cost of these services in order to pass on a better experience to their customers. In the case of Data Rewards, companies cover the cost of gigabytes of data and then pass those gigabytes along as currency to pay consumers in exchange for taking desired actions.

This tool is successful because it meets a felt need in key markets, particularly in the LATAM region. Despite high levels of smartphone penetration, which is expected to reach 68% in 2020, only about 48% of users have a regular data plan. The rest of smartphone users rely on a pay-as-you-go plan, which means they are more conscious about their data usage. However, the opportunity for Data Rewards is not limited to these data-restricted customers. Even users on a data plan are conscious of their data usage, as those plans do not always include unlimited data.

In developing markets, including LATAM, over 30% of consumers run out of data each month. These consumers are reluctant to purchase extra data, so they are highly motivated by offers of free data. Data Rewards target this untapped market by offering as a reward the data that these consumers are seeking.

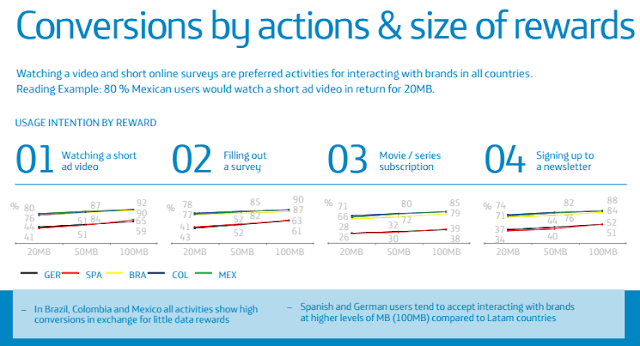

The motivation factor is evidenced by the high participation rates. A September 2016 Telefónica survey looked at consumer engagement rates with four different Data Rewards-incentivized calls to action: watching a short video ad, filling out a survey, subscribing to a movie or a series, and signing up to a newsletter. The study found significant engagement levels in the LATAM market in particular. For example, on average between 65% and 80% of customers in Brazil, Mexico and Colombia engage with all four rewarded offers in exchange for only small amounts of data.

The high levels of engagement associated with Data Rewards are also an ideal scenario for companies. An 80% engagement rate with standard advertising is almost unheard of, even for the catchiest viral advertisements. Using gigabytes as a currency attracts and motivates customers, which allows companies to meet their objectives. Putting a product or service before more sets of eyes both increases brand awareness and potentially increases sales, particularly as these consumers are likely to view the brand more favorably for rewarding them with what they actually need.

A great example of the success of this tool is Vivo’s use of Data Rewards in Brazil. The company ran a R$ 2 million campaign (about $630,000 USD), which resulted in 330,000 leads generated in 45 days. Users who filled out a form completely were rewarded with data. Furthermore, Vivo not only gets leads through this tool, but is also able to gain more precise information about these leads, particularly if the call to action used is a survey.
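To put the Vivo figures in perspective, the cost per lead and the daily lead rate follow directly from the numbers quoted above. The short Python sketch below only does that arithmetic; the function name and dictionary keys are made up for the example, and the USD amount is the approximate conversion given in the text.

```python
def campaign_metrics(cost_brl, cost_usd, leads, days):
    """Derive simple efficiency figures from a campaign's totals."""
    return {
        "brl_per_lead": cost_brl / leads,
        "usd_per_lead": cost_usd / leads,
        "leads_per_day": leads / days,
    }


if __name__ == "__main__":
    vivo = campaign_metrics(
        cost_brl=2_000_000, cost_usd=630_000, leads=330_000, days=45
    )
    for name, value in vivo.items():
        print(f"{name}: {value:,.2f}")
```

With these inputs the campaign works out to roughly R$ 6 (a little under $2 USD) per lead, at about 7,300 leads per day.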

The possibilities for using Data Rewards are endless. Companies can choose to target ads based on consumer habits or needs, and also can customize the amount of data offered for specific actions. Data Rewards ads are also able to be customized with unique company branding. The win-win factor of using Data Rewards makes them attractive to companies and customers alike.

To learn more about how our Data Rewards tool can enhance your advertising efforts, visit our website.

ElevenPaths announces that its security platform complies with the new European data protection regulation one year earlier than required

ElevenPaths 31 May, 2017

- The European regulation will apply from May 2018, when entities that do not comply can be penalized with fines of up to 4% of their annual turnover.

- ElevenPaths introduces new technology integrations with strategic partners such as Check Point and OpenCloud Factory. Michael Shaulov, Director of Check Point Product, Mobile Security and Cloud, will be the special guest at the ElevenPaths annual event. ElevenPaths also works with Wayra, Telefónica’s corporate start-up accelerator.

- ElevenPaths collaborates with the CyberThreat Alliance to improve and advance the development of solutions that fight cybercrime.

In corporate environments, this authentication method is ideal for managing guest user access and personal business user devices.

Furthermore, ElevenPaths and the CyberThreat Alliance have strengthened their commitment to fight cybercrime, working together, completing and expanding the image of attacks, providing better protection against major global attacks as well as targeted threats.

ElevenPaths has also launched for the first time this year a session in collaboration with Wayra Spain – the Telefónica Open Future accelerator – in order to find the most disruptive solutions in this area, as well as to provide continuity to other security-focused entrepreneurial initiatives, invested in by companies including 4iQ, Logtrust and Countercraft.

» Download the press release “ElevenPaths announces that its security platform now complies with the new european data protection regulation.“

Machine Learning to analyze League of Legends

AI of Things 25 May, 2017

Written by David Heras and Paula Montero, LUCA Interns, and Javier Carro, Data Scientist at LUCA

Data and Planning

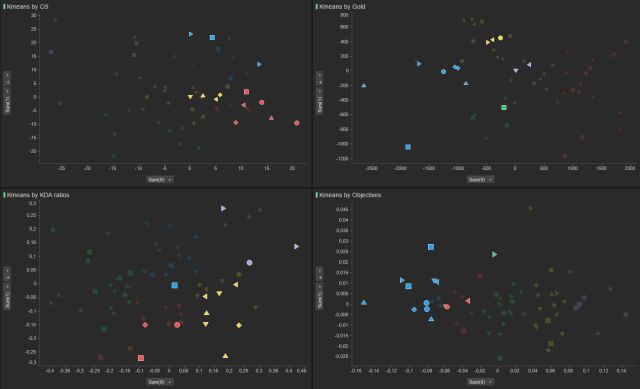

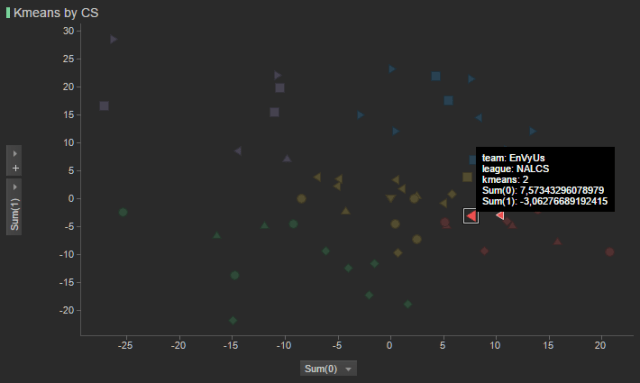

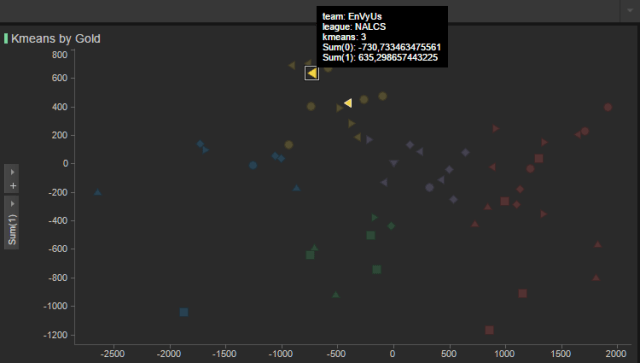

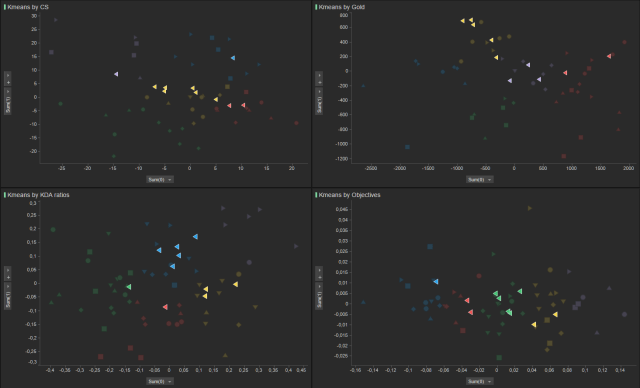

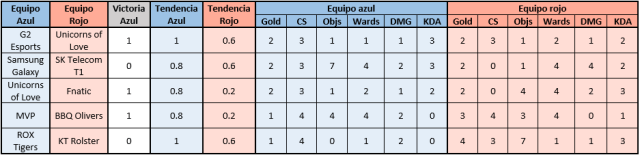

- Completing an unsupervised classification of each team by grouping variables thematically: Gold, CS (“farming”), Wards, Objectives and KDA ratios.

- Adding each team's winning trend over its last five matches, taking into account whether it plays on the blue or red side.

- Team Data:

- Data Gaps:

- Redundancy:

- Temporary untagging:

Characterising the teams

- The teams with the best results tend to group together in the clusters. The same pattern is seen with the teams with the worst results. This means that the algorithm classified each team effectively despite the loss of information caused by the PCA dimensionality reduction.

Figure 1: Highlighting the teams with a lower ranking in the competitions to see how they coincide with the data clusters.

Figure 2: Highlighting the teams with the highest rankings to see how they coincide with the data clusters.

- There are some rare cases like EnVyUs (they finished last in the North American league) which, when looked at through the CS or Gold recovered categories, are amongst the best of their league, like the SoloMid team. However, in all of the other areas that were analysed (KDA ratios and Objectives), they remained one of the worst teams compared with the other leagues.

Figure 3: The special case of the EnVyUs team, which was among the best teams with regard to CS.

Figure 4: The special case of EnVyUs, this time in the worst cluster in terms of gold.

- It should be noted that in the case of some leagues, their participants remain close. In the North American league, the teams stay close in all areas analyzed. This trend does not seem to happen in the European or Turkish leagues.

Figure 5: Measuring the proximity of teams that play in the same league.

Trends

Final Dataset

- The teams who will compete against one another

- The cluster that each team belongs to for each group of variables, based on the games it has played.

- The winning trend in the last five games played by the blue team as a blue team.

- The winning trend in the last five games played by the red team as a red team.
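The side-specific winning trend in the list above can be computed as the share of wins in a team's most recent games on a given side. The Python sketch below is one plausible way to do it; the `Match` record, the function name and the choice of a simple win ratio are assumptions made for illustration, since the exact formula used is not described.

```python
from dataclasses import dataclass


@dataclass
class Match:
    team: str   # team name
    side: str   # "blue" or "red"
    won: bool   # True if the team won this game


def side_win_trend(history, team, side, window=5):
    """Win ratio over the team's last `window` games played on `side`.

    `history` must be in chronological order (oldest first). Returns None
    when the team has no recorded games on that side.
    """
    results = [m.won for m in history if m.team == team and m.side == side]
    recent = results[-window:]
    if not recent:
        return None
    return sum(recent) / len(recent)


if __name__ == "__main__":
    # Hypothetical history for a made-up team.
    outcomes = [True, False, True, True, False, True]
    history = [Match("Team A", "blue", won) for won in outcomes]
    history.append(Match("Team A", "red", False))
    print(side_win_trend(history, "Team A", "blue"))
```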

Figure 6: An example of the final data format.

Results

- Decision Tree: 58%

- Gradient Boosting Classifier: 66%

- Support Vector Machine (SVM): 61%

| Date | Match 1 | Match 2 | Match 3 | Match 4 | Match 5 | Match 6 |
| --- | --- | --- | --- | --- | --- | --- |
| Wednesday, 10 May 2017 | Hit | Hit | Hit | Miss | | |
| Thursday, 11 May 2017 | Hit | Miss | Hit | Miss | Hit | Hit |
| Friday, 12 May 2017 | Miss | Hit | Hit | Hit | Hit | Miss |
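The hit (Acierto) and miss (Fallo) marks listed above for 10 to 12 May can be tallied into a per-day and overall accuracy. This Python sketch simply counts them; the dictionary layout and function names are choices made for the example.

```python
# Outcomes transcribed from the prediction results above, in match order.
PREDICTIONS = {
    "Wednesday 10 May 2017": ["hit", "hit", "hit", "miss"],
    "Thursday 11 May 2017": ["hit", "miss", "hit", "miss", "hit", "hit"],
    "Friday 12 May 2017": ["miss", "hit", "hit", "hit", "hit", "miss"],
}


def accuracy(outcomes):
    """Fraction of predictions marked as a hit."""
    return sum(outcome == "hit" for outcome in outcomes) / len(outcomes)


def overall_accuracy(predictions):
    """Accuracy across every match of every day."""
    all_outcomes = [o for day in predictions.values() for o in day]
    return accuracy(all_outcomes)


if __name__ == "__main__":
    for day, outcomes in PREDICTIONS.items():
        print(f"{day}: {accuracy(outcomes):.0%}")
    print(f"Overall: {overall_accuracy(PREDICTIONS):.1%}")
```

Eleven hits out of sixteen matches is about 69%, close to the 66% reported for the Gradient Boosting Classifier, although sixteen matches is a small sample.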

Special Case

Figure 7: Cluster representations for both G2 (circle) and Flash Wolves (triangle)

A World-Changing Combination: Dr. Claire Melamed on Big Data, Collaboration and the SDGs

AI of Things 24 May, 2017

Dr. Claire Melamed is the Executive Director of Global Partnership for Sustainable Development Data (GPSDD), where she leads efforts to collaborate on leveraging data to meet the UN’s Sustainable Development Goals. In 2014, she was seconded to the UN Secretary General’s Office to be the Head of Secretariat and lead author for “A World That Counts,” the UNSG’s Independent Expert Advisory Group on The Data Revolution.


So Claire, how important is having mobile data to the GPSDD?

Figure 1: Global collaboration using Big Data is crucial for reaching the SDGs.

What characteristics do you look for in the telcos that you work with as key factors in the success of the partnership, such as having a CDO, a data monetization process already in place or other factors?

But what we really look for is just a desire to engage and a willingness to experiment. One of the things which is so exciting is the range of models that are emerging for that experimentation to take place.

There has been a lot of talk in the media about the SDGs being under threat with everything going on politically in the world right now. Do you think these movements that we’re seeing around the world will slow down the push towards more open data and more data awareness in the political realm?

Figure 2: GPSDD works to leverage data around the world in support of the SDGs.

What were you doing before you joined GPSDD?

You joined GPSDD in October 2016. What are you proudest of in your time there so far?

Both of those trips left me with a really strong sense of the power of the global brokering network and what it is we can do with it in working with partners.

Would you say that the partnerships you can form through GPSDD have more value for developing countries, or is it rather a matter of different use cases for each country?

Figure 3: The East Africa Open Data Conference is an example of GPSDD’s work (photo: GPSDD)

Does this leapfrogging tendency that you are seeing have to do with cost, speed or for the sake of innovation?

One of the huge benefits of Big Data, and telco data in particular, is speed and being able to know what is happening now.

Traditionally, many low-income countries have relied on survey data to track outcomes such as health outcomes, population movement and things like that. But when you do a survey, sometimes you don’t get the results for two or three years. So some of the experiments that are being done to address this are using mobile phone top ups as a proxy for poverty data, meaning that you can get a reasonably accurate map of poverty in your country every day, whereas traditionally governments are used to seeing a two- to three-year timeline on that.

Finally, what would be your wish in going forward with partnerships in order to make things happen faster and more effectively?

What are the institutional, legal, and regulatory changes that can be made to help that data flow faster? There are also the investment challenges. What are the political arguments that can be used to encourage governments to invest in the capacity they need?

When I was in Kenya, some of the government departments that I met had really constrained capacity. They only had one or two highly qualified statisticians in the central department and even fewer out in the districts. So in those cases, even if they did get access to loads and loads of data, it would not be massively useful because they would not be able to actually get the insights from it.