Figure 1: Lewis Hamilton has beaten the previous record with 41 wins from pole position

Figure 2: Technology and processes used in F1 can be applied to smart cities and medical care

Amid the ransomware plague, banking trojans are still alive. ElevenPaths has analyzed N40, an evolving malware that is particularly interesting because of the way it tries to bypass detection systems. In some ways, the trojan is a classic Brazilian banking malware that steals credentials from several Chilean banks, but what makes it more interesting are some of the features it includes, which are not that common in this kind of malware.

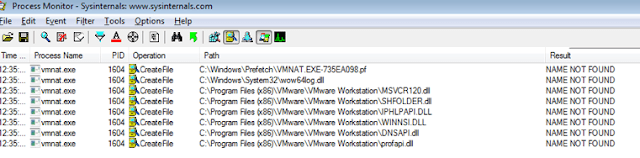

DLL Hijacking

DLL hijacking has been known for years. Basically, it occurs when a program does not properly check the path from which its DLLs are loaded. An attacker who is able to replace a DLL, or plant a new one, in one of those paths can then execute arbitrary code whenever the legitimate program is launched. It is a well-known problem and a commonly used technique, yet not all DLL hijacking problems are equally serious. Some are mitigated by the search order in which DLLs are loaded, by the permissions set on the folder where the executable lies, etc. This malware is aware of this: it turns these “less serious” DLL hijacking problems into a way for attackers to evade detection systems and, in turn, into a powerful tool for malware developers. This will probably force many developers to review the way they load DLLs from the system if they do not want their programs to be used as a “malware launcher”.

Some of the DLLs that may be used for DLL hijacking

What makes this malware really remarkable is that it consists of two different stages.

In this case, the malware abuses a DLL hijacking problem in VMnat.exe, a standalone program that ships with several VMware software packages. VMnat.exe (like many other programs) tries to load a system DLL called shfolder.dll (specifically, it needs the SHGetFolderPathW function from it). It first tries to load the DLL from the same folder in which VMnat.exe is located; if it is not found there, it checks the system folder. The malware places both the legitimate VMnat.exe and a malicious file renamed shfolder.dll (the malware itself, signed with a certificate) in the same folder. The “first stage malware” then launches VMnat.exe, which finds the malicious shfolder.dll first and loads it into its memory. The system is now infected, but what SmartScreen perceives is that a reputable file has been executed.
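The search order described above can be modeled in a few lines. The following Python sketch is a deliberately simplified illustration, not how the Windows loader is implemented: it assumes only two locations (the application folder, then the system folder) and ignores KnownDLLs, SafeDllSearchMode, side-by-side manifests and SetDllDirectory. The helpers resolve_dll and hijack_risk are hypothetical names.

```python
import tempfile
from pathlib import Path
from typing import Optional, Sequence


def resolve_dll(name: str, search_dirs: Sequence[Path]) -> Optional[Path]:
    """Return the first file called `name` found in `search_dirs`, in order.

    Simplified model of DLL search order: only directory order is considered.
    """
    for directory in search_dirs:
        candidate = directory / name
        if candidate.is_file():
            return candidate
    return None


def hijack_risk(name: str, app_dir: Path, system_dir: Path) -> bool:
    """True if a copy of `name` in the application folder shadows the system copy."""
    chosen = resolve_dll(name, [app_dir, system_dir])
    return chosen is not None and chosen.parent == app_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        app_dir, system_dir = Path(tmp, "app"), Path(tmp, "system32")
        app_dir.mkdir()
        system_dir.mkdir()
        (system_dir / "shfolder.dll").write_bytes(b"legitimate")
        print(hijack_risk("shfolder.dll", app_dir, system_dir))  # False
        # Planting a file next to the executable changes what gets loaded.
        (app_dir / "shfolder.dll").write_bytes(b"planted")
        print(hijack_risk("shfolder.dll", app_dir, system_dir))  # True
```

The point of the model is that the legitimate executable never changes: only the contents of its own folder decide which shfolder.dll is picked up.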

Through this innovative move, the attacker can:

More interesting features

This malware, of course, uses some other interesting (but previously known) techniques. It is well prepared to bypass static signatures (at least temporarily) and uses “real time string decoding”: while it runs, it keeps every string encrypted in memory and only decrypts each one when strictly necessary. This keeps the strings hidden even when the raw memory is dumped by an analyst or sandbox.
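The idea behind “real time string decoding” can be sketched as a lookup table that only ever stores ciphertext and decodes a single entry at the moment it is needed. This Python sketch uses a repeating-key XOR purely as a stand-in, since the post does not describe N40’s actual cipher; the key and the LazyStrings class are hypothetical. In Python the decoded value still lives briefly in memory, so this only illustrates the pattern, not a memory-safe implementation.

```python
from typing import Dict


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """Repeating-key XOR; applying it twice with the same key restores the input."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


class LazyStrings:
    """Holds only encrypted strings and decodes one on demand."""

    def __init__(self, key: bytes, encrypted: Dict[str, bytes]) -> None:
        self._key = key
        self._encrypted = dict(encrypted)

    def get(self, name: str) -> str:
        # Decrypt just this entry, just now; nothing is cached in plaintext.
        return xor_bytes(self._encrypted[name], self._key).decode("utf-8")


# At build time the strings would be encrypted once and embedded as bytes.
KEY = b"\x5a\x13\xc7"  # hypothetical key
TABLE = {"dll": xor_bytes(b"shfolder.dll", KEY)}

strings = LazyStrings(KEY, TABLE)
print(strings.get("dll"))  # shfolder.dll
```

A memory dump taken while the program is idle would contain only the TABLE bytes, which is why this defeats simple string extraction from dumps.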

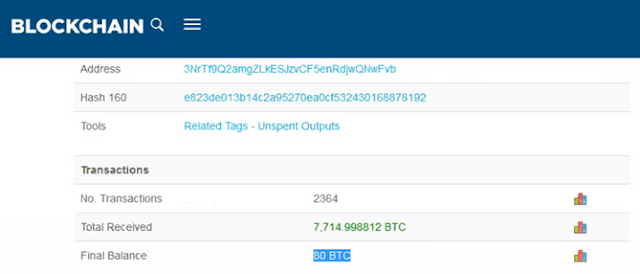

Clipboard cryptohijacking is an interesting attack vector as well. The malware continuously monitors the victim’s clipboard. If it detects a bitcoin wallet address, it quickly replaces it with the address 1CMGiEZ7shf179HzXBq5KKWVG3BzKMKQgS. When victims want to make a bitcoin transfer, they usually copy and paste the destination address; if the malware switches it “on the fly”, the attacker expects the user to trust the clipboard unwittingly and confirm the transaction to the attacker’s wallet. This is a new bitcoin-stealing technique that is starting to become a trend. This bitcoin address has held 20 bitcoins in the past, and some of these funds were transferred directly to another bitcoin address (supposedly owned by the creators) that holds 80 bitcoins. This means the attackers have plenty of resources and have been quite successful.

The wallet used by the malware sends the bitcoins to this other wallet, which holds 80 bitcoins
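The malware first has to recognize a wallet address in the clipboard. The Python sketch below shows a purely syntactic check for legacy Base58 addresses (those starting with 1 or 3) and, from the defender’s side, how a wallet application could flag the copy/paste substitution described above by comparing what was copied with what is about to be pasted. The pattern does not verify the Base58Check checksum and ignores bech32 (bc1) addresses; the function names are hypothetical.

```python
import re

# Legacy Base58 addresses: '1' (P2PKH) or '3' (P2SH) followed by Base58
# characters, which exclude 0, O, I and l. The checksum is NOT verified.
BTC_ADDRESS = re.compile(r"[13][a-km-zA-HJ-NP-Z1-9]{25,34}")


def looks_like_btc_address(text: str) -> bool:
    return BTC_ADDRESS.fullmatch(text.strip()) is not None


def clipboard_swapped(copied: str, pasted: str) -> bool:
    """True if an address was copied but a different address is about to be pasted."""
    return (
        looks_like_btc_address(copied)
        and looks_like_btc_address(pasted)
        and copied.strip() != pasted.strip()
    )


print(looks_like_btc_address("1CMGiEZ7shf179HzXBq5KKWVG3BzKMKQgS"))  # True
```

Note that a checksum check alone would not help the victim: the attacker’s replacement is itself a valid address, so only comparing against the original copy reveals the swap.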

Conclusions

This malware comes from Brazil, but targets most of the popular Chilean banks. It uses previously unknown weaknesses in well-known software to bypass some detection techniques, which is an interesting step forward in the way malware is executed on the victim’s computer. VMware has been alerted about this and has quickly improved its security. Yet this is not a VMware-specific problem: any other reputable program with a DLL hijacking weakness (and there are many) may be used as a “malware launcher”. This gives malware makers plenty of room to use legitimate, signed software as a less noisy execution technique.

It uses many other cutting-edge techniques, such as clipboard cryptohijacking, communicating with its command and control servers over nonstandard ports that rely on dynamic DNS systems, and decrypting strings in memory only when strictly necessary. All of this makes it a very interesting piece of malware for understanding how attackers are evolving to avoid detection; it is even a step ahead of the Russian school, which is traditionally more “innovative” in the malware field.

In a nutshell: this is an interesting evolution of Brazilian malware that contains very advanced techniques against analysts and antivirus engines (aside from the usual ones, not mentioned here, that are standard in current malware) and is effective against banking entities. The main points are:

The following report contains more information about this threat, including specific IOCs.

Content originally written by Carmen Rodríguez, intern at LUCA, and Javier Carro, Data Scientist within LUCA’s Big Data for Social Good area.

What would you say if we told you that data can help save lives? And if we could use it to help minimize the consequences of a natural disaster?

In LUCA’s Big Data for Social Good area, we have a line of research that focuses on analyzing data related to natural disasters (earthquakes, floods, etc.) with the aim of managing them better. You can see an example of this work in this post about our collaboration with UNICEF.

The repercussions of such events show up in the way we communicate in their aftermath. We call the emergency services for help, we call our friends to see if they are okay, and we let our family know that we are safe. These human reactions are reflected in the mobile data from telephone networks which, once suitably anonymized and aggregated, can be used to help manage such events.

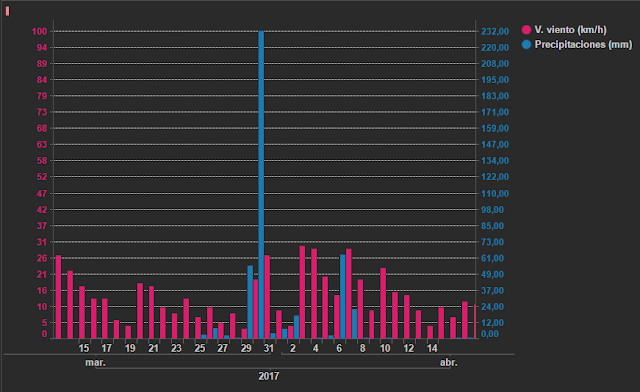

On this occasion, we have studied the impact of the storm that hit the Golfo de San Jorge region of Argentina between 29th March and 7th April 2017. This event received widespread news coverage for several days. Comodoro Rivadavia and Rada Tilly are two areas located in the basins of various rivers and their drainage systems. The storm dropped around 232 mm of rain on the 29th, in a month in which the average rainfall in Comodoro Rivadavia is a mere 20.7 mm. This intense rainfall, combined with rivers that flow into the Atlantic Ocean bursting their banks, caused large floods in the city and led to the evacuation of thousands of people.

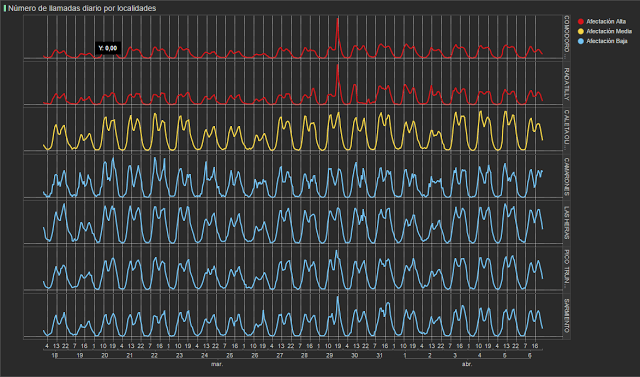

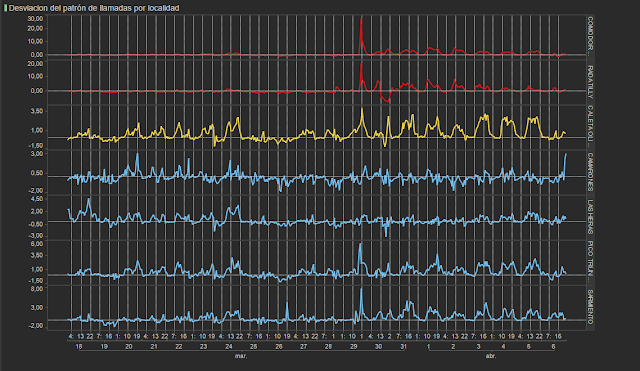

To carry out the analysis, we used hourly call data from different municipalities. Based on their different call volumes, we grouped the municipalities as shown in figure 2. Red marks the highly affected areas (Comodoro Rivadavia and Rada Tilly), yellow shows Caleta Olivia, which was moderately affected, and blue represents the low-impact areas (Camarones, Sarmiento, Las Heras and Pico Truncado).

The following graph shows the number of calls per hour in each of these zones. At first glance, we can already see that for the red lines (ground zero of the catastrophe), there is a sharp peak of calls on the 29th March at 6pm.

We can also see an increase in calls in the regions of Sarmiento and Pico Truncado, which shows us that the storms impacted zones that are geographically further away.

To dig a little deeper, we calculated how far the number of calls deviated from its regular daily and hourly patterns. We normalized this difference, so in the following graph each spike marks a large deviation from the traffic we would expect at that time. In this case, we can see a peak in every zone on the 29th.
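The post does not give the exact normalization formula, so the following Python sketch assumes a standard score: each observed hourly call count is compared with the mean and standard deviation of a reference period at the same weekday and hour. The function name, the z-score choice and the numbers in the example are assumptions for illustration, not LUCA’s data or method.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean, pstdev
from typing import Dict, List, Tuple


def hourly_deviation(
    baseline: Dict[datetime, int], observed: Dict[datetime, int]
) -> Dict[datetime, float]:
    """z-score of each observed hourly count against the baseline
    counts recorded at the same (weekday, hour)."""
    slots: Dict[Tuple[int, int], List[int]] = defaultdict(list)
    for ts, calls in baseline.items():
        slots[(ts.weekday(), ts.hour)].append(calls)

    deviations = {}
    for ts, calls in observed.items():
        history = slots.get((ts.weekday(), ts.hour))
        if not history:
            continue  # no reference data for this slot
        mu, sigma = mean(history), pstdev(history)
        deviations[ts] = 0.0 if sigma == 0 else (calls - mu) / sigma
    return deviations


# Made-up counts for the 6pm slot on four earlier Wednesdays
baseline = {
    datetime(2017, 3, day, 18): calls
    for day, calls in [(1, 100), (8, 110), (15, 90), (22, 100)]
}
observed = {datetime(2017, 3, 29, 18): 300, datetime(2017, 4, 5, 18): 100}
print(hourly_deviation(baseline, observed))  # 29 March stands out (z ≈ 28.3)
```

Keying the baseline on weekday and hour means a normally busy Monday morning is not mistaken for an anomaly, which is the point of comparing against regular daily and hourly patterns.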

In this type of disaster, such as flooding caused by heavy rainfall or an earthquake, the reaction is usually immediate. On the 28th, the number of calls was largely in line with the average, while on the 29th there was a large deviation during one specific hour. In the following days, the situation began to return to normal.

In the following map (figure 4), we can see the evolution of the flood. The different colors represent the degree of deviation from the norm: light green shows the smallest deviations and red the largest. We can clearly see the sudden change on 29th March and how the situation stabilizes afterwards.

We can take the analysis a step further by looking at the behavior of each antenna, which shows the impact of the flood on different zones within the same municipality.

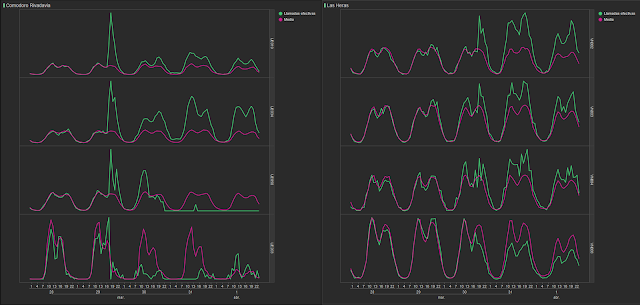

In figure 5, we represent the number of calls from antennae in various areas, and we can see how some antennae show a larger spike than others. We can also see how some stopped working altogether, probably due to technical faults in the network caused by the weather conditions.

As figure 6 shows, by comparing call data (the green line) with its normal behavior (the red line), we can also compare the deviations across each municipality’s antennae and see the different consequences in the affected zones. The graphs on the left represent antennae in Comodoro, which show a large spike at 6pm on the 29th. However, in the graphs on the right (antennae in Las Heras), the impact of the disaster is seen in the days following the event.

Thanks to our mobile network, we can not only see behavior through call traffic, but also mobility behavior by using anonymized and aggregated data. In this way, we can study how people move following a natural disaster.

We have created an “origin and destination matrix” for all the provinces in Argentina, with particular attention to the areas we have been looking at so far, and followed the same method to calculate deviations. In figure 7, we have applied a filter so that the only visible areas are those that showed large deviations during the period studied. You can see the different mobility profiles for the most affected origin-destination pairs.
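An origin-destination matrix is essentially a count of trips per (origin, destination) pair. The Python sketch below builds one from trip records and keeps only the pairs whose count for a period deviates strongly from a baseline. For brevity it uses a relative deviation rather than the normalized deviation used for calls; the threshold, function names and numbers are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Pair = Tuple[str, str]  # (origin, destination)


def od_matrix(trips: Iterable[Pair]) -> Counter:
    """Count trips per (origin, destination) pair for one period."""
    return Counter(trips)


def large_deviations(
    period: Counter, baseline: Dict[Pair, float], threshold: float = 0.5
) -> Dict[Pair, float]:
    """Relative deviation (observed - expected) / expected, kept only
    where its absolute value reaches `threshold`."""
    result = {}
    for pair, expected in baseline.items():
        if expected <= 0:
            continue
        deviation = (period.get(pair, 0) - expected) / expected
        if abs(deviation) >= threshold:
            result[pair] = deviation
    return result


# Made-up numbers for illustration only
baseline = {
    ("Comodoro Rivadavia", "Rada Tilly"): 100.0,
    ("Caleta Olivia", "Comodoro Rivadavia"): 50.0,
}
trips = [("Comodoro Rivadavia", "Rada Tilly")] * 40 + [
    ("Caleta Olivia", "Comodoro Rivadavia")
] * 48
print(large_deviations(od_matrix(trips), baseline))
# {('Comodoro Rivadavia', 'Rada Tilly'): -0.6}
```

A negative value, as in this example, corresponds to the drop in mobility described next: fewer trips than usual between the two areas.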

We can observe a clear negative deviation in mobility on the 29th March. People move less, are isolated in the disaster zone or don’t travel to and from that area due to the meteorological conditions.

We can also observe a second drop in mobility across all origin-destination combinations. This happened on the 7th April and was caused by a new wave of storms and rainfall in the same areas of Argentina.

Without doubt, natural disasters greatly affect our behavior and we are left with a trail of data that, once anonymized and aggregated, we can use to respond better to such events.

One possible use case is the creation of alerts for emergency services, that would be able to direct efforts and resources to the most affected areas, or maybe anticipate when the storm’s effects will begin.

Another possibility is to develop an application that would include warnings for the users of the mobile network, which would be capable of alerting them of imminent danger in their area and offer advice regarding precautionary measures.

Clearly, it is important to discern whether the events we detect in this way are natural disasters or whether the mobility patterns are caused by other events, such as concerts. This can be done with other data sources and forms of analysis, such as the state of the network itself and Natural Language Processing (NLP) of Twitter activity.

With this analysis, we can continue to test the great potential that data has in such services aimed at social good. We are talking about data that is capable of improving, helping and preventing the possible effects of these catastrophes. For now, don’t forget to follow us on Twitter, LinkedIn and YouTube to keep up to date with all things LUCA.

Don’t miss out on a single post. Subscribe to LUCA Data Speaks.

Figure 1. Unlike other sectors, construction has not fully embraced AI yet

Figure 2. With data, construction sites will become safer and make better use of equipment

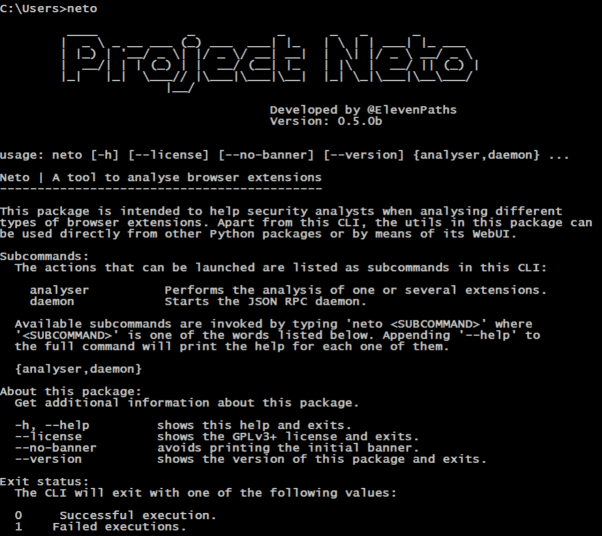

Why should we analyze extensions?

Extensions contain relevant information, such as the version, the default language, the permissions required for them to work correctly or the structure of the URLs on which the extension will operate. They also contain pointers to other files, such as the relative path to the HTML file (which is loaded when the user clicks on the extension’s icon) or references to JavaScript files that should run both in the background (background scripts) and on each page the browser loads (content scripts).
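As an illustration of what such an analysis reads, here is a minimal Python sketch (not Neto’s code) that opens an extension package as a ZIP archive, as Firefox .xpi files are, loads its manifest.json and extracts the fields mentioned above. The key names follow the WebExtensions manifest format (version 2); read_manifest and summarize are hypothetical helpers.

```python
import json
import zipfile
from typing import Any, Dict


def read_manifest(path: str) -> Dict[str, Any]:
    """Load manifest.json from an extension package (.xpi files are ZIP archives)."""
    with zipfile.ZipFile(path) as package:
        with package.open("manifest.json") as fh:
            return json.load(fh)


def summarize(manifest: Dict[str, Any]) -> Dict[str, Any]:
    content_scripts = manifest.get("content_scripts", [])
    return {
        "version": manifest.get("version"),
        "default_locale": manifest.get("default_locale"),
        "permissions": manifest.get("permissions", []),
        # HTML page opened when the toolbar icon is clicked
        "popup": manifest.get("browser_action", {}).get("default_popup"),
        # Scripts that run in the background
        "background_scripts": manifest.get("background", {}).get("scripts", []),
        # Scripts injected into pages, and the URL patterns they apply to
        "content_scripts": [js for cs in content_scripts for js in cs.get("js", [])],
        "matches": [m for cs in content_scripts for m in cs.get("matches", [])],
    }
```

For example, summarize(read_manifest("./sample.xpi")) would return a compact view of the permissions and scripts of a local package.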

However, analyzing the files that make up an extension can also reveal files that should not be present in production applications, such as files linked to version control systems like Git, or temporary and backup files.
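A similar check can flag leftover development files. The glob patterns in this Python sketch are an illustrative, hypothetical list rather than Neto’s actual rules. fnmatchcase keeps matching case-sensitive on every platform, and its * also matches across / separators, so nested files are caught.

```python
import fnmatch
from typing import Iterable, List

# Illustrative patterns for files that rarely belong in a production build
SUSPICIOUS_PATTERNS = [
    ".git/*", "*/.git/*",        # Git metadata
    ".svn/*", "*/.svn/*",        # Subversion metadata
    "*.bak", "*.orig", "*.tmp",  # backup and temporary files
    "*~", "*.swp",               # editor leftovers
    "*.DS_Store",                # macOS folder metadata
]


def leftover_files(names: Iterable[str]) -> List[str]:
    """Return archive entries that match any suspicious pattern."""
    return [
        name for name in names
        if any(fnmatch.fnmatchcase(name, p) for p in SUSPICIOUS_PATTERNS)
    ]
```

The entry names can come straight from the package, for instance leftover_files(zipfile.ZipFile("./sample.xpi").namelist()).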

Of course, there are also extensions created as malware or adware, or to spy on the user. There are many examples, especially recently, in Chrome (where the phenomenon has already reached a certain level of maturity) and Firefox. It is currently common to find mining code hidden inside extensions.

The tool

It is a tool written in Python 3 and distributed as a pip package, which makes it easy to install its dependencies automatically.

$ pip3 install neto

On systems where you do not have administrator privileges, you can install the package for the current user only:

$ pip3 install neto --user

Once installed, it creates an entry point in the system, so we can call the application from the command line in any path.

The main functionalities of the tool

There are two functionalities which we have included in this first version:

The different analyzer options can be explored with neto analyser --help. In any case, Neto lets us process extensions in three different ways, using the -e, -d or -u options.

In all of these cases, the analyzer stores the result as JSON in a new file called ‘output’, although this path can be changed with the -o option.

To allow interaction from other programming languages, we have created a daemon that exposes a JSON-RPC interface. If we start it with neto daemon, we can ask the Python analyzer to perform certain tasks, such as analyzing extensions stored locally (using the “local” method) or available online (using the “remote” method). In both cases, the parameters expected by the daemon are the paths of the local or remote extensions to be scanned. The available calls can be listed with the “commands” method, and they can be invoked directly with curl as follows:

$ curl --data-binary '{"id":0, "method":"commands", "params":[], "jsonrpc": "2.0"}' -H 'content-type:text/json;' http://localhost:14041
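The same request can also be sent from Python using only the standard library. This sketch assumes that the daemon listens on the address used in the curl example and answers with standard JSON-RPC 2.0 result/error objects; build_request and call are hypothetical helper names, not part of Neto.

```python
import json
import urllib.request
from itertools import count
from typing import Any, List, Optional

_request_ids = count()


def build_request(method: str, params: List[Any], request_id: int) -> bytes:
    """Serialize a JSON-RPC 2.0 request body."""
    payload = {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    return json.dumps(payload).encode("utf-8")


def call(method: str, params: Optional[List[Any]] = None,
         url: str = "http://localhost:14041") -> Any:
    """POST a JSON-RPC request to the daemon and return its result."""
    body = build_request(method, params or [], next(_request_ids))
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "text/json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        reply = json.load(response)
    if "error" in reply:
        raise RuntimeError(reply["error"])
    return reply.get("result")
```

With the daemon running, call("commands") should list the available methods, and call("local", ["./sample.xpi"]) would ask it to analyze a local file, following the parameter convention described above.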

Alternatively, if we are programming in Python, Neto has also been designed to work as a library.

Python 3.6.5 (v3.6.5:f59c0932b4, Mar 28 2018, 16:07:46) [MSC v.1900 32 bit (Intel)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> from neto.lib.extensions import Extension

>>> my_extension = Extension("./sample.xpi")

In this way, we can access the results of the different analyses carried out on the extension by reading its properties directly:

>>> my_extension.filename

'adblock_for_firefox-3.8.0-an+fx.xpi'

>>> my_extension.digest

'849ec142a8203da194a73e773bda287fe0e830e4ea59b501002ee05121b85a2b'

>>> import json

>>> print(json.dumps(my_extension.manifest, indent=2))

{

"name": "AdBlock",

"author": "BetaFish",

"version": "3.8.0",

"manifest_version": 2,

"permissions": [

"http://*/*",

"https://*/*",

"contextMenus",

"tabs",

"idle",

…

Here is a short clip which shows its basic use.

Plugins and how you can contribute

As it is free software, anyone who wants to contribute can do so through the GitHub repository. The plugin structure found in neto.lib.plugins allows new static analysis criteria to be added according to the analyst’s needs, which turns Neto into an analysis suite that we expect to be powerful. Furthermore, since it is distributed as a package through PyPI, whenever a new functionality is added it can be installed with pip using the --upgrade option.

$ pip install neto --upgrade

We will soon offer more ways to distribute it and more information.

Last year’s leak by the Shadow Brokers of tools belonging to the National Security Agency (NSA) continues to be a talking point. A new Monero-mining malware that uses the EternalRomance tool has appeared on the scene. According to Fortinet’s FortiGuard Labs, the malicious code has been named PyRoMine because it is written in Python, and it was first discovered this month. The malware is downloaded as an executable compiled with PyInstaller, so Python does not need to be installed on the machine where PyRoMine runs. Once installed, it silently steals CPU resources from its victims in order to mine Monero for profit.

“We do not know with certainty how it gets into a system, but taking into account that this is the type of malware that needs to be widely distributed, it is safe to assume that it gets in through spam or drive-by downloads,” said FortiGuard security researcher Jasper Manuel. Worryingly, PyRoMine also sets up a hidden default account with administrator privileges on the infected machine, using the password “P@ssw0rdf0rme”. According to Manuel, this account may be used for reinfection and other attacks.

PyRoMine is not the first miner to use these NSA tools. Other researchers have discovered more malware that uses EternalBlue for cryptocurrency mining with great success, such as Adylkuzz, Smominru and WannaMine.

More information available at Fortinet

In the first statement connected to this, the United States cyber-security authorities have issued a technical alert warning users of a campaign by Russian attackers targeting network infrastructure. The targets are devices at all levels, including routers, switches, firewalls, network intrusion detection systems and other devices that support network operations. With the access they have obtained, the attackers can masquerade as privileged users, which lets them modify the devices’ operations so that they can copy or redirect traffic to their own infrastructure. This access could also allow them to hijack devices for other purposes or to shut down network communications completely.

More information available at US CERT

Facebook has announced its latest steps on user privacy, with the aim of giving users more control over their data in line with the EU’s General Data Protection Regulation (GDPR); these include updates to its terms and data policy. Everyone, regardless of where they live, will be asked to review important information about how Facebook uses data and about their privacy. The topics to review include ads based on data from members, profile information, facial recognition technology and better tools to access, delete and download information, as well as certain special considerations for young people.

More information available at Facebook

Researchers have found that a 0-day in Internet Explorer (IE) is being exploited to infect Windows computers with malware. Qihoo 360 researchers confirm that it is being used on a global scale, reaching targets through malicious Office documents loaded with what has been called the “double kill” vulnerability. Once a victim opens the Office document, it launches a malicious web page in the background that distributes malware from a remote server. According to the company, the vulnerability affects the latest versions of IE and other applications that use the browser.

More information available at ZDNet

Barely hours after the Drupal team published its latest updates to fix a new remote code execution flaw in its content management system software, attackers have already started exploiting this vulnerability in the wild. The newly discovered vulnerability (CVE-2018-7602) affects the core of Drupal 7 and 8 and allows attackers to remotely achieve exactly the same result as the previously discovered Drupalgeddon2 flaw (CVE-2018-7600), letting them compromise the affected websites.

More information available at The Hacker News

Last week Mozilla announced that the next version of Firefox, Firefox 60, will implement new protection against Cross-Site Request Forgery (CSRF) attacks by supporting the SameSite cookie attribute. This attribute can take only two values. In ‘strict’ mode, when a user follows a link into the application from an external site, they will initially be treated as ‘not logged in’, even if they are logged into the site. ‘Lax’ mode is intended for applications that may be incompatible with strict mode.

In lax mode, same-site cookies are withheld on cross-domain subrequests (for example, images or frames), but they are sent when a user navigates from an external site, for example by following a link.

More information available at Security Affairs
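On the server side, the attribute is simply appended to the Set-Cookie header. Here is a minimal Python sketch using the standard library’s http.cookies module (the samesite key is supported from Python 3.8 onwards); the cookie name session and the helper function are hypothetical.

```python
from http.cookies import SimpleCookie


def session_cookie_header(value: str, samesite: str = "Strict") -> str:
    """Build a Set-Cookie header carrying the SameSite attribute."""
    if samesite not in ("Strict", "Lax"):
        raise ValueError("this sketch only accepts 'Strict' or 'Lax'")
    cookie = SimpleCookie()
    cookie["session"] = value
    morsel = cookie["session"]
    morsel["path"] = "/"
    morsel["secure"] = True
    morsel["httponly"] = True
    morsel["samesite"] = samesite
    return morsel.output()


print(session_cookie_header("abc123"))
```

The printed header contains SameSite=Strict alongside Secure and HttpOnly; passing "Lax" produces the more permissive variant for applications that break under strict mode.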

More information available at SC Magazine

More information available at Google

More information available at The Hacker News

More information available at Security Affairs

Figure 1: Ready for a Wild World – Big Data for Social Good 2018

HSTS has some gaps

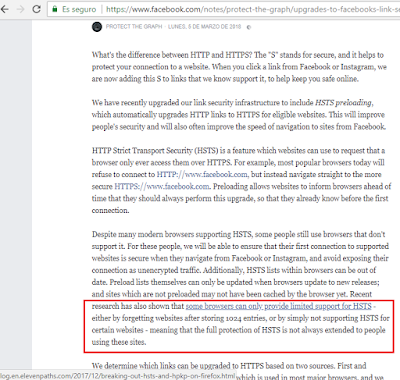

A few weeks ago, in its official security blog, Facebook announced a security update for links on Facebook. What did it consist of? In his post, Jon Millican (an engineer on Facebook’s data privacy team) introduced the HSTS concept and then listed a series of known HSTS weaknesses (practically inherent to the mechanism), which they intended to cover with this new approach, as we can see here:

Facebook mentions our research as part of its argument to implement this improvement

With these arguments at hand, they proposed a solution on their side: what if they, from their privileged position, added the “S” to any HTTP links to other sites on Facebook and Instagram?
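The idea of adding the “S” can be sketched as a link rewriter. This Python sketch is not Facebook’s implementation: it assumes a hypothetical set of hosts already known to serve HSTS, and it leaves links with an explicit port untouched to avoid pointing HTTPS at a port that may only speak HTTP.

```python
from typing import AbstractSet
from urllib.parse import urlsplit, urlunsplit


def upgrade_link(url: str, hsts_hosts: AbstractSet[str]) -> str:
    """Rewrite http:// to https:// for hosts known to support HSTS."""
    parts = urlsplit(url)
    if (
        parts.scheme == "http"            # urlsplit lowercases the scheme
        and parts.port is None            # leave explicit ports alone
        and parts.hostname in hsts_hosts  # hostname is lowercased too
    ):
        return urlunsplit(parts._replace(scheme="https"))
    return url
```

Because the upgrade happens before the user ever clicks, the first visit is protected too, which covers the classic HSTS gap where the very first request still goes out over plain HTTP.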

HSTS… in both directions.

HSTS… for everyone

|

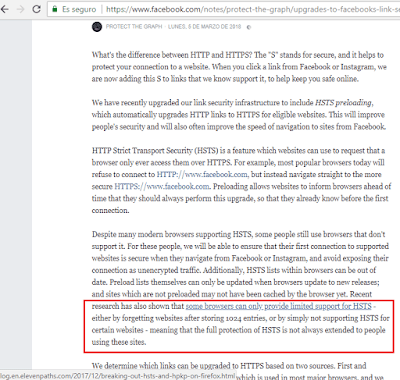

| In the end, whichever HTTP link will be marked as not secure |

New PinPatrol versions

Speaking of HSTS, we also have new versions of PinPatrol for Chrome and Firefox, which let you better control the HSTS and HPKP entries stored in the browser and include usability and compatibility improvements.
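The HSTS entries that a browser stores originate from the Strict-Transport-Security response header. As a reminder of what that header carries, here is a small Python parser following the directive syntax of RFC 6797 (case-insensitive names, optionally quoted values, max-age required). The preload token is not part of the RFC but is widely used for the browsers’ preload lists. parse_hsts is a hypothetical helper, and unlike the RFC it does not reject duplicate directives.

```python
from typing import Dict, Union


def parse_hsts(header: str) -> Dict[str, Union[int, str, bool]]:
    """Parse a Strict-Transport-Security header value into its directives."""
    directives: Dict[str, Union[int, str, bool]] = {}
    for part in header.split(";"):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        value = value.strip().strip('"')  # values may be quoted strings
        directives[name.strip().lower()] = value if sep else True
    max_age = directives.get("max-age")
    if not isinstance(max_age, str) or not max_age.isdecimal():
        raise ValueError("max-age directive is missing or invalid")
    directives["max-age"] = int(max_age)  # seconds the host stays HTTPS-only
    return directives
```

For instance, "max-age=31536000; includeSubDomains; preload" yields a one-year policy that also covers subdomains.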

Energy efficiency has become the ‘first fuel’ of the 30 countries that belong to the International Energy Agency (IEA), including Spain. This means that the energy saved by the members of this organization was higher in 2010 than the energy demand met by any source of energy, including oil, gas, coal, electricity or any other fuel.

Energy efficiency is a key lever for achieving sustainability objectives, which require lowering emissions of CO2 equivalent. The IEA modelled that 40% of the emission reductions needed to limit the increase in global temperature to 2 degrees by 2050 could be achieved through energy efficiency.

These figures underline the additional benefits of energy efficiency, on top of obvious advantages such as savings for companies and industries. All of them are part of the concept known as the ‘multiple benefits’ of efficient energy management, which the IEA itself addressed in a workshop held in Paris last March, where it updated and reflected on its progress.

This concept, which is still little-known, was presented in a 2014 report entitled ‘Capturing the Multiple Benefits of Energy Efficiency’, which described how investment in energy efficiency benefits the different stakeholders, identifying 12 key areas with a positive impact due to energy efficiency, including energy savings.

This list of advantages also includes environmental sustainability, asset values, macroeconomic development, industrial productivity, energy security, access to energy, the cost of energy, public budgets, disposable income, air pollution, and health and overall wellbeing.

Different kinds of solutions

Tools of this nature can be grouped into two kinds: remote metrics and remote control. The former mainly consists of deploying IoT sensors in the client’s facility that generate information on consumption and the variables it depends on (outside temperature, inside temperature, humidity, etc.). This information is transmitted via mobile or fixed networks to a cloud platform that stores and processes it and provides a visualization environment. The latter adds actuators, which are managed remotely from the platform to allow dynamic configuration and therefore better optimization of consumption. Smart Energy IoT by Telefónica is an example of a specific solution to manage energy efficiency through the application of the IoT.
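The difference between the two kinds of solution can be sketched in a few lines of Python: remote metrics only collect readings, while remote control also turns them into commands for an actuator. The comfort band, the ventilation rule and all names here are hypothetical and are not taken from Smart Energy IoT or any other product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    consumption_kwh: float
    inside_temp_c: float
    outside_temp_c: float
    humidity_pct: float


class Platform:
    def __init__(self, comfort_low: float = 20.0, comfort_high: float = 24.0) -> None:
        self.history: List[Reading] = []  # remote metrics: stored for analysis
        self.comfort_low = comfort_low
        self.comfort_high = comfort_high

    def ingest(self, reading: Reading) -> None:
        """Remote metrics: keep every reading for processing and visualization."""
        self.history.append(reading)

    def hvac_command(self, reading: Reading) -> str:
        """Remote control: choose a command for a heating/cooling actuator."""
        if reading.inside_temp_c < self.comfort_low:
            return "heat"
        if reading.inside_temp_c > self.comfort_high:
            # Use cooler outside air when possible instead of active cooling
            return "ventilate" if reading.outside_temp_c < self.comfort_high else "cool"
        return "off"  # within the comfort band: no energy spent
```

A remote-metrics deployment would stop at ingest; the extra hvac_command step is what the actuators of a remote-control deployment add.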

In short, by focusing on energy efficiency we get a threefold benefit, since it guarantees savings, sustainability and digitalization or “IoTization”, so it is a solution worth bearing in mind for companies and industries in all sectors.