The Internet of Things (IoT) has proven very useful for improving people's lives in areas such as health and rest. This technology can also help babies fall asleep. Getting a baby to sleep is a delicate task, and it is crucial to the well-being of both parents and children. Smart cribs can make it much easier because they monitor babies' sleep and help improve the quality of their rest.

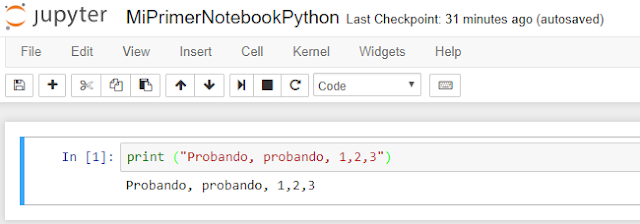

One example of an IoT cradle is SNOO. It was designed by engineers from the prestigious Massachusetts Institute of Technology (MIT) and is equipped with Wi-Fi, sensors, a microphone and speakers to help settle babies to sleep automatically. The device connects to a mobile app that receives data from the cradle's sensors. The app tracks the child's movements, sounds and sleep patterns and sends the statistics to the parents' smartphone for analysis.

When the cradle detects movement or crying, it starts its rocking mechanism to soothe the baby. It also plays a relaxing sound that simulates what a fetus hears in the womb during pregnancy. This is possible because the crib has circular plates beneath the mattress and a motor optimized to move them at low speed.

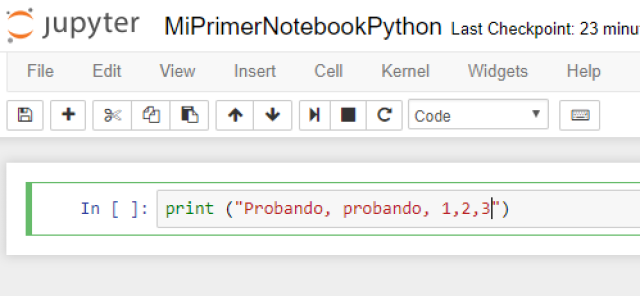

Another example of a smart crib is Max Motor Dreams, a prototype designed by the carmaker Ford. It was inspired by babies' tendency to fall asleep during car rides. To help induce sleep, this connected cradle aims to recreate the experience of riding in a moving vehicle.

Max Motor Dreams has an electric motor, a ring of LED lights and a speaker. During a trip, a smartphone app records the movement, sound and lighting conditions inside the car. The cradle can then reproduce the movements the child is used to on regular journeys. The crib vibrates and moves gently while imitating the sound and light levels inside a car.

In addition to smart cribs, other connected devices designed specifically for children are also available. For example, sensors can be installed in cribs to monitor the baby at all times and send images and activity statistics to the parents' smartphone through an app.

There are also smart pajamas with built-in graphene microsensors that monitor the baby's vital signs and store the readings in an app. Likewise, some car seats connect to the driver's smartphone and send an alert if the driver leaves the vehicle while the baby is still inside, helping prevent children from being left behind.

Trackers installed in strollers can also give parents real-time GPS tracking of the stroller's location. One example of a smart stroller is the Smartbe Stroller. It connects to a smartphone, can propel itself autonomously, and has a built-in camera so parents can keep an eye on the child at all times.

All these IoT devices show how the Internet of Things can improve people's everyday lives. Other examples include smart mattresses and pillows designed to help users of any age sleep better.