As Artificial Intelligence (AI) continues to shape the world around us, today’s post explores its impact on commercial Real Estate investment. To what extent is AI the key to investment success in the property market?

The Applications of AI in Real Estate Investment

Real estate investment can be highly lucrative if it is done right. But investors must be aware that, like any market, property markets do suffer from a degree of volatility and are vulnerable to demand and supply-side ‘shocks’.

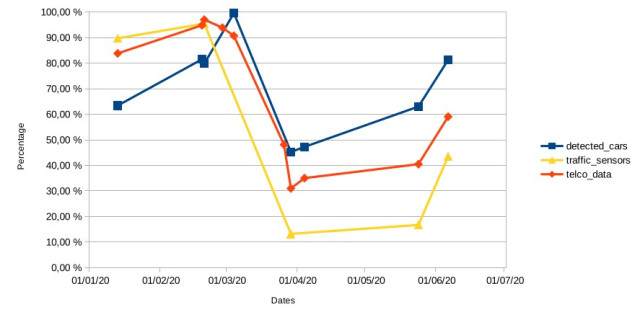

A clear example of such a ‘shock’ is the COVID-19 pandemic, which saw net Real Estate investment volume drop dramatically in certain global markets in the first quarter of 2020, with the Spanish market alone suffering a 40% reduction in sales. This will come as no surprise, given the inevitable decrease in wealth and access to finance arising from the complex financial side effects of state intervention.

CBRE, a leading commercial Real Estate services and investment firm, predicts that investment volume in the global Real Estate market will fall by 38% in 2020. The important question facing investors now is whether markets around the world will crash or boom as a result of the pandemic.

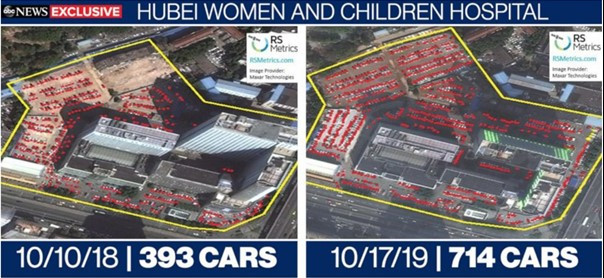

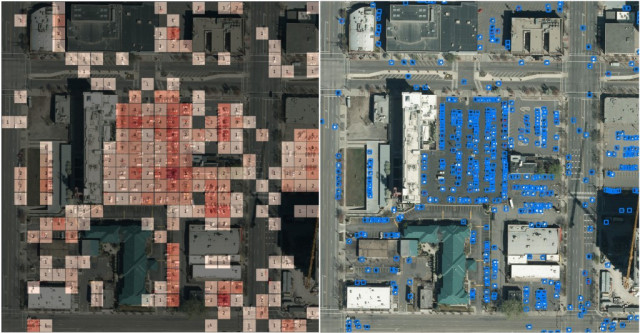

In answering this question, AI and Big Data can provide helpful insights. Data trends can help us predict the future state of Real Estate markets around the world with increasing accuracy.

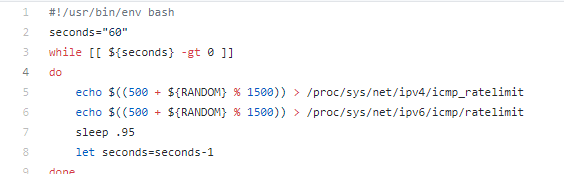

Skyline AI, a New York-based property investment company, offers commercial investors the opportunity to make investment decisions with the help of unique software that compiles and analyses data on a broad set of market indicators. The software draws on indicators such as interest rates, property data and stock market trends to predict the future value of property investments in specific areas at specific times.
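To make the idea of indicator-based prediction more concrete, here is a minimal sketch in Python. It is not Skyline AI’s method, which is not described in this post. It simply assumes that a single indicator (an interest rate) is related to a local price index, fits a straight line through past observations and extrapolates. The function names `fit_line` and `predict`, and all the figures, are hypothetical and made up purely for illustration.

```python
from statistics import mean


def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new indicator value."""
    slope, intercept = model
    return slope * x + intercept


# Made-up illustrative numbers, NOT real market data:
# interest rate (%) against a local property price index.
interest_rates = [1.0, 2.0, 3.0, 4.0]
price_index = [110.0, 105.0, 100.0, 95.0]

model = fit_line(interest_rates, price_index)
forecast = predict(model, 2.5)  # estimated index at a 2.5% interest rate
print(f"Estimated price index: {forecast:.1f}")
```

A real system would combine many indicators and far more data, but the principle is the same: learn a relationship from historical observations, then apply it to current conditions.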

Skyline AI’s algorithms are also able to monitor potential off-market investment opportunities and predict when they will come to market. This gives investors the edge when it comes to accessing lucrative deals.

Other commercial property investment companies focus their analysis on social data, such as neighbourhood crime rates, school ratings and accessibility of public transport, to offer a similar analysis to both commercial investors and regular households who wish to make informed property-buying decisions.

So is AI the future of Real Estate?

Not exactly. Whilst AI can offer increased insights into the future state of property markets, and can be an extremely valuable tool for commercial investors, algorithms can only predict the effects of shock events on markets to a certain degree of accuracy. No algorithm could have predicted COVID-19. Markets are simply too unpredictable, and human intuition and evaluation of shock events remain fundamental. AI insights are only really valuable if combined with the expertise of analysts, who may be able to predict more accurately the impact of ‘new events’ on markets.

We must also remember that humans are far better at selling than any bot or algorithm. The best salesman may be able to persuade even the most prudent investor to purchase a property.

Conclusion

The commercial Real Estate investment market has been, and continues to be, revolutionised by AI-driven insights. Investment companies are able to use Big Data to justify and influence purchasing decisions, increasing the likelihood of a good investment return. However, we must remember that AI-generated insights cannot always predict surprise shocks, and they must be combined with analysts’ experience to provide a more accurate picture of future valuations. Furthermore, AI will never be able to replicate the selling powers of a human being.

To keep up to date with LUCA, visit our website, subscribe to LUCA Data Speaks or follow us on Twitter, LinkedIn or YouTube.