Figure 1. The first edition of the NID hosted more than 15 speakers.


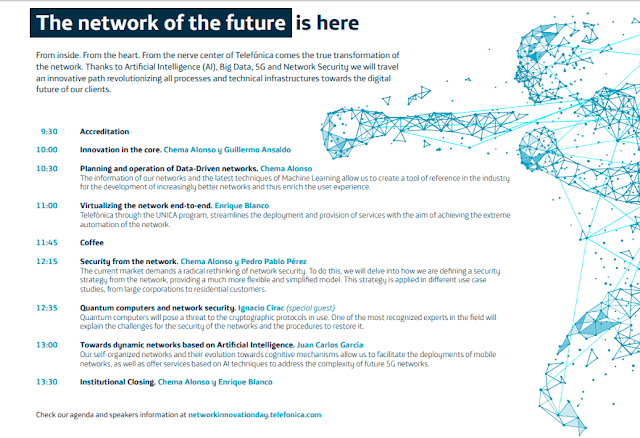

Figure 2. The opening keynote by Chema Alonso and Guillermo Ansaldo focused on innovation and client centricity.

You can also follow us on Twitter: @Telefonica, @LUCA_D3, and @ElevenPaths.