In the world of Threat Intelligence, an attacker's geographical location is one of the most valuable pieces of data for attribution. Even when it is not perceived that way, this information may steer an investigation one way or another. Among the most sought-after details are where the author comes from, where he lives, and where the computer was located at the time of an attack.

We focused our research on taking advantage of “time zone” bugs of this kind to track Android malware developers. We describe two very effective ways to find out a developer's time zone. We have also checked whether these circumstances have any real relation with malware, by diving into our database of 10 million APKs.

The Android software development kit (Android SDK) comes with a tool called “aapt” (Android Asset Packaging Tool). This program packs the files that make up the application and generates an .apk file, which is basically a ZIP archive.

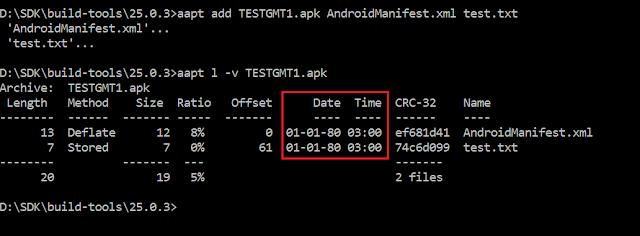

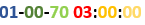

The figure shows a simple .apk generated from the command line with aapt on a computer whose time zone is set to GMT+3.

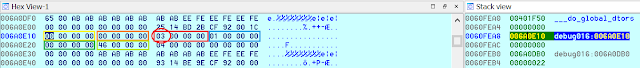

As can be seen, the modification date of the files is 01-01-80 and the hour is “03”, which corresponds to GMT+3. We observed the same behavior across different real apps and time zones. Why?
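A quick way to look for this pattern is to read the modification time of every entry in the archive. The following is a minimal sketch using only Python's standard `zipfile` module; the function name `aapt_leaked_offset` is our own. It assumes, from aapt's year correction (years below 80 are set to 80 while month and day are kept), that zones west of Greenwich would show up as 31-12-80 with the hour equal to 24 plus the negative offset.

```python
import zipfile
from collections import Counter
from typing import Optional


def aapt_leaked_offset(apk) -> Optional[float]:
    """Return the GMT offset (hours) leaked by aapt-style timestamps, or None.

    `apk` is a path or a binary file object. Entries dated 1980-01-01 are read
    as east of GMT (hour = offset); entries dated 1980-12-31 are assumed to be
    west of GMT (hour = 24 + offset), following aapt's year clamp.
    """
    offsets = Counter()
    with zipfile.ZipFile(apk) as archive:
        for info in archive.infolist():
            year, month, day, hour, minute, _second = info.date_time
            if year != 1980:
                continue  # a real timestamp: no aapt leak in this entry
            if (month, day) == (1, 1):
                offsets[hour + minute / 60] += 1
            elif (month, day) == (12, 31):
                offsets[hour + minute / 60 - 24] += 1
    if not offsets:
        return None
    # Report the offset shared by most entries.
    return offsets.most_common(1)[0][0]
```

For an archive like the one in the figure, whose entries are dated 01-01-80 03:00, the function would return 3.0. A result of 0.0 is ambiguous, since other tools may also write the bare DOS epoch (01-01-80 00:00) on purpose.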

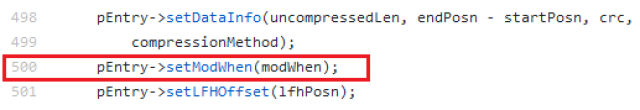

In the routine where aapt adds a new file to an .APK (ZipFile.cpp, line 358), there is a call to “setModWhen” at line 500, with the variable “modWhen” as its argument.

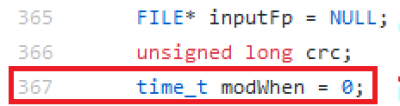

However, tracing back through the code, there is no point where “modWhen” receives a useful value. It simply keeps the “0” it was initialized with at line 367 of the same file (ZipFile.cpp, line 367):

As a result, setModWhen is always called like this:

Inside this function (ZipEntry.cpp, line 340), the modWhen value (referred to as “when” from now on) is used at line 351 in this operation:

Given the value of “modWhen”, the operation is evaluated like this:

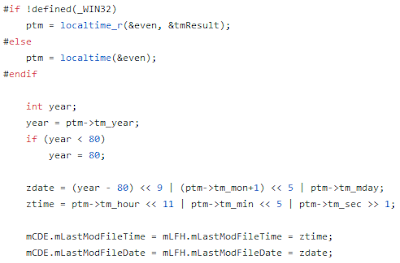

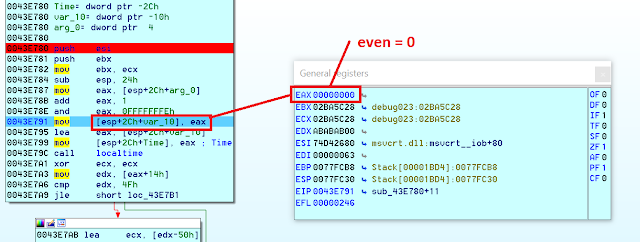

The result is, obviously, “0”. This value is stored in the variable “even”, which is later passed as the argument of the “localtime” function. localtime fills the date structure “struct tm *ptm”, which is then used to set the date and hour of the modification field of each file added to the .APK.

Because the timestamp passed to localtime (the “even” variable) is not a real one, the date generated for the files is not the real date but time 0. aapt then applies a correction to the year (it is set to 80 if lower), and the result finally takes the format already described: “01-01-80 [offset_gmt]:00:00”.
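To make the arithmetic concrete, here is a small Python model of what setModWhen does with `when = 0`. It is a sketch based on our reading of aapt's ZipEntry.cpp, not the original code: the rounding expression `(when + 1) & ~1` is our assumption of the operation at line 351, the DOS date/time bit layout follows the PKZIP format, and the system time zone is replaced by an explicit, hypothetical `gmt_offset_hours` parameter so the result does not depend on the machine running it. The names `set_mod_when` and `decode_dos` are ours.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple


def set_mod_when(when: int, gmt_offset_hours: float) -> Tuple[int, int]:
    """Model of aapt's setModWhen: return the (zdate, ztime) DOS fields."""
    # Round up to an even number of seconds (ZIP times have 2-second resolution).
    even = (when + 1) & ~1
    # Stand-in for localtime(): convert the timestamp with a fixed GMT offset.
    tz = timezone(timedelta(hours=gmt_offset_hours))
    tm = datetime.fromtimestamp(even, tz)
    # struct tm counts years from 1900; years before 1980 are clamped to 80.
    year = max(tm.year - 1900, 80)
    zdate = (year - 80) << 9 | tm.month << 5 | tm.day
    ztime = tm.hour << 11 | tm.minute << 5 | tm.second >> 1
    return zdate, ztime


def decode_dos(zdate: int, ztime: int) -> str:
    """Render DOS date/time fields as DD-MM-YY HH:MM:SS, as in the figures."""
    year = 1980 + (zdate >> 9)
    month, day = (zdate >> 5) & 0x0F, zdate & 0x1F
    hour, minute, second = ztime >> 11, (ztime >> 5) & 0x3F, (ztime & 0x1F) * 2
    return f"{day:02d}-{month:02d}-{year % 100:02d} {hour:02d}:{minute:02d}:{second:02d}"


print(decode_dos(*set_mod_when(0, 3)))
```

With an offset of 3 the model prints `01-01-80 03:00:00`, the value seen in the figure. With a negative offset such as -3, localtime would yield 31 December 1969 at 21:00, and since only the year is clamped, the model produces `31-12-80 21:00:00`.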

The next figure shows “even” set to 0 just before localtime receives it as an argument.

The code then splits the data (day, month, year, hours, minutes and seconds) so that each field can be used separately (in this case, printed separately on screen). localtime returns the fields in this order: seconds, minutes, hours, day, month and year. For example, the first position (0x006A0E10) holds 4 bytes for the seconds, and the last one (0x006A0E24) holds another 4 bytes for the year.
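The order above matches the layout of `struct tm` used by common C runtimes such as MSVC and glibc, whose first six members are 32-bit ints (tm_sec, tm_min, tm_hour, tm_mday, tm_mon, tm_year); that is why the year sits 0x14 bytes after the seconds (0x006A0E24 minus 0x006A0E10). The following minimal Python sketch decodes those six fields from a little-endian memory dump. The dump is hypothetical, built from the values expected for GMT+3 rather than copied from our debugging session, and `decode_tm` is our own name.

```python
import struct

# First six int members of struct tm, in the order used by common C runtimes
# such as MSVC and glibc (the C standard itself does not fix the order).
TM_FIELDS = ("tm_sec", "tm_min", "tm_hour", "tm_mday", "tm_mon", "tm_year")


def decode_tm(dump: bytes) -> dict:
    """Decode the first six 32-bit little-endian ints of a struct tm dump."""
    return dict(zip(TM_FIELDS, struct.unpack_from("<6i", dump)))


# Hypothetical dump of localtime(0) on a GMT+3 machine: 1970-01-01 03:00:00
# (tm_mon is 0-based and tm_year counts years since 1900).
dump = struct.pack("<6i", 0, 0, 3, 1, 0, 70)
print(decode_tm(dump))
print("tm_year offset:", hex(TM_FIELDS.index("tm_year") * 4))
```

Note that tm_year is still 70 at this point; the clamp to 80 happens afterwards, inside aapt.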

So it is definitely localtime that returns this offset (in this case +3), taking it from the operating system. aapt later rounds the date up to 01-01-80 because that is the “epoch” of the PKZIP standard (DOS dates cannot represent years before 1980). The offset appears because localtime adapts every date to the time zone where the computer is supposed to be located.

In our reading of the documentation of the localtime function, this should not happen, because it specifies that when the function receives a null or “0” value as an argument, the return value should be null. So when does localtime pick up the GMT offset and return it? On Windows systems, if the TZ (time zone) variable is not set in the application itself, localtime extracts the time zone information from the operating system, and it does so for any argument value it receives, real or not. A timestamp of “0” is simply interpreted as the Unix epoch (1970-01-01 00:00:00 UTC), that is, hour “0”, so the returned value contains the GMT offset, which ends up cleanly added in the place where the hours should be.
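The dependence on the configured time zone is easy to reproduce outside aapt. The following Python sketch is Unix only, because `time.tzset()` is not available on Windows: it sets the TZ variable and converts timestamp 0 with `time.localtime`, which on Unix is built on the C library's localtime. The helper name `localtime_of_zero` is ours. Note that POSIX TZ strings invert the sign, so `UTC-3` means GMT+3 and `UTC+6` means GMT-6.

```python
import os
import time


def localtime_of_zero(tz: str) -> time.struct_time:
    """Return localtime(0) under the given POSIX TZ string (Unix only)."""
    previous = os.environ.get("TZ")
    os.environ["TZ"] = tz
    time.tzset()
    try:
        return time.localtime(0)
    finally:
        # Restore the previous time zone setting.
        if previous is None:
            del os.environ["TZ"]
        else:
            os.environ["TZ"] = previous
        time.tzset()


for tz in ("UTC0", "UTC-3", "UTC+6"):
    print(tz, time.strftime("%Y-%m-%d %H:%M:%S", localtime_of_zero(tz)))
```

Under `UTC-3` the call returns 03:00 on 1 January 1970, the same hour aapt writes into the APK; under `UTC+6` it returns 18:00 on 31 December 1969.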

What can we conclude? In our view, localtime does not handle this case as it should: when receiving a 0 or null argument, it should return null, not 0 plus whatever your GMT offset (TZ on Windows) is. On the other hand, aapt makes a mistake by feeding this function a “constant” 0 argument.

GMT zone certificate calculation

As mentioned, .APK files (and .jar files, for this particular technique) follow the PKZIP standard: for all practical purposes they are .zip files and share most of the PKZIP specification. When the APK is not built directly with aapt, there is no chance to learn the creator's time zone this way, and all the “modification time” fields of the files inside the zip should hold the “right” values. However, a few years ago we found another factor that reveals the time zone where the developer compiled the application, just as interesting as the previous one and complementary to it. The method consists of calculating the difference between the correct timestamps of the files, which ZIP stores in local time, and the timestamp of the certificate used to sign the APK. The certificate date is stored in UTC, so we have enough reference points to calculate the time zone.
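The arithmetic behind this second method can be sketched as follows. This is a minimal Python sketch under stated assumptions, not our production code: it assumes the certificate was generated shortly before the files were packaged (as happens with ad-hoc, disposable certificates), and it takes the certificate's validity start (`notBefore`, in UTC) and a file's ZIP modification time (local time, without zone information) as already-parsed values. Extracting both from a real APK is left out, and the function name `offset_from_certificate` is ours.

```python
from datetime import datetime
from typing import Optional


def offset_from_certificate(file_local: datetime,
                            cert_utc: datetime) -> Optional[float]:
    """Estimate the developer's GMT offset from an APK's timestamps.

    file_local: naive local time stored in a ZIP entry of the APK.
    cert_utc:   naive UTC time of the signing certificate's notBefore field.
    Assumes both were produced within minutes of each other.
    """
    hours = (file_local - cert_utc).total_seconds() / 3600
    # Round to the nearest half hour to allow for zones such as GMT+5:30.
    offset = round(hours * 2) / 2
    # Offsets in use range from GMT-12 to GMT+14; a value outside that range
    # suggests the certificate was not created at build time.
    if not -12 <= offset <= 14:
        return None
    return offset


# Illustrative values: files stamped 14:03:20 local, certificate 11:01:05 UTC.
print(offset_from_certificate(datetime(2016, 5, 10, 14, 3, 20),
                              datetime(2016, 5, 10, 11, 1, 5)))
```

The example prints 3.0. A value inside the valid range is only meaningful when the certificate really is disposable; a certificate created days before the build can still produce a plausible-looking but wrong offset.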

We tried to establish a relation between:

- Malware/adware creators and the way APKs are compiled (using aapt from the command line).

- Malware/adware creators and the way ad-hoc and disposable certificates are created.

For this experiment, we took 1,000 files (unless stated otherwise) among those showing each kind of leak (1,000 files leaking GMT+1, 1,000 leaking GMT+2, and so on) and checked them for malware.

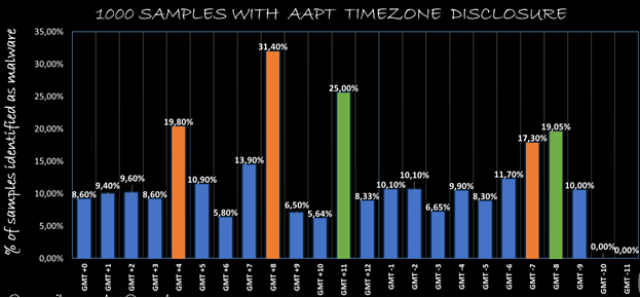

AAPT disclosure bug:

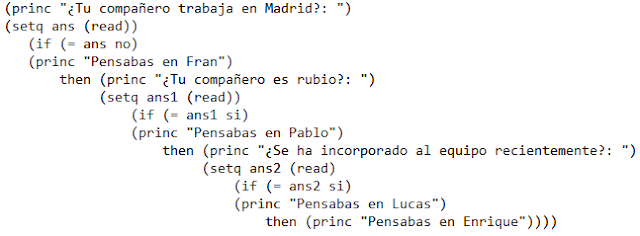


With the AAPT bug:

Green columns are not representative because they are based on too few samples.

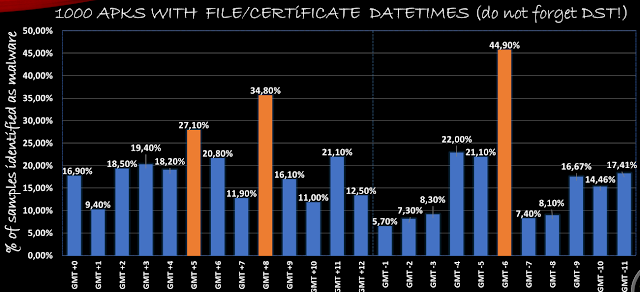

Leaking because of a disposable certificate:

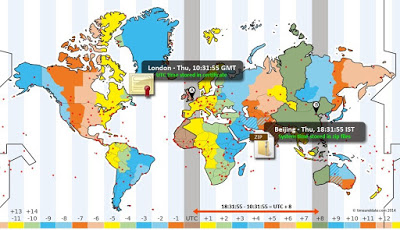

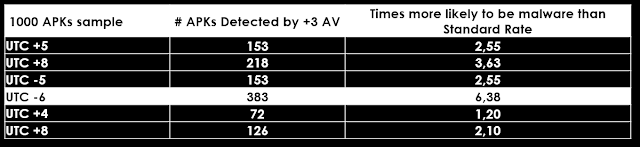

So we can conclude that, basically, GMT+4, GMT+5, GMT+8, GMT-6 and GMT-7 are the time zones producing the most malware. Why the small difference between techniques? With the aapt bug, the predominant time zones producing malware are GMT+4, GMT+8 and GMT-7. With the certificate technique, GMT+5, GMT+8 and GMT-6 produce the most malware. These offsets correspond to parts of Russia, China and the United States West Coast. We think the difference is caused by Daylight Saving Time (DST): both techniques are affected by DST, so some countries may show a ±1 hour difference depending on the season. China does not use DST (and Russia has not used it for a few years either).

In addition, we know that our database contains about 6% malware in any set without these characteristics. Using this figure as a “correction factor” for comparison, we finally get these numbers:

Metadata

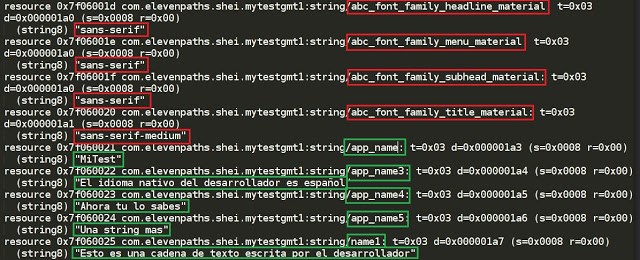

As one of the metadata-related techniques, we show that all the strings automatically generated by Android Studio live in specific components created by the IDE itself, while the text strings written by the developer are found in other files, not associated with any specific component. For example, when running:

`./aapt dump --values resources app.APK | grep '^ *resource.*:string/' --after-context=1 > output.txt`

we get the strings written directly by the developer, which will very likely be in his or her native language.
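Where grep is not at hand, the same filtering can be done in a few lines of Python. This sketch assumes the usual layout of `aapt dump --values resources` output, a `resource 0x... package:string/name:` line followed by a `(string8)` or `(string16)` line holding the quoted value; the sample text is illustrative rather than taken from a real APK, and `string_resources` is our own name.

```python
import re

# Header line of a string resource, e.g.
#   resource 0x7f060000 com.example.app:string/app_name: t=0x03 ...
HEADER = re.compile(r"^\s*resource\s+0x[0-9a-fA-F]+\s+\S+?:string/([^:\s]+):")
# The value line that follows it, e.g.
#   (string8) "Example"
VALUE = re.compile(r'^\s*\(string(?:8|16)\)\s+"(.*)"\s*$')


def string_resources(dump_text: str) -> dict:
    """Map string resource names to values, like grep with --after-context=1."""
    strings = {}
    lines = dump_text.splitlines()
    # Look at each line together with the one that follows it.
    for header_line, value_line in zip(lines, lines[1:]):
        header = HEADER.match(header_line)
        value = VALUE.match(value_line)
        if header and value:
            strings[header.group(1)] = value.group(1)
    return strings


# Illustrative excerpt of `aapt dump --values resources` output.
sample = """\
      resource 0x7f060000 com.example.app:string/app_name: t=0x03 d=0x00000000 (s=0x0008 r=0x00)
        (string8) "Example"
      resource 0x7f060001 com.example.app:string/greeting: t=0x03 d=0x00000001 (s=0x0008 r=0x00)
        (string8) "Hola mundo"
"""
print(string_resources(sample))
```

On the sample this prints `{'app_name': 'Example', 'greeting': 'Hola mundo'}`; the language of values such as the second one is the kind of hint the technique relies on.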

Conclusions and future work

We have presented two techniques to leak the time zone from an app. One of them, related to an aapt bug, not only shows a bug in the way dates are handled, but also a possible problem with a system function (localtime) not honoring its specification. This may affect other programs in other ways.

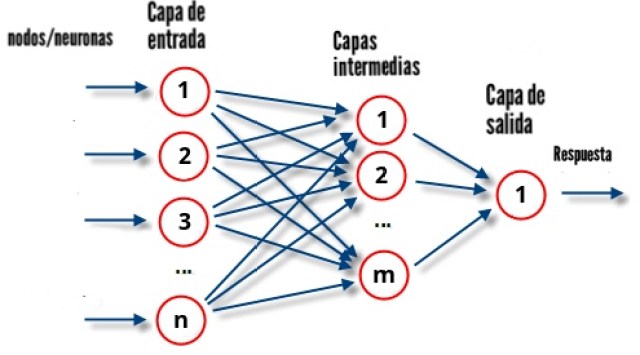

By studying these techniques, we have a possible new way of detecting automated malware creation: analyzing when and how the certificates used to sign these apps are created. Besides providing statistics on where malware comes from, based on its time zones, this may serve as an important feature in machine learning systems for the early detection of Android malware.

In addition, we have shown some tools and tricks to get a quick view of all this useful information in APK metadata.

Future work should handle DST more accurately, taking the season into account when classifying malware, and possibly use more samples to reach better-founded conclusions.

This is just a summary of the complete paper, which you can find here:
