Join us for the first Big Data for Social Good in Action event

AI of Things 4 April, 2017

Figure 2: The event’s agenda is geared towards facilitating conversation around using Big Data for good.

Visit the event page to register your interest in attending. Space is limited, so we will get back to you about whether your request was successful.

New developments in fitness and health data

AI of Things 3 April, 2017

Managing our physical and health data is not yet as glamorous or user-friendly as you might expect: it relies heavily on user proactivity and device syncing. If you have tried to use Apple Health without any connected devices, you know it can be a less than intuitive experience.

That is the gap Mywellness aims to fill: an app (yes, another one) powered by Technogym that helps you reorganize and measure your physical activity data, synchronized with your gym performance. How is that done? By gathering data directly from your activity on the gym’s equipment, such as connected bicycles, treadmills, and weightlifting machines. First, users take a medical examination with the trainer at the gym, and their baseline vital signs are uploaded to the cloud. Users can update them later with further measurements. Then, users follow a workout plan on gym equipment that tracks their progress towards personal goals. To provide a bigger picture of individual health, Mywellness also includes other health data collected on a user’s phone.

Here at LUCA we are eager to see how this trend develops so we can make the application and analysis of data in health and fitness more efficient. As more companies become interested in analysing their employees’ health, equipment like VI is likely to increase in importance. This is definitely a trend worth watching, because one day our salary may well be connected to how well we perform and take care of ourselves physically.

Could Big Data solve the music industry’s problems?

AI of Things 30 March, 2017

Figure 3: Founder of Kobalt Music, Willard Ahdritz

4 companies using Big Data to address Water Scarcity

AI of Things 29 March, 2017

1. GE

Figure 2: GE is focusing on expanding its digital water management profile (image source: GE)

Figure 3: WaterSmart Software uses Big Data to help utilities better manage their water systems.

3. TaKaDu

Figure 4: TaKaDu leaders at a World Economic Forum conference.

4. Imagine H2O

Figure 5: The latest cohort from Imagine H2O’s Water Data Challenge.

Hackathons are not just for developers

Ana Zamora 28 March, 2017

By Glyn Povah, Head of Global Product Development at LUCA.

Actually, this post isn’t really about hackathons at all. Its central theme is a collaborative approach to product development that puts customers front and centre, not as an afterthought.

The Smart Digits team in one of the product sessions.

Customer-centric product development

“We innovate by starting with the customer and working backwards. That becomes the touchstone for how we invent.” Jeff Bezos, CEO of Amazon

- Fostering the right culture. The goal is to foster a spirit of collaboration in the team, where everyone is equal regardless of their role and everyone can have their say. All team members across all functions are encouraged to challenge and be challenged. Robust and challenging conversations deliver great outcomes and decisions. Most of all, this approach fosters trust and buy-in from the whole team, which results in a highly collaborative approach from the start of a new product development project. The same principle applies to involving customers right from the start too. This approach engenders trust and high levels of engagement from the get-go.

- Execution excellence. We believe co-creating with customers gets fast, high-quality results that meet customer needs from day one. We have plenty of examples of going from a working prototype built in a one-day hackathon to a Minimum Viable Product (MVP) we can share with our customer in less than two weeks.

Collaborative teamwork at the offices of Wayra UK.

Think big, start small

4 Data Enthusiasts changing the world as we know it

AI of Things 27 March, 2017

It is becoming increasingly apparent that NGOs and governments are creating more roles in the Data Science discipline. Using Big Data for social good is all about coming up with new data-driven ways to solve the most pressing problems in our society. Today, we decided to take a look at some of the trailblazers in this space, focusing on four Data Scientists and Enthusiasts who really are changing the world with their work.

1. Miguel Luengo-Oroz

Figure 1: Miguel Luengo-Oroz has a very impressive career background.

2. Bruno Sánchez-Andrade Nuño

Figure 2: Bruno Sánchez-Andrade Nuño has focused on using Big Data for social good.

3. Jake Porway

Figure 3: Jake presenting at the National Geographic series.

data to see the good values in data and harnessing this information. He remains an active data scientist even after setting up DataKind, which aims to give every social organization access to data capacity to better serve societies across the world. He graduated from Columbia University and shortly afterwards started working as a Data Scientist at The New York Times. He has been a TV host for National Geographic, which promoted a game show that used data to create interactive gameplay. DataKind’s global presence allows it to target a range of global issues and respond more effectively through its various offices. He wants to promote the use of data not just to inform trivial decisions but also to actually improve the world we live in.

4. Christopher Fabian

Figure 4: Passionate from the beginning about the potential of data

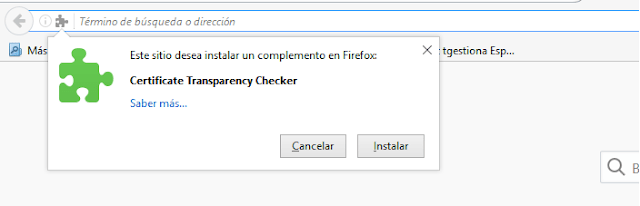

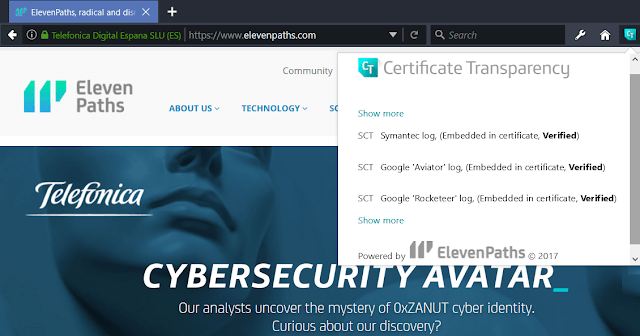

ElevenPaths creates an addon to make Firefox compatible with Certificate Transparency

ElevenPaths 27 March, 2017

Checking the SCT embedded in our certificates

Certificate Transparency is a new layer of security on top of the TLS ecosystem. Sponsored by Google, it basically requires all issued certificates to be logged (on special servers), so an attacker who wants to create a rogue one faces a dilemma: if the rogue certificate is not logged, that will raise some eyebrows; if it is logged, it will be detected faster.

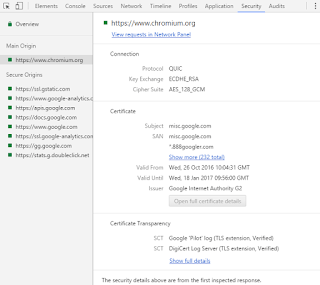

A certificate is considered “logged” if it has an SCT (Signed Certificate Timestamp). The SCT is given to the owner of the certificate when it is logged, and the browser has to verify that it is genuine and current. This is exactly what Chrome has been doing for a while now. Now Firefox, thanks to this plugin, is able to check the SCT for certificates. But there is good news and bad news:

This is how Chrome checks the SCT

The good news

Our addon, created in cooperation with our lab in Buenos Aires, works with most known logs. This means that no matter which log the SCT comes from, we can check it, because we have included the public key and address of basically all known logs so far:

Google ‘Pilot’, Google ‘Aviator’, DigiCert Log Server, Google ‘Rocketeer’, Certly.IO, Izenpe, Symantec, Venafi, WoSign, WoSign ctlog, Symantec VEGA, CNNIC CT, Wang Shengnan GDCA, Google ‘Submariner’, Izenpe 2nd, StartCom CT, Google ‘Skydiver’, Google ‘Icarus’, GDCA, Google ‘Daedalus’, PuChuangSiDa, Venafi Gen2 CT, Symantec SIRIUS and DigiCert CT2.

This makes our solution quite complete but…

The bad news

An SCT may be delivered in three different ways:

- Embedded in the certificate.

- As a TLS extension.

- In OCSP.

From a plugin’s technical perspective, it is not easy to reach the TLS or OCSP extension layers and check the SCT there. So, for now, our plugin checks only for SCTs embedded in the certificate itself. Although not ideal, this is the most common scenario, since most certificates distribute their SCTs embedded.
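To make the embedded case more concrete, here is a minimal Python sketch of reading a list of SCTs and matching each one to a known log. It is not the addon's code. It assumes the SCT list bytes have already been extracted from the certificate extension, follows the binary layout defined in RFC 6962, and uses hypothetical names (`parse_sct`, `parse_sct_list`, `build_log_index`, `identify_log`) and a made-up log key. It does not verify the SCT signature, which a real check would also need to do.

```python
import hashlib
import struct
from datetime import datetime, timezone


def parse_sct(sct: bytes) -> dict:
    """Parse one TLS-encoded v1 SCT (layout from RFC 6962, section 3.2)."""
    version = sct[0]                      # 0 means v1
    log_id = sct[1:33]                    # SHA-256 hash of the log's public key
    (timestamp_ms,) = struct.unpack_from(">Q", sct, 33)
    (ext_len,) = struct.unpack_from(">H", sct, 41)
    pos = 43 + ext_len                    # skip any extensions
    hash_alg, sig_alg, sig_len = struct.unpack_from(">BBH", sct, pos)
    signature = sct[pos + 4:pos + 4 + sig_len]
    if len(signature) != sig_len:
        raise ValueError("truncated SCT signature")
    return {
        "version": version,
        "log_id": log_id,
        "timestamp": datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc),
        "hash_alg": hash_alg,
        "sig_alg": sig_alg,
        "signature": signature,
    }


def parse_sct_list(data: bytes) -> list:
    """Parse a SignedCertificateTimestampList: 2-byte length, then length-prefixed SCTs."""
    (total_len,) = struct.unpack_from(">H", data, 0)
    if total_len != len(data) - 2:
        raise ValueError("SCT list length does not match the data")
    scts, pos = [], 2
    while pos < len(data):
        (sct_len,) = struct.unpack_from(">H", data, pos)
        pos += 2
        scts.append(parse_sct(data[pos:pos + sct_len]))
        pos += sct_len
    return scts


def build_log_index(known_logs: dict) -> dict:
    """Map log ID (SHA-256 of the DER-encoded public key) to the log's name."""
    return {hashlib.sha256(key).digest(): name for name, key in known_logs.items()}


def identify_log(sct: dict, log_index: dict):
    """Return the name of the log that issued this SCT, or None if unknown."""
    return log_index.get(sct["log_id"])


if __name__ == "__main__":
    # Hypothetical log key and hand-built SCT, for illustration only.
    demo_key = b"hypothetical-log-public-key"
    demo_sct = (
        bytes([0])                                # version v1
        + hashlib.sha256(demo_key).digest()       # log ID
        + struct.pack(">Q", 1490572800000)        # timestamp in milliseconds
        + struct.pack(">H", 0)                    # no extensions
        + bytes([4, 3])                           # hash and signature algorithm IDs
        + struct.pack(">H", 4) + b"\x01\x02\x03\x04"  # placeholder signature
    )
    demo_list = struct.pack(">H", len(demo_sct) + 2) + struct.pack(">H", len(demo_sct)) + demo_sct
    index = build_log_index({"Demo Log": demo_key})
    for sct in parse_sct_list(demo_list):
        print(identify_log(sct, index), sct["timestamp"].isoformat())
```

Keeping a table of log public keys, as the addon does for the logs listed above, is what makes the lookup by log ID possible. The same keys would then be used to verify each signature.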

The other piece of bad news is that plugins have to be validated by Mozilla before they can be published in its addons store. Once uploaded, a plugin joins a queue. If it contains “complex code”, it may stay there longer so that Mozilla can do a better job of reviewing its security and quality. After waiting for more than two months, we have decided not to wait any longer. The queue seems to have been stuck for days and there is no hope of speeding it up. Mozilla reviewers are working as hard as they can, but they cannot deal with so many addons as fast as they would like to. We thank them anyway. That is why we have decided to distribute the addon outside the addons store. Once it has been reviewed and released, we will let you know.

The addon is available from here.

To install it, just drag and drop the file into a new tab.

Or, from the extensions menu, settings, install from a file.

When Big Data meets art

AI of Things 24 March, 2017

In this world of ours, it is normal to feel overwhelmed by the amount of information, facts and data we encounter every day, and the quantity we generate every second continues to grow exponentially. However, perhaps the problem isn’t actually the amount we have to digest, but rather the way in which we digest that information. Maybe we are expecting the world to follow this frenetic rhythm of data consumerism without taking into account what the human brain is actually capable of processing.

Nevertheless, we shouldn’t lose hope. There are many people out there thinking about how this problem can be solved. And the answer is not necessarily what you might be thinking. What if we present data in a way that people really engage with? Because, after all, let’s not forget that in this cold and empirical world, emotions make it easier to establish bonds with people, and art is a key way to forge these emotional connections.

Connecting Big Data with art cannot be done by Data Scientists or artists flying solo; it requires a unique and potentially beautiful partnership between the two.

This new trend came about with movements such as net.art, the first group of artists that used the Internet for their creations. But who is leading the new Big Data art revolution right now? Here are four examples of artists and projects that use Big Data:

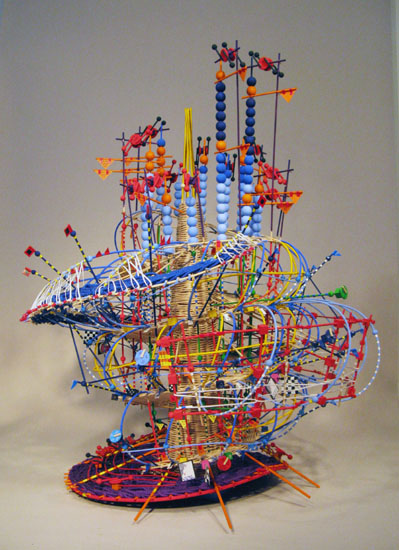

1. Nathalie Miebach

Nathalie Miebach is the woman who inspired this blogpost. She has had a unique career path in which she blends art and science: she has studied art in great detail, but she has also taken courses in physics and astronomy. The result of this combination of studies is quite remarkable. For ten years she has been creating beautiful sculptures that represent weather data. How? She measures data herself with basic instruments and checks that information against weather data from the internet. She then combines this data, chooses two or three variables and starts to translate them into a sculpture, which has a basket base and in which each bead and coloured thread represents an element. An example of the result can be seen below:

Nathalie also converts this “data visualization-art work” into a musical score as well. She works with musicians in the translation of this data into musical notes and composes a musical piece.

Her artistic statement is a powerful one: Nathalie challenges how the visualization of science can be approached. At first, the sculpture can be viewed as a data visualization. But if placed in a museum, it could be seen as a piece of art. If it were placed in a concert hall, then it would also be a musical piece. In summary, with her art work you can see, feel and hear science, which is extremely well explained in this TED Talk and her portfolio.

2. Maotik

Mathieu Le Sourd (also known as Maotik) uses more technical tools to create innovative art that represents data visualizations. The Montréal-based artist is well known for his impressive art installations.

FLOW is the name of the beautiful installation that represents real-time nature data. Taking into account the moon, temperature, humidity and the position of spectators, this interactive installation offers a sensory experience of weather, shown as waves reflected into the installation. Want to see it in action? Check out the video below:

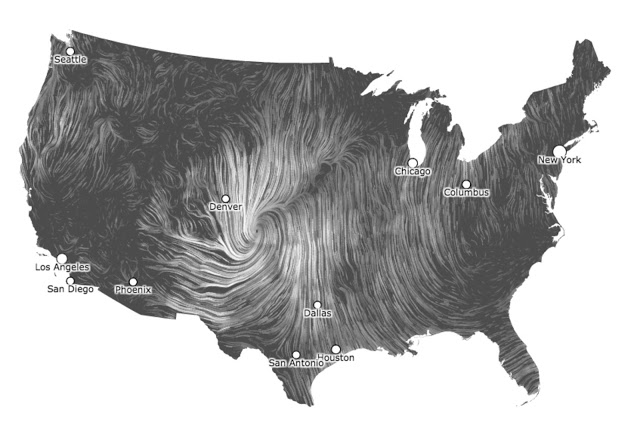

3. Fernanda B. Viegas and Martin Wattenberg

Big Data and art are also being combined by people with technical profiles who create beautiful visualizations. This is the case of Fernanda Viegas, a computational designer whose career has focused on data visualization, specifically on its social, collaborative and artistic aspects. She has done many interesting things, such as the project with IBM in which she created a public visualization tool named Many Eyes. You can see more of her projects on her website. One highlight is this wind map, an amazing visualization of real-time wind data in the US done in collaboration with Martin Wattenberg:

Fernanda and Martin have done many other projects with data together, which you can check out here.

4. Dear Data Project

And last but not least, another example is this unusual visualization in the form of daily mail. The idea was shaped by Giorgia Lupi and Stefanie Posavec, two friends separated by the Atlantic Ocean who sent each other visualizations of their daily data, drawn on postcards and sent by post. This mail chain has been compiled into a book named Dear Data Project, which is full of their beautiful handmade visualizations and is available here. To see the whole idea behind the project, watch the video below:

So fear no more, Big Data friends. There are many ways to engage non-specialized audiences and make science not only interesting but also fun to consume. Art is key in processing all of the information around us, and an opportunity to creatively represent our world. We hope that this discussion of art and Big Data will help you start to rethink the way we view data.

How we’re mapping Climate Change with Big Data

AI of Things 23 March, 2017

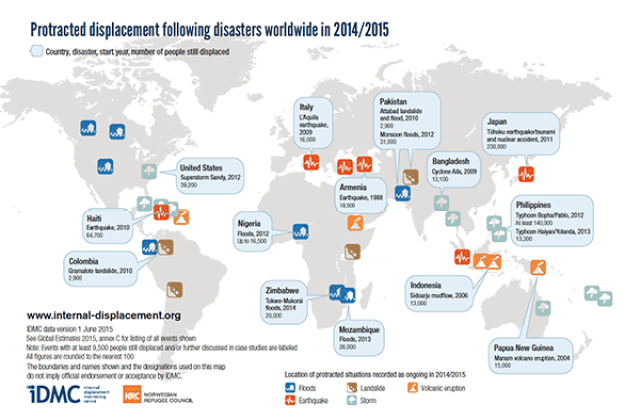

Figure 2: Environmental migration occurs worldwide after natural disasters, but the problem is worsening with climate change.

Environmental migration is nothing new, as it occurs almost every time there is a natural disaster, such as a hurricane or a tsunami. However, the volatility and severity of weather-related incidents are increasing due to climate change. This means that people are being compelled to move more frequently, and the duration of their displacement is usually longer, if they are able to return home at all.

Would you like to know more about this study? Check out the paper here.