Throughout its history, Artificial Intelligence (AI) has experienced ups and downs: periods when it received more attention and resources, and periods of disillusionment and stagnation.

These periods of waning interest in Artificial Intelligence are known as AI winters. Two AI winters have been identified over the past 50 years, and the challenge now is to avoid a third.

Consequences of an AI-winter

During AI winters, disillusionment with Artificial Intelligence turns into widespread disinterest, which in turn leads to less attention and less funding for research and development.

These periods can last for years and bring few significant advances, and the ones that do occur tend to fall short of expectations. However high the enthusiasm that came before, during an AI winter Artificial Intelligence fades from conversation and media coverage.

Currently, Artificial Intelligence is experiencing a “Cambrian explosion” that some describe as hype or even a bubble. Either way, we are in a period of high expectations, the exact opposite of an AI winter. And precisely because this cycle of boom and disillusionment is a known pattern, the inevitable question arises: are we on the verge of a third AI winter?

AI-winters throughout history

Experts have identified two AI winters. Both followed notable advances and moments of excitement among industry, the media, and the public:

- First AI winter, late 1970s and early 1980s: Expectations for Artificial Intelligence were high then, too. However, the advances did not live up to the exaggerated promises popularized by science fiction. As a result, funding for and interest in Artificial Intelligence research and development dropped significantly.

- Second AI winter, late 1980s and early 1990s: After the first AI winter, interest in Artificial Intelligence resurfaced. Once again, expectations exceeded achievements. The lack of significant progress and the gap between expectations and reality led to another period of disillusionment and disinterest.

In 1996, IBM’s supercomputer Deep Blue won a game against the reigning world chess champion, Garry Kasparov, the first time a computer had done so; in 1997 it went on to defeat him in a full match.


Factors that could lead to a ‘Third AI Winter’

Some skeptics do not rule out a third AI winter following the current period of high expectations, mainly because new variables come into play this time, including privacy, security, and ethics.

These variables matter because, this time, Artificial Intelligence is not confined to businesses: it is also proving useful to the general public, as with digital assistants and Generative Artificial Intelligence, two forms of AI that end users can access directly.

The widespread applicability of Artificial Intelligence should make the next AI winter less likely, but some factors could still contribute to one. The first of them is fear.

- Fear: As Artificial Intelligence becomes more advanced and capable, concerns about its impact on society grow and spread through the news: fear of uncontrolled AI, job losses, invasion of privacy, lack of transparency… and the ever-present dystopian scenario.

These fears can breed mistrust of and skepticism toward the technology. But other factors could also lead to a third AI winter:

- Restrictive legislation around Artificial Intelligence: Excessively restrictive or ill-conceived regulations could hinder research and development of Artificial Intelligence, limiting innovation and progress.

- Scarcity of high-quality data: Artificial Intelligence relies on large amounts of data to train and “teach” its algorithms. If relevant, high-quality data is lacking in certain domains, or if the data does not reflect demographic and social differences, it becomes harder to develop reliable Artificial Intelligence models.

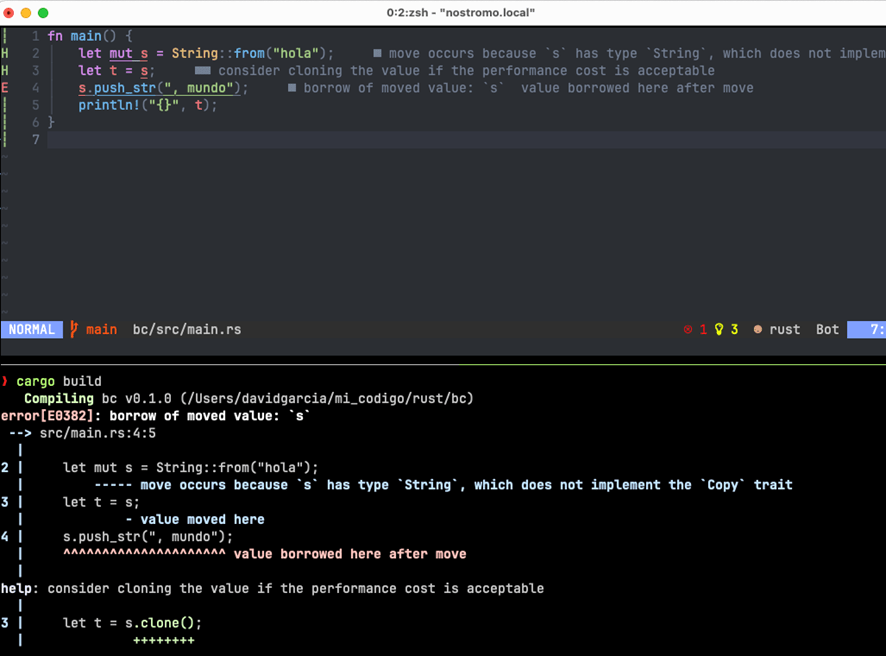

- Technical limitations: Limited computational power, poor energy efficiency, lack of scalability of algorithms, or technical phenomena such as hallucinations in Artificial Intelligence could slow down its progress.

Keys to avoid AI-winter

- Balanced legislation: Regulations should address legitimate concerns around Artificial Intelligence (including those related to privacy, security, and non-discrimination) without hindering its development and potential benefits. Collaboration between lawmakers, AI experts, and the industry is essential to achieving this balance.

- Support education and technological advancements: Driving significant technological advances requires investment in research and development, which in turn means fostering academic research and collaboration between industry and institutions. Educating children and young students is also critical.

- Promote trustworthy Artificial Intelligence: It is essential to address concerns about privacy, security, and social impact. Ethics, transparency, and explainability, along with responsibility and proper governance, are indispensable principles for avoiding an AI winter.

Although predicting the next AI winter is difficult, it is necessary to learn from the past and take measures to maintain sustainable progress in Artificial Intelligence.

Only in this way can we avoid a third AI winter and harness the full potential of the progress offered by this technology.

Featured image generated with Bing.