Digital transformation brings us new opportunities: it is changing our lives, both in the way we communicate and in the way we produce.

Digitalisation of the industrial fabric in particular is key to creating new business opportunities, enhancing competitiveness and efficiency, and guaranteeing the sustainability of the industry.

We can already see how companies in the industrial sector and their processes are transforming at great speed to adapt to the needs of this new digital revolution. All of this is possible thanks to technologies such as the Internet of Things, Big Data, Artificial Intelligence and Blockchain.

Automation and productivity improvement

Automation in Industry 4.0 continues to gain relevance, as it allows routine and repetitive processes to be carried out more quickly, comfortably and efficiently, thus increasing productivity significantly.

One of the keys to achieving this improvement in productivity is the monitoring and control of processes, a task that machines can now carry out in real time and with very few errors. This enables end-to-end control of the production process and facilitates the integration and interoperability of the different equipment and sensors.

Such control also makes it possible to identify the links in the chain with low productivity, so that they can be modified as soon as possible, avoiding lost profits or investment in unsuitable elements.
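As a rough illustration of how low-productivity links might be spotted from monitoring data, the sketch below averages the throughput reported by each stage of a line and returns the slowest one. The `StageReading` type, the `find_bottleneck` function, the stage names and the figures are all hypothetical and chosen only for this example; a real plant would read these values from its own monitoring system.

```python
from dataclasses import dataclass


@dataclass
class StageReading:
    """One throughput sample from a production stage (hypothetical schema)."""
    stage: str
    units_per_hour: float


def find_bottleneck(readings):
    """Return (slowest_stage, average_throughput_by_stage)."""
    if not readings:
        raise ValueError("at least one reading is required")

    # Accumulate the sum and count of samples per stage.
    totals = {}
    for reading in readings:
        total, count = totals.get(reading.stage, (0.0, 0))
        totals[reading.stage] = (total + reading.units_per_hour, count + 1)

    # Average throughput per stage.
    averages = {stage: total / count for stage, (total, count) in totals.items()}

    # The stage with the lowest average is the candidate bottleneck.
    slowest = min(averages, key=averages.get)
    return slowest, averages


# Illustrative data only.
sample = [
    StageReading("cutting", 120.0),
    StageReading("cutting", 100.0),
    StageReading("welding", 80.0),
    StageReading("welding", 60.0),
    StageReading("packing", 150.0),
]
slowest_stage, stage_averages = find_bottleneck(sample)
```

A simple average is used here for readability; in practice the comparison would probably account for planned stops, product mix and the rated capacity of each stage.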

In addition to these advantages, the automation and robotisation of processes shorten production and error detection times, improve product quality and reduce production and maintenance costs.

Connectivity

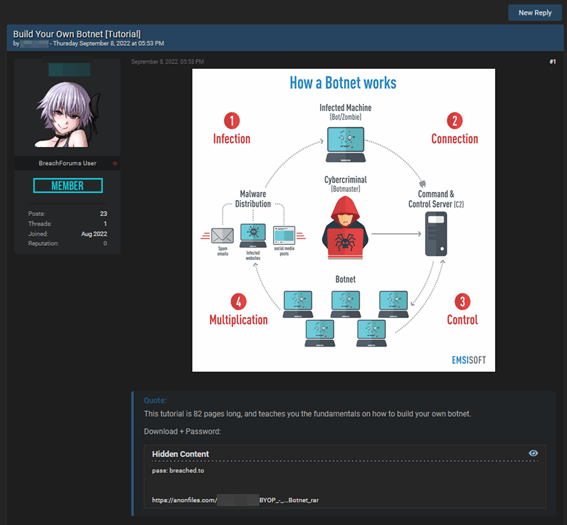

In this digital revolution, where a multitude of devices will be interconnected in real time for instant decision making, it is vital to have good connectivity in factories.

5G networks are the wireless connectivity solution that connects all components of the industrial sector, optimising internal processes, in environments such as manufacturing, mining, ports and airports, petrochemicals, and logistics.

These networks open the door to more efficient, flexible and autonomous production plants. They make production processes more mobile and flexible, eliminating wiring and enabling production lines to adapt quickly to orders.

Today, there are 5G solutions that offer dedicated mobile connectivity in the industrial environment, with synergistic technologies on the same infrastructure enabling both public and private connections. IIoTNs (Industrial IoT Networks, that is, LTE or 5G networks) facilitate the paradigm shift that Industry 4.0 is bringing to its production chains, which are moving from static to virtual and 100% configurable in real time according to demand.

Data analytics and new business models

All the information generated in Industry 4.0 processes can be studied to improve productivity. This is possible thanks to Big Data analytics, which can provide reports covering not only productivity but also the probability of success, points for improvement and modifications to make in real time during production.

Enabling advanced analytics in the industrial environment comes through the creation of an integrated operations environment that facilitates the collection and analysis of data from different processes with Big Data, Machine Learning and AI techniques.

The rapid response and planning enabled by Big Data tools make it possible to carry out predictive actions in the industrial environment, such as predictive maintenance of machines and equipment, which reduces their downtime, extends their useful life, lowers maintenance costs, cuts the waste of manufactured products and reduces the environmental impact.
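To give a feel for what a predictive action can look like in its simplest form, the sketch below flags sensor readings that deviate strongly from the recent history of the same sensor, a common first step before scheduling an inspection. It is a minimal example under stated assumptions: the function name `flag_anomalies`, the window size, the threshold and the vibration values are hypothetical, and real predictive maintenance usually relies on richer models than a rolling mean and standard deviation.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=5, threshold=3.0):
    """Return the indices of readings that deviate from the mean of the
    previous `window` readings by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history gives no scale to compare against,
            # so this sketch skips the point rather than guessing.
            continue
        if abs(values[i] - mu) > threshold * sigma:
            flagged.append(i)
    return flagged


# Illustrative vibration readings (arbitrary units) with one clear spike.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.51, 1.20, 0.50]
suspect_indices = flag_anomalies(vibration)
```

With this sample data only the spike at index 6 is flagged. Note that the spike then inflates the statistics of the following windows, which is one reason production systems tend to use more robust measures.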

In short, the digitalisation of the industrial environment allows the factories of the future to work more efficiently, with better results in terms of quality and availability and, above all, with greater flexibility. This flexibility allows them to adapt to changes in demand and to the growing trend towards customised products, which will in turn enable manufacturers to respond better and even to develop new business models focused on the end client and on sustainability.

Industrial and Energy Symbiosis

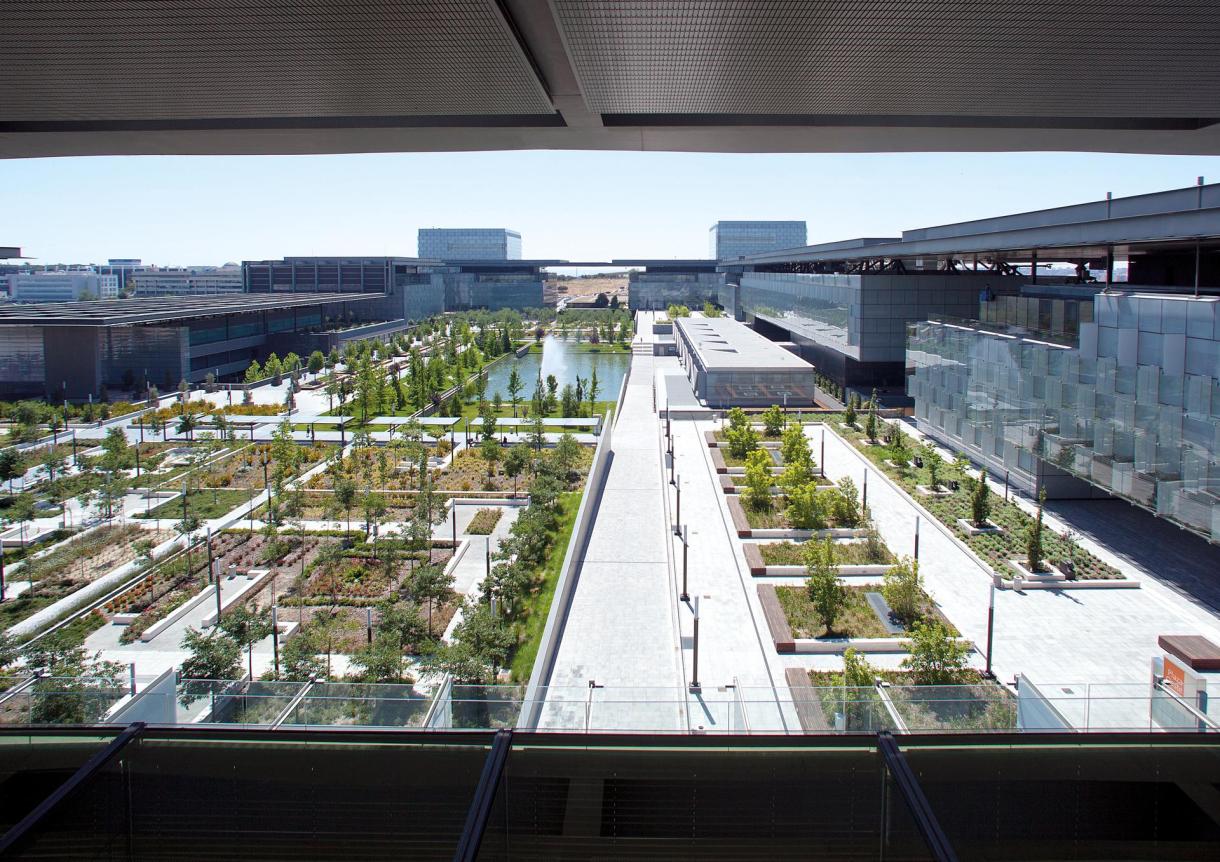

We are currently facing a global climate emergency where reducing greenhouse gas emissions into the atmosphere is a major challenge, a task in which we must all be involved.

Industries seek to become more sustainable and to reduce the environmental impact of every stage of the value chain, across their production, logistics and commercial processes. To do so, the industrial environment needs technological developments aimed at making these processes more efficient and at optimising energy consumption in all factories and companies’ facilities.

This can be achieved through more digitalised and automated solutions, using connectivity, sensorisation, Big Data, Machine Learning and AI technologies that enable factories to work more efficiently in terms of both production and energy. Here are some examples: reducing machine and equipment downtime through predictive maintenance; reducing wastage; optimising the routes of vehicles used in internal and external logistics and in fleet management; and deploying modular, scalable and adaptable energy efficiency solutions that control and manage the energy consumption of the facilities.

It is therefore very important to maintain this symbiosis between the industrial environment and energy efficiency. More efficient industrial, distribution, logistics and commercial processes and assets can substantially increase not only the savings in production and maintenance costs but also the energy savings of factories and facilities, improving sustainability and care for the environment.

In conclusion, we highlight the fundamental role that each of these areas plays in industry:

- Automation in Industry 4.0 to improve productivity.

- 5G networks as enablers for efficient connectivity in factories.

- Analytical tools enabling predictive actions.

- Reducing environmental impact through technological developments.

Today, more than ever, we can say that the world is moving in a clear direction: digitalisation and sustainability.