We are already familiar with ATT&CK, the MITRE Corporation project. It is a de facto standard that helps us characterise threats based on the techniques and tools used by cybercriminals, which is essential when planning defences or modelling threats. It is based on the military-rooted concept of TTPs: tactics, techniques and procedures. Ultimately, it aims to model the various actors: who they are, how they operate and why. With this information, it is possible to simulate attacks realistically, improve the detection of malicious activity on the network, and so on. But today we will talk about D3FEND.

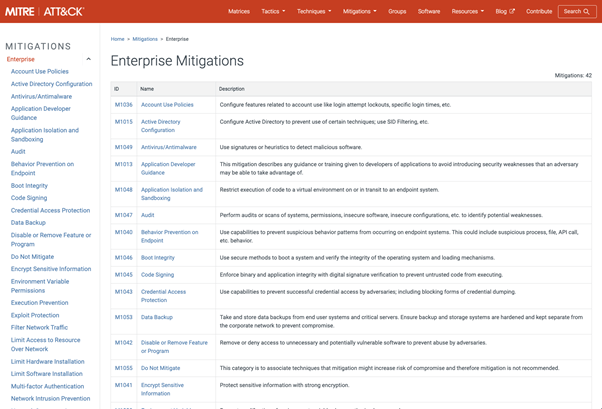

Although ATT&CK has a section on mitigations, the way they were arranged did not provide a common naming scheme that would make it easy to relate them to one another. Basically, they were brief defensive notes, from a couple of lines to a paragraph in length, with no consistency between them.

Seeing this need, MITRE itself embarked on a project (currently in beta) to categorise defensive capabilities and countermeasures and to build a common language for them. D3FEND was born with this premise in mind: to create a knowledge base, in the form of an ontology, that includes all countermeasures and capabilities. Moreover, it does not detach itself from ATT&CK; it maps onto it, extending the section on mitigations.

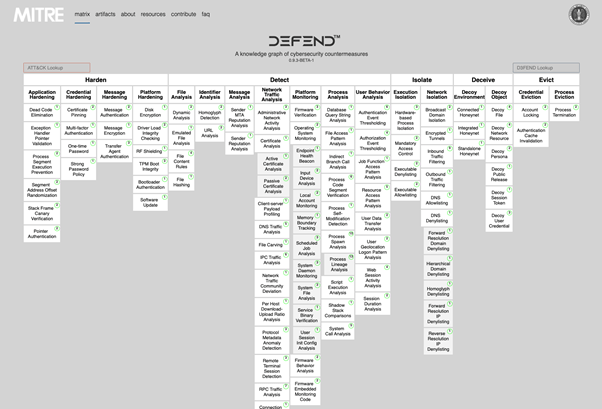

The framework is presented as a matrix (like the ATT&CK matrices). Here we can see the general classification: Harden, Detect, Isolate, Deceive and Evict. These are distinct defensive fundamentals, each developed across several columns.

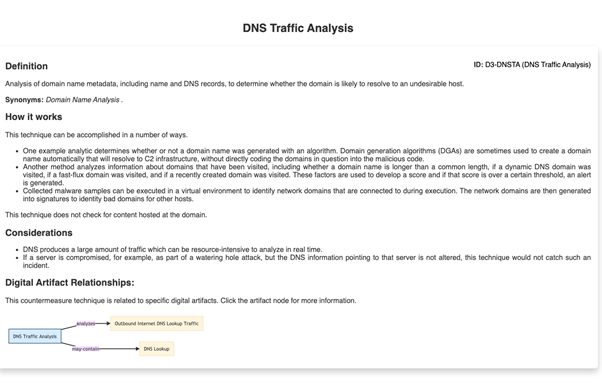

For example, detecting malware by analysing DNS traffic is extremely common, whether on the surrounding network or during the detonation of a sample or executable. In D3FEND, this falls under the Detect area and the Network Traffic Analysis sub-area. Within it we find the item “DNS Traffic Analysis”, which contains a complete definition, its relationships with other artefacts of the framework and (this is especially useful) the corresponding mapping to ATT&CK.

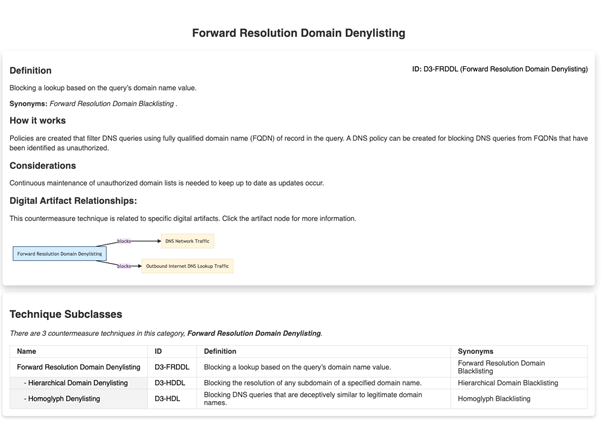

Once we obtain the definition and relationships with ATT&CK, we can expand the relationships with the rest of the artefacts by clicking on the graph in the Digital Artifact Relationships section:

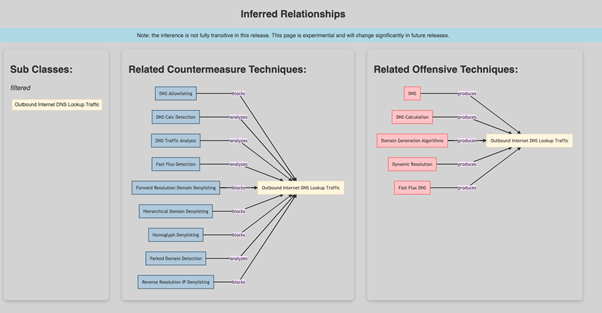

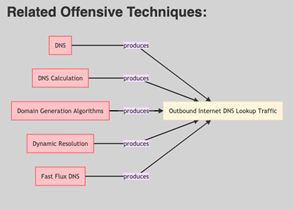

Here we can see, in practical terms, the relationships both with other defensive techniques and with the ATT&CK framework, which is where the graph is particularly useful. For example, if we zoom in on the image, we can see that the offensive techniques described in ATT&CK that produce outgoing DNS traffic are grouped and identified.

The point is not to adopt countermeasures for every technique identified, but to recognise that all of these techniques derive from a single concept, Outbound Internet DNS Lookup Traffic; in other words, performing a DNS lookup.

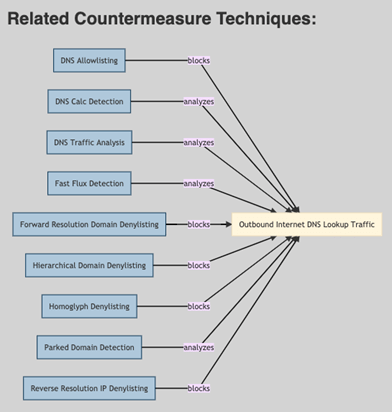

This is where we should place our emphasis: on the countermeasures that make it easier to adopt controls and defences or, correspondingly, on the defensive side of the tree.

What do we do to detect and prevent the resolution of malicious domains? That question would be answered by D3FEND here, at this point.
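The idea of pivoting from an offensive technique to its countermeasures through a shared artefact can be sketched in a few lines of Python. This is only an illustration of the reasoning, not an export of the D3FEND ontology: the triples below are a small hand-written sample, the relation names are assumptions, and `countermeasures_for` is a hypothetical helper.

```python
# Illustrative sample only: hand-written (technique, relation, artefact)
# triples, NOT an authoritative export of ATT&CK or D3FEND.
ARTEFACT = "Outbound Internet DNS Lookup Traffic"

OFFENSIVE = [
    ("Application Layer Protocol: DNS", "produces", ARTEFACT),
    ("Domain Generation Algorithms", "produces", ARTEFACT),
]

DEFENSIVE = [
    ("DNS Traffic Analysis", "analyzes", ARTEFACT),
    ("DNS Denylisting", "blocks", ARTEFACT),
]


def countermeasures_for(attack_technique):
    """Return defensive techniques that touch any artefact
    the given offensive technique is related to (hypothetical helper)."""
    artefacts = {a for t, _, a in OFFENSIVE if t == attack_technique}
    return sorted({d for d, _, a in DEFENSIVE if a in artefacts})


if __name__ == "__main__":
    for name in countermeasures_for("Domain Generation Algorithms"):
        print(name)
```

The design choice worth noting is that the offensive and defensive sides never reference each other directly; they only meet at the artefact, which is why covering one artefact covers every technique that produces it.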

For example, one of the countermeasures is the classic list of blocked domains, which is usually populated from a feed of domains classified as malicious or belonging to similar categories: spam, porn, gambling, etc.
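As a minimal sketch of how such a list might be consulted before a name is resolved, the following Python checks a queried domain and each of its parent domains against a set of blocked entries. The function names and the sample entries (reserved `.example` names) are assumptions made for illustration; a real deployment would load the set from whatever feed is in use.

```python
def normalize(domain):
    """Lower-case the name and drop surrounding whitespace and the trailing root dot."""
    return domain.strip().rstrip(".").lower()


def is_blocked(domain, blocklist):
    """Return True if the domain, or any parent domain, is in the blocklist."""
    labels = normalize(domain).split(".")
    # Check "a.b.c", then "b.c", then "c", so subdomains of a blocked
    # domain are caught without matching unrelated names that merely
    # share a suffix string.
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocklist:
            return True
    return False


# Hypothetical entries standing in for a real feed.
BLOCKLIST = {"malicious.example", "spam.example"}

if __name__ == "__main__":
    for name in ("cdn.malicious.example.", "example.org"):
        print(name, is_blocked(name, BLOCKLIST))
```

Matching on whole labels rather than raw string suffixes means `notmalicious.example` is not blocked just because it ends in the same characters as `malicious.example`.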

As we can see, defending is an art, and here is the science that classifies, documents and interrelates every concept, giving us a tactical view and helping us in the arduous task of building walls and digging trenches.

D3FEND is in beta, as we have already mentioned, and much of its content has yet to be detailed and expanded. Nevertheless, we can see that it has potential and, above all, that it complements its twin, ATT&CK, very well.