
Figure 1: Could artificial intelligence be a game changer in the world of online dating?


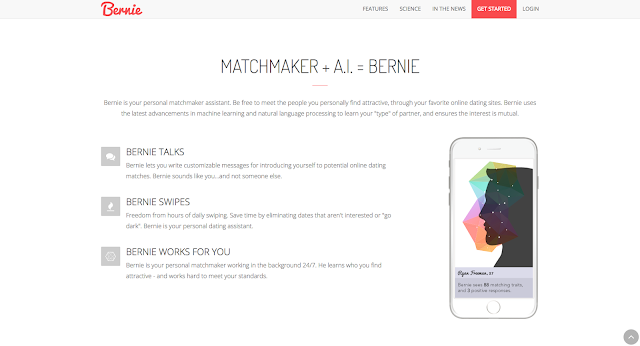

Figure 2: An overview of Bernie’s features in the online dating world.


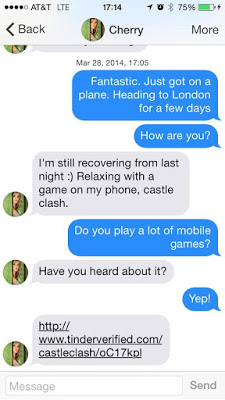

Figure 3: An example of a Tinder bot tricking a user into visiting a gaming website.