When malware is analyzed, it is common to study its network traffic to better understand how it communicates with an external server, what information it downloads, and what commands it receives or sends. This kind of malware usually communicates over HTTP or HTTPS, and the tools needed to actually “see” that traffic (by acting as a man in the middle) are well known. However, more sophisticated malware may use its own protocol encapsulated in SSL and may even check the server’s certificate: if it cannot reach a server that presents a specific certificate embedded in its code, it refuses to establish the connection. Making this kind of malware easier to analyze was the motivation for creating this tool, but it may also be useful in other scenarios, for instance:

- Analyzing and modifying network protocols.

- Application firewall (thanks to “on the fly” filters that it supports).

- A tool for post-exploitation and for creating backdoors (injecting malware through the network protocol of a given application).
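The certificate check mentioned above is usually called certificate pinning. The following Python sketch shows why a man-in-the-middle proxy fails against it: the proxy can only present its own certificate, whose fingerprint never matches the one embedded in the sample. The byte strings stand in for real DER-encoded certificates and, like the names used, are assumptions for illustration only.

```python
import hashlib

# Hypothetical stand-ins for DER-encoded certificates (real ones are binary ASN.1).
REAL_SERVER_CERT = b"DER bytes of the real server certificate"
PROXY_CERT = b"DER bytes of the analyst's MITM proxy certificate"

# A pinning sample would embed a fingerprint like this one in its code.
PINNED_SHA256 = hashlib.sha256(REAL_SERVER_CERT).hexdigest()


def fingerprint(der_cert: bytes) -> str:
    """Return the SHA-256 fingerprint of a certificate as a hex string."""
    return hashlib.sha256(der_cert).hexdigest()


def accept_peer(der_cert: bytes) -> bool:
    """Accept the connection only if the peer presents the pinned certificate."""
    return fingerprint(der_cert) == PINNED_SHA256


print(accept_peer(REAL_SERVER_CERT))  # True: the genuine server passes
print(accept_peer(PROXY_CERT))        # False: the proxy's certificate is rejected
```

This is the motivation for observing the data inside the process instead of on the wire.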

API Hooking

Different techniques can be used to hook a call. The most common are “IAT Hooking” and “Inline Hooking”; HookMe uses the latter. Inline hooking consists of modifying the code of the function to be hooked so that, before the original code runs, execution jumps to another portion of code. The modification is an unconditional jump (JMP) pointing to another memory address, where the hook’s own actions are implemented.
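A minimal sketch of the inline-hook patch follows, written in Python for readability. It simulates process memory with a `bytearray` (an assumption: a real hooking engine writes into the target process after changing page protections). It encodes the 5-byte `JMP rel32`, saves the overwritten prologue bytes, and builds a trampoline that runs those saved bytes and then jumps back, so the original function can still execute after the hook. The hook and trampoline addresses are hypothetical.

```python
import struct

JMP_REL32 = 0xE9  # x86 opcode for a near JMP with a 32-bit relative displacement
JMP_SIZE = 5      # 1 opcode byte + 4 displacement bytes


def encode_jmp(src: int, dst: int) -> bytes:
    """Encode a 'JMP dst' instruction located at address src."""
    # The displacement is relative to the address of the *next* instruction.
    rel = (dst - (src + JMP_SIZE)) & 0xFFFFFFFF
    return bytes([JMP_REL32]) + struct.pack("<I", rel)


def install_inline_hook(mem: bytearray, base: int, func_addr: int, hook_addr: int) -> bytes:
    """Overwrite the first JMP_SIZE bytes of a function with a JMP to the hook.

    `mem` simulates process memory starting at address `base`.
    Returns the overwritten bytes so they can be copied into a trampoline.
    """
    off = func_addr - base
    saved = bytes(mem[off:off + JMP_SIZE])
    mem[off:off + JMP_SIZE] = encode_jmp(func_addr, hook_addr)
    return saved


def build_trampoline(saved: bytes, tramp_addr: int, func_addr: int) -> bytes:
    """Re-run the saved instructions, then jump back into the original function."""
    resume_at = func_addr + len(saved)
    jmp_at = tramp_addr + len(saved)
    return saved + encode_jmp(jmp_at, resume_at)


# Typical prologue: MOV EDI,EDI / PUSH EBP / MOV EBP,ESP = exactly 5 bytes.
SEND_ADDR = 0x71A34C27   # address of send() taken from this article's example
HOOK_ADDR = 0x10000000   # hypothetical address of the hook code
TRAMP_ADDR = 0x10001000  # hypothetical address of the trampoline
mem = bytearray(bytes.fromhex("8BFF558BEC") + b"\x90" * 11)
saved = install_inline_hook(mem, SEND_ADDR, SEND_ADDR, HOOK_ADDR)
trampoline = build_trampoline(saved, TRAMP_ADDR, SEND_ADDR)
print(mem[:JMP_SIZE].hex(), trampoline.hex())
```

The sketch works only because the first three instructions happen to fill exactly five bytes; a real engine must disassemble the prologue to find instruction boundaries and fix up any relative instructions it relocates into the trampoline.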

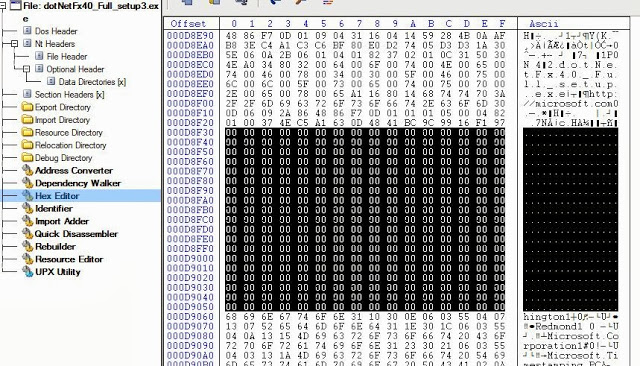

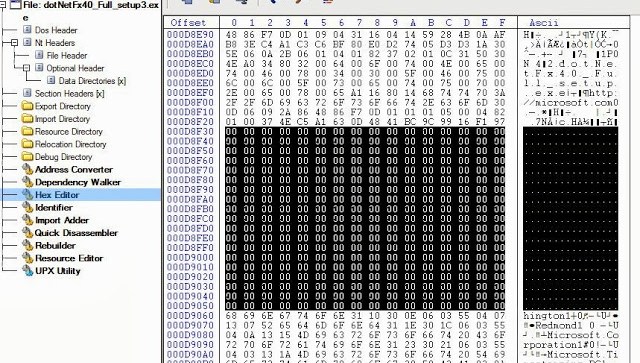

The following figures show the code of the “send” API in WS2_32.dll before and after it is hooked.

“Send” function code before and after adding the hook

As observed, the function “send” starts at memory address 0x71A34C27 with a MOV EDI,EDI instruction. This instruction is replaced by an unconditional jump (JMP) to address 0x0576000, where the hook’s code is implemented. To avoid errors caused by functions being located at different addresses in different operating systems or versions, the program relies on the well-known Nektra Deviare2 DLL.
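The before/after comparison can be automated. This hedged Python sketch classifies the first bytes of a function: `MOV EDI,EDI` (`8B FF`) means the entry point is untouched, while `E9` followed by a 32-bit displacement is a JMP whose target is computed relative to the next instruction. Only these two patterns are recognized; hooks written with other encodings (for example a short jump or `PUSH`/`RET`) are reported as unrecognized.

```python
import struct


def describe_prologue(addr: int, code: bytes) -> str:
    """Report whether a function entry point looks inline-hooked."""
    if code[:2] == b"\x8b\xff":  # MOV EDI,EDI: the usual untouched entry point
        return f"{addr:#010x}: MOV EDI,EDI (not hooked)"
    if len(code) >= 5 and code[0] == 0xE9:  # JMP rel32 written by an inline hook
        rel = struct.unpack("<i", code[1:5])[0]  # signed 32-bit displacement
        target = (addr + 5 + rel) & 0xFFFFFFFF   # relative to the next instruction
        return f"{addr:#010x}: JMP {target:#010x} (inline hook)"
    return f"{addr:#010x}: unrecognized prologue"


print(describe_prologue(0x71A34C27, bytes.fromhex("8BFF558BEC")))
# 0x71a34c27: MOV EDI,EDI (not hooked)
```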

HookMe hooks the following APIs:

- send (WS2_32.dll)

- sendto (WS2_32.dll)

- recv (WS2_32.dll)

- recvfrom (WS2_32.dll)

- WSASend (WS2_32.dll)

- WSARecv (WS2_32.dll)

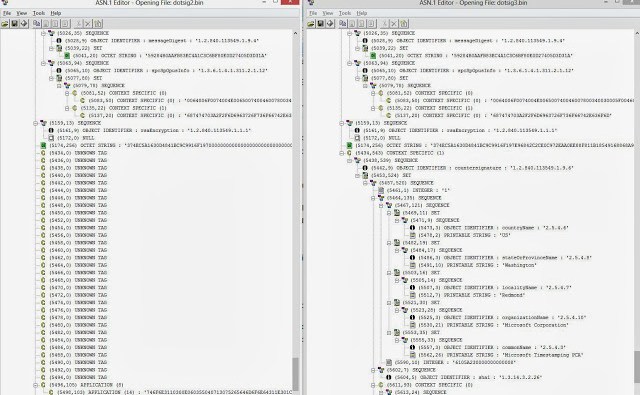

- EncryptMessage (Secur32.dll)

- DecryptMessage (Secur32.dll)
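These eight APIs can be captured as a small lookup table. The `outgoing` flag and the moment at which each buffer should be inspected are our own reading of how these functions behave (an outgoing buffer already holds its data when the function is entered, an incoming one is only filled when the function returns); the `HookedApi` type is hypothetical, not HookMe’s internal representation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HookedApi:
    dll: str
    name: str
    outgoing: bool  # True if the buffer carries data leaving the application


HOOKED_APIS = [
    HookedApi("WS2_32.dll", "send", True),
    HookedApi("WS2_32.dll", "sendto", True),
    HookedApi("WS2_32.dll", "recv", False),
    HookedApi("WS2_32.dll", "recvfrom", False),
    HookedApi("WS2_32.dll", "WSASend", True),
    HookedApi("WS2_32.dll", "WSARecv", False),  # with overlapped I/O, data arrives on completion
    HookedApi("Secur32.dll", "EncryptMessage", True),   # encrypts its buffers in place
    HookedApi("Secur32.dll", "DecryptMessage", False),  # decrypts its buffers in place
]


def inspect_point(api: HookedApi) -> str:
    """Outgoing data is complete on entry; incoming data only once the call returns."""
    return "before the call" if api.outgoing else "after the call returns"


for api in HOOKED_APIS:
    print(f"{api.dll:<12} {api.name:<15} inspect {inspect_point(api)}")
```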

The first six send and receive data over the network in different ways. The other two (EncryptMessage and DecryptMessage) serve a different purpose: applications use them to encrypt and decrypt data easily, with support for different cryptographic algorithms. HookMe hooks them to access clear-text data directly, even when that data is going to be sent over a secure channel such as SSL. By intercepting these calls, HookMe lets the user see and modify the input and output of these functions, including the clear text of SSL connections that rely on Windows’ own SSL implementation (Schannel, accessed through SSPI). This is possible because data is intercepted just before it is encrypted and just after it is decrypted.
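Why hooking EncryptMessage and DecryptMessage exposes clear text can be shown with plain Python wrappers. In this sketch, `toy_encrypt` and `toy_decrypt` are hypothetical stand-ins (a one-byte XOR, not SSL and not the real SSPI signatures). `hook_before` runs a callback on the input before the original function, and `hook_after` runs it on the output after the original returns, mirroring the two interception points described above.

```python
from typing import Callable, List

Transform = Callable[[bytes], bytes]


def toy_encrypt(plaintext: bytes) -> bytes:
    """Hypothetical stand-in for EncryptMessage: one-byte XOR, NOT real SSL."""
    return bytes(b ^ 0x5A for b in plaintext)


def toy_decrypt(ciphertext: bytes) -> bytes:
    """Hypothetical stand-in for DecryptMessage (XOR undoes itself)."""
    return bytes(b ^ 0x5A for b in ciphertext)


def hook_before(original: Transform, callback: Transform) -> Transform:
    """Callback sees (and may replace) the input before the original runs."""
    def hooked(data: bytes) -> bytes:
        return original(callback(data))
    return hooked


def hook_after(original: Transform, callback: Transform) -> Transform:
    """Callback sees (and may replace) the output after the original returns."""
    def hooked(data: bytes) -> bytes:
        return callback(original(data))
    return hooked


seen: List[bytes] = []


def log_clear_text(data: bytes) -> bytes:
    seen.append(data)  # clear text is visible at this point
    return data        # pass it through unchanged


encrypt = hook_before(toy_encrypt, log_clear_text)
decrypt = hook_after(toy_decrypt, log_clear_text)

on_the_wire = encrypt(b"USER root")  # only ciphertext would reach the network
decrypt(on_the_wire)
print(seen)  # [b'USER root', b'USER root']
```

Because the callback's return value is passed on, the same wrappers also allow modifying the data, not just reading it.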

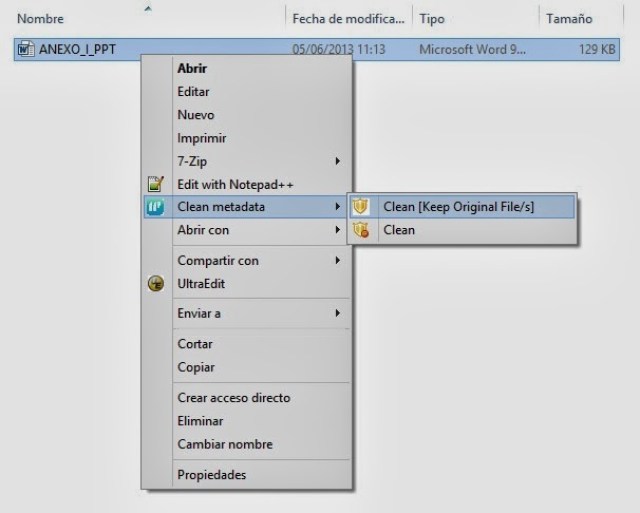

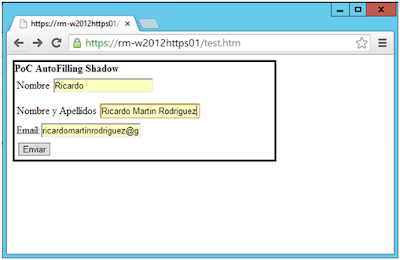

Once a call is hooked, the user interface lets you either intercept its invocations or “let them go”, using the Intercepting is ON / Intercepting is OFF button. When a call is intercepted, the program shows its content both in hexadecimal and as text (ANSI).
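A hex-plus-text view like the one in the interception window can be sketched in a few lines of Python. As a simplifying assumption, only printable ASCII bytes are shown as characters and everything else as `.`; a true ANSI view would decode bytes with the system code page (for example Windows-1252).

```python
def hexdump(data: bytes, width: int = 16) -> str:
    """Render bytes as 'offset  hex bytes  text', `width` bytes per row."""
    rows = []
    for off in range(0, len(data), width):
        chunk = data[off:off + width]
        hex_part = " ".join(f"{b:02X}" for b in chunk)
        # Printable ASCII is shown as-is; control and high bytes become '.'.
        text = "".join(chr(b) if 0x20 <= b < 0x7F else "." for b in chunk)
        rows.append(f"{off:08X}  {hex_part:<{width * 3 - 1}}  {text}")
    return "\n".join(rows)


print(hexdump(b"\x03SELECT 'hello'\x00\x01\x02"))
```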

From the interception window, the content can be modified before it is passed to the API, or before data is returned to the application (when the API returns something). In the screenshot above, the traffic of HeidiSQL (a Windows graphical client for MySQL) is being intercepted, showing the exact authentication packet exchanged between client and server. The user name ‘root’ appears at offset 0x24, followed at offset 0x29 by the length-prefixed hash of the password that was entered.
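Both offsets follow from the layout of the MySQL client/server protocol’s HandshakeResponse41 packet, assuming the capture includes the 4-byte packet header (which is consistent with the 0x24 offset): 4 bytes of header, 4 bytes of capability flags, 4 bytes of maximum packet size, 1 byte of character set and 23 reserved bytes place the NUL-terminated user name at 36 (0x24), and after the 5 bytes of `root\0` the length-prefixed auth response begins at 0x29. The Python sketch below parses such a packet; the sample it builds uses hypothetical flag values and a zero-filled 20-byte hash, not captured data.

```python
import struct


def parse_handshake_response(packet: bytes) -> dict:
    """Pull the user name and auth response out of a MySQL HandshakeResponse41.

    Assumes `packet` starts with the 4-byte packet header and that the auth
    response is shorter than 251 bytes, so its length fits in a single byte.
    """
    payload_len = int.from_bytes(packet[0:3], "little")  # 3-byte payload length
    seq_id = packet[3]                                     # 1-byte sequence id
    caps, max_packet, charset = struct.unpack_from("<IIB", packet, 4)
    user_off = 4 + 4 + 4 + 1 + 23  # header, capabilities, max packet, charset, filler = 0x24
    user_end = packet.index(b"\x00", user_off)  # the user name is NUL-terminated
    auth_off = user_end + 1                      # 0x29 when the user is "root"
    auth_len = packet[auth_off]
    return {
        "payload_len": payload_len,
        "seq_id": seq_id,
        "capabilities": caps,
        "max_packet": max_packet,
        "charset": charset,
        "user": packet[user_off:user_end].decode("ascii"),
        "user_offset": user_off,
        "auth_offset": auth_off,
        "auth_response": packet[auth_off + 1:auth_off + 1 + auth_len],
    }


# Hypothetical sample: made-up capability flags and a zero-filled 20-byte hash.
payload = (struct.pack("<IIB", 0x000FA68D, 16 * 1024 * 1024, 33)
           + bytes(23) + b"root\x00" + bytes([20]) + bytes(20))
packet = len(payload).to_bytes(3, "little") + b"\x01" + payload
info = parse_handshake_response(packet)
print(hex(info["user_offset"]), info["user"], hex(info["auth_offset"]))  # 0x24 root 0x29
```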

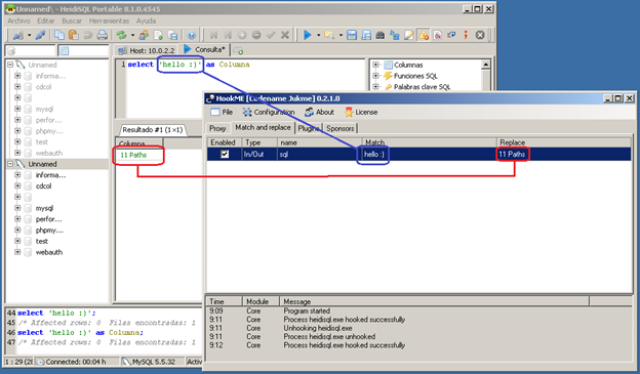

Once the replacement rule is applied, the figure below shows how the SQL statement SELECT ‘hello :)’ is received as the answer to the “11 Paths” string. With this kind of replacement, application firewalls could be implemented that filter out parameters received over the network that could pose a risk to the application.
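Replacement rules and on-the-fly filters of this kind can be sketched in Python as byte-level substitutions over an intercepted buffer. The functions below are hypothetical illustrations, not HookMe’s rule engine. Requiring each replacement to keep the original length is a deliberate choice in this sketch: protocols such as MySQL carry explicit length fields, so changing the size of a buffer without fixing those fields would corrupt the stream.

```python
from typing import Iterable, Tuple


def apply_rules(data: bytes, rules: Iterable[Tuple[bytes, bytes]]) -> bytes:
    """Apply find/replace rules to an intercepted buffer."""
    for find, replace in rules:
        # Same-length replacements keep protocol length fields valid.
        if len(find) != len(replace):
            raise ValueError(f"rule {find!r} -> {replace!r} changes the buffer length")
        data = data.replace(find, replace)
    return data


def firewall_allows(data: bytes, blocked: Iterable[bytes]) -> bool:
    """Return False if the buffer contains any blocked pattern."""
    return not any(pattern in data for pattern in blocked)


print(apply_rules(b"SELECT 'abc'", [(b"abc", b"xyz")]))  # b"SELECT 'xyz'"
print(firewall_allows(b"DROP TABLE users", [b"DROP "]))  # False
```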

An important feature is that HookMe supports plugins written in Python. Plugins can serve different goals, such as saving communications to a file, modifying traffic or certificates, or implementing application firewalls. The following screenshot shows the interface where plugins are loaded, along with a simple Python interface built into the application itself.
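As an illustration of the first use case, saving communications to a file, here is a Python sketch of what such a plugin could look like. The class name, the `on_data(api_name, data)` callback and its return-value convention are hypothetical, chosen only for this example; HookMe’s actual plugin interface may differ.

```python
import os
import tempfile
from datetime import datetime, timezone


class SaveTrafficPlugin:
    """Hypothetical plugin that appends every intercepted buffer to a log file."""

    def __init__(self, path: str) -> None:
        self.path = path

    def on_data(self, api_name: str, data: bytes) -> bytes:
        stamp = datetime.now(timezone.utc).isoformat()
        with open(self.path, "a", encoding="ascii") as log:
            # One line per call: timestamp, hooked API, buffer as hex.
            log.write(f"{stamp} {api_name} {data.hex()}\n")
        return data  # returning the buffer unchanged leaves the traffic untouched


log_path = os.path.join(tempfile.mkdtemp(), "traffic.log")
plugin = SaveTrafficPlugin(log_path)
plugin.on_data("send", b"hello")
with open(log_path, encoding="ascii") as f:
    print(f.read().strip())
```

Returning the (possibly modified) buffer is what would let the same interface cover the traffic-modifying and firewall use cases.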