Banking trojan R&D: 64-bit versions and use of TOR

Florence Broderick 8 January, 2014

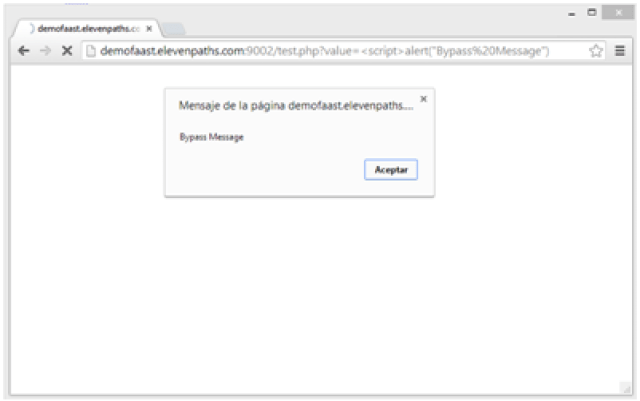

- It injects itself into the browser, so it can modify what the user actually sees on the screen: it injects new fields or messages, modifies the page's behavior, or sends the relevant data to the attacker.

- Even when it is not injecting anything, it captures all outgoing HTTPS traffic and sends it to the attacker.

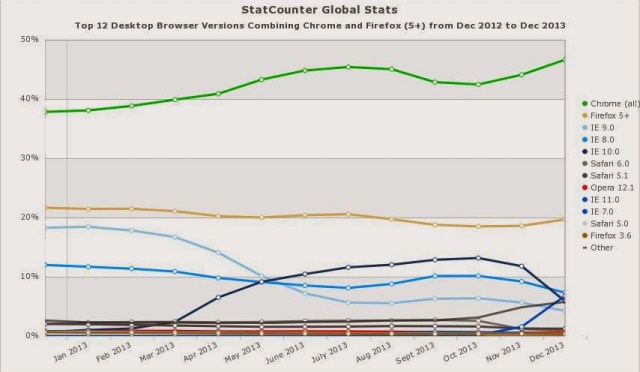

Browser usage. The 64-bit version of Chrome for Windows is still under development, and the 64-bit version of Firefox for Windows was even cancelled for a while.

One of the weak points (and, in a way, advantages) of ZeuS, and of malware in general these days, is that it depends heavily on external servers. Once these servers are down, the trojan becomes mostly useless. To solve this, attackers have so far used dynamic domains, random domain generators, fast flux DNS, bulletproof hosting… All these techniques work, but they are expensive. Using TOR and .onion domains gives them "inexpensive" resilience. Shutting down these servers will be very difficult for the good guys, and the attacker will find them easier to maintain than any other "resilient" infrastructure used so far.
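To see why .onion addresses are hard to take down, it helps to look at how the legacy (version 2) hidden-service scheme in use at the time derives them. The name is computed from the service's own public key, so there is no registrar or DNS record to seize. The Python sketch below assumes the v2 rule: take the first 80 bits of the SHA-1 hash of the DER-encoded RSA public key and base32-encode them. Tor has since moved to longer v3 addresses. The input bytes in the example are a placeholder, not a real key.

```python
import base64
import hashlib


def onion_v2_address(der_public_key: bytes) -> str:
    """Derive a legacy (v2) .onion hostname from a DER-encoded RSA public key.

    v2 scheme: SHA-1 over the DER-encoded key, keep the first 80 bits
    (10 bytes), base32-encode them (exactly 16 characters) and lowercase.
    """
    digest = hashlib.sha1(der_public_key).digest()
    label = base64.b32encode(digest[:10]).decode("ascii").lower()
    return label + ".onion"


if __name__ == "__main__":
    # Placeholder bytes, NOT a real key: only shows the shape of the result.
    print(onion_v2_address(b"placeholder-der-encoded-public-key"))
```

Because the address is just a function of the key, only whoever holds the private key can run the service. Nobody else can point the name elsewhere or take it over.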

Accessing (and hacking) Windows Phone registry

Florence Broderick 30 December, 2013

Dependencies

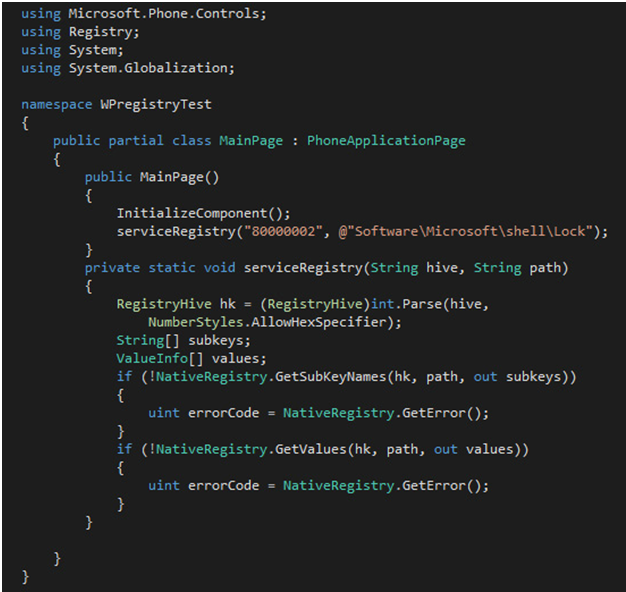

A real example

- 80000000 -> HKEY_CLASSES_ROOT

- 80000001 -> HKEY_CURRENT_USER

- 80000002 -> HKEY_LOCAL_MACHINE

- 80000003 -> HKEY_USERS

- 80000004 -> HKEY_PERFORMANCE_DATA

- 80000005 -> HKEY_CURRENT_CONFIG
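When these handle values show up in disassembled or decompiled code, a small lookup table makes them readable. Below is a minimal Python sketch. It assumes the handles appear either as integers or as hexadecimal strings like the ones above. `hive_name` is a hypothetical helper, not part of any Windows Phone API.

```python
# Predefined registry root handles, as listed above.
PREDEFINED_HKEYS = {
    0x80000000: "HKEY_CLASSES_ROOT",
    0x80000001: "HKEY_CURRENT_USER",
    0x80000002: "HKEY_LOCAL_MACHINE",
    0x80000003: "HKEY_USERS",
    0x80000004: "HKEY_PERFORMANCE_DATA",
    0x80000005: "HKEY_CURRENT_CONFIG",
}


def hive_name(handle) -> str:
    """Return the hive name for a predefined handle given as int or hex string."""
    # int(..., 16) accepts both "80000002" and "0x80000002".
    value = int(handle, 16) if isinstance(handle, str) else int(handle)
    try:
        return PREDEFINED_HKEYS[value]
    except KeyError:
        raise ValueError(f"not a predefined root key handle: {value:#010x}") from None
```

For example, `hive_name("80000002")` gives `"HKEY_LOCAL_MACHINE"`.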

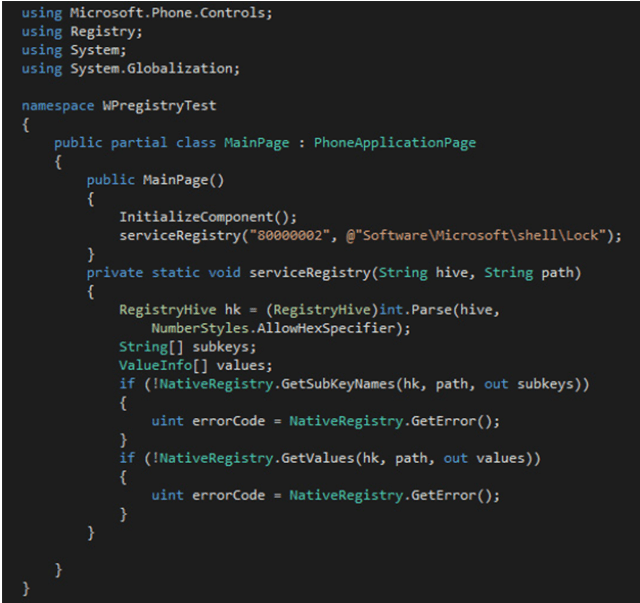

Example code for accessing the registry in Windows Phone 8

For some highly sensitive registry locations, or to write or create keys, you need to add special Capabilities to your app. This requires an interop-unlock, which so far has only been achieved on Samsung devices by taking advantage of Samsung's "Diagnosis tool".

FOCA Final Version, the ultimate FOCA

Florence Broderick 16 December, 2013

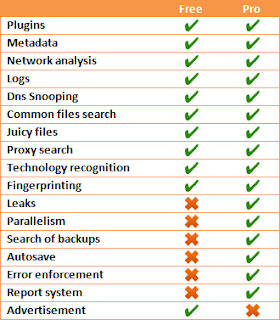

FOCA Free vs. FOCA Pro

FOCA Final Version

Latch, new ElevenPaths' service

Florence Broderick 12 December, 2013

We think that users do not use their digital services (online banking, email, social networks…) 24 hours a day. Why, then, would you let an attacker try to access them at any time? Latch is a technology that gives users full control of their online identity, as well as better security for service providers.

Latch, take control of when it’s possible to access your digital services.

Latch's approach is different. Preventing authentication credentials from ending up in the wrong hands is very difficult. However, users can take control of their digital services and reduce the time they are exposed to attacks. "Turn off" your access to email, credit cards, online transactions… when they're not being used. Block them even if the passwords are known. Latch lets the user decide when his accounts or certain services can be used. Not the provider and, of course, not the attacker.

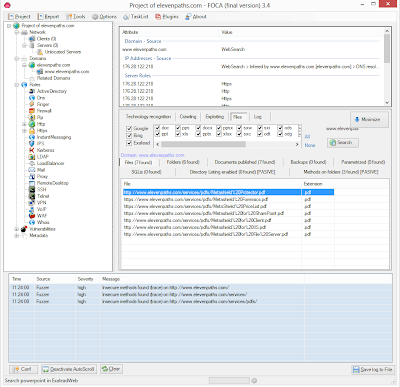

Latch’s scheme

Latch's general work scheme

How it works in practice

- Create a Latch user account. The user will use this account to configure the state of the operations (switching his service accounts ON or OFF).

- Pair the usual account at the service provider that the user wants to control (an email account or a blog, for example). This step lets Latch synchronize with the service provider and return the appropriate responses (defined by the user) depending on which operation is being attempted. The service provider must, of course, be compatible with Latch. Because pairing is up to the user, users decide whether to use Latch or not: Latch is offered, not imposed.
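The two steps above boil down to a simple rule on the service provider's side: valid credentials are necessary but no longer sufficient. The following Python model is a hypothetical sketch of that logic only. The class and method names, and the choice that newly paired services start as ON, are assumptions for illustration, not Latch's actual API or SDK.

```python
from dataclasses import dataclass, field


@dataclass
class LatchAccount:
    """Hypothetical in-memory model of a Latch-style user account (not the real Latch API)."""

    # pairing id -> True if the user has the service switched ON
    latches: dict = field(default_factory=dict)

    def pair(self, pairing_id: str) -> None:
        # Assumption: a newly paired service starts switched ON.
        self.latches[pairing_id] = True

    def set_state(self, pairing_id: str, on: bool) -> None:
        if pairing_id not in self.latches:
            raise KeyError(f"service {pairing_id!r} is not paired")
        self.latches[pairing_id] = on

    def is_on(self, pairing_id: str) -> bool:
        # Unpaired services are not controlled by Latch, so they stay usable.
        return self.latches.get(pairing_id, True)


def login(password_ok: bool, account: LatchAccount, pairing_id: str) -> bool:
    """Service-provider side: the password must be right AND the latch must be ON."""
    return password_ok and account.is_on(pairing_id)
```

With the email service switched OFF, `login(True, account, "mail")` returns `False`. Knowing the password is no longer enough.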

Latch for the service providers

Integration is easy and straightforward. It gives service providers a great opportunity to improve the security they offer their users and, therefore, the protection of their users' online identity.

Latch offers more ways to protect users, their credentials, online services and online identities. We will introduce them soon. Stay tuned.

EmetRules: The tool to create "Pin Rules" in EMET

Florence Broderick 6 December, 2013

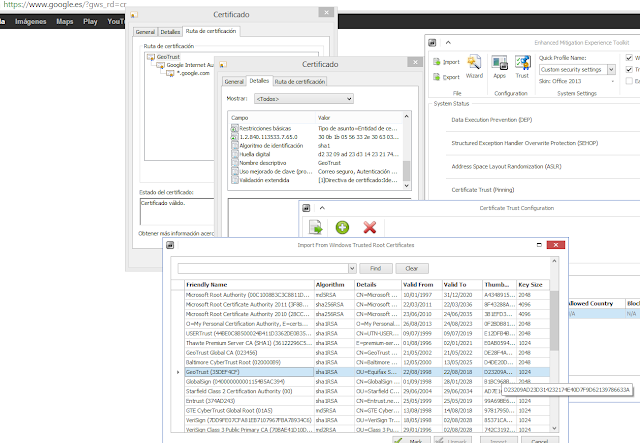

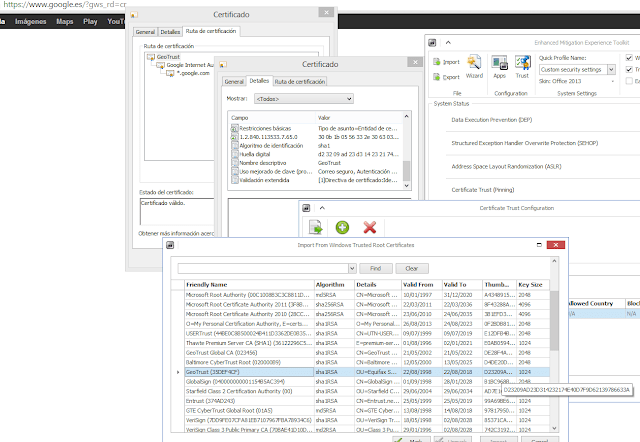

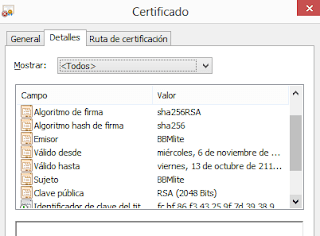

- Check the certificate used by the domain

- Check its root certificate

- Check its thumbprint

- Create the rule, locating the certificate in the store

- Pin the domain with its rule

Steps are summarized in this figure:

It is quite a tedious process, even more so if you want to pin a large number of domains at once. At Eleven Paths we have studied how EMET works and created EmetRules, a small command line tool that completes all the work in just one step. It also supports batch work. It connects to the given domain or list of domains on port 443, extracts the SubjectKey from the root certificate, validates the certificate chain, creates the rule in EMET and pins it to the domain. All in one step.
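The certificate-checking part of those steps can be approximated with Python's standard library. This is a sketch under assumptions, not EmetRules' code. It validates the chain against the local trust store and computes a Windows-style SHA-1 thumbprint, but only of the server's own (leaf) certificate, because that is what `getpeercert(binary_form=True)` returns. Locating the root certificate and its SubjectKey, as EmetRules does, is not reproduced here. `fetch_leaf_thumbprint` and `sha1_thumbprint` are hypothetical names.

```python
import hashlib
import socket
import ssl


def sha1_thumbprint(der_cert: bytes) -> str:
    """Windows-style certificate thumbprint: SHA-1 over the DER encoding, uppercase hex."""
    return hashlib.sha1(der_cert).hexdigest().upper()


def fetch_leaf_thumbprint(domain: str, timeout: float = 5.0) -> str:
    """Connect to domain:443, validate the chain and hostname against the
    system trust store, and return the thumbprint of the server (leaf) certificate."""
    context = ssl.create_default_context()  # verifies chain and hostname
    with socket.create_connection((domain, 443), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=domain) as tls:
            der = tls.getpeercert(binary_form=True)
    return sha1_thumbprint(der)
```

If validation fails, `wrap_socket` raises `ssl.SSLCertVerificationError`. A batch tool would catch that and record the domain as an error.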

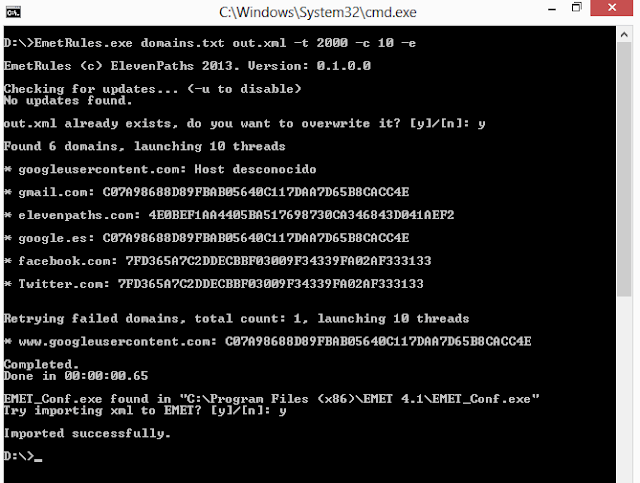

EmetRules by ElevenPaths

The way it works is simple. The tool needs a list of domains and creates the corresponding XML file, ready to be imported into EMET, even from the tool itself (command line).

- "urls.txt": A file containing the domains, one per line (separated by "\n"). Domains may or may not include "www". If they do not, the tool will try both, unless the "-d" option is used (see below).

- "output.xml": The path and filename of the output file where the XML configuration file that EMET needs will be created. If it already exists, the program will ask whether to overwrite it, unless the "-s" option is used (see below).

Options:

- t|timeout=X. Sets the request timeout in milliseconds. Between 500 and 1000 is recommended, but it depends on the number of threads used. 0 (the default) means no timeout; in this case, the program will keep trying the connection until it expires.

- "-s", Silent mode. No output is generated and no questions are asked. When it finishes, it will not ask whether you wish to import the generated XML into EMET.

- "-e", Generates a TXT file named "error.txt" listing the domains that produced errors during connection. This list can be used again as input for the program.

- "-d". Disables double checking, that is, trying to connect to both the main domain and the "www" subdomain. If a domain in "urls.txt" already includes "www", only that one is connected to. If not, both are tried. With this option, only the domain as written is used.

- c|concurrency=X. Sets the number of threads the program runs with. Eight are recommended. By default, only one is used.

- "-u". Every time the program runs, it contacts central servers to check for a new version. This option disables that check.
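The input handling described above can be sketched in Python, in particular how each line of "urls.txt" expands into one or two hosts depending on "-d". The parser below is a hypothetical re-creation of the documented options, not EmetRules' actual command line implementation. The long option names `--timeout` and `--concurrency` and all destination names are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical re-creation of the documented options (not EmetRules' code)."""
    p = argparse.ArgumentParser(prog="emetrules-sketch")
    p.add_argument("urls_file")
    p.add_argument("output_xml")
    p.add_argument("-t", "--timeout", type=int, default=0, help="ms; 0 = no timeout")
    p.add_argument("-s", action="store_true", dest="silent")
    p.add_argument("-e", action="store_true", dest="write_errors")
    p.add_argument("-d", action="store_true", dest="no_double_check")
    p.add_argument("-c", "--concurrency", type=int, default=1)
    p.add_argument("-u", action="store_true", dest="no_update_check")
    return p


def hosts_to_check(domain: str, double_check: bool = True) -> list:
    """Expand one line of urls.txt into the host names to connect to."""
    domain = domain.strip().lower()
    if not domain:
        return []
    # A "www" entry, or double checking disabled: use the domain as written.
    if domain.startswith("www.") or not double_check:
        return [domain]
    return [domain, "www." + domain]


def read_domains(text: str, double_check: bool = True) -> list:
    """Expand a whole urls.txt (one domain per line), dropping duplicates."""
    hosts = []
    for line in text.split("\n"):
        for host in hosts_to_check(line, double_check):
            if host not in hosts:
                hosts.append(host)
    return hosts
```

For example, `read_domains("a.com\nwww.b.com")` yields `["a.com", "www.a.com", "www.b.com"]`. With `double_check=False` it yields only `["a.com", "www.b.com"]`.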

December 12th: Innovation Day at Eleven Paths

Florence Broderick 29 November, 2013

On December 12th, 2013, in Madrid, Eleven Paths will make its public debut at an event we have named Innovation Day. At this event, Eleven Paths will present old and new services, along with some surprises. Registration through this website is required to attend.

Eleven Paths started working inside Telefónica Digital six months ago. After a great deal of hard work, it is time to show part of the effort we have put in during this time. Besides Eleven Paths, Telefónica de España and Telefónica Digital's Security Vertical (Vertical de Seguridad de Telefónica Digital) will also present their products and services at this Innovation Day.

We will talk about Telefónica CyberSecurity services, Faast, the MetaShield Protector product family, Saqqara, antiAPT services… and, finally, about a project that has remained secret so far, internally dubbed "Path 2". On December 12th and afterwards, this technology will be revealed step by step. Deploying it during this period has been a real challenge for Eleven Paths, but it is now a reality: it is already integrated in several sites and patented worldwide.

Clients, security professionals and system administrators… all are invited. The event will take place on Thursday, December 12th, in the afternoon (from 16:00) in the Auditorium of the central building of the DistritoTelefónica campus in Madrid. Besides announcing all this exciting technology, there will be live music. Finally, there will be a great party, thanks to all of Telefónica's security partners.

Registration is limited, so a pre-registration form is available. Once it is filled in, a confirmation email will be sent (if places are still available).

The "cryptographic race" between Microsoft and Google

Florence Broderick 21 November, 2013

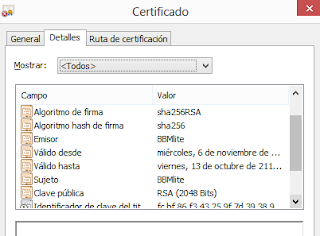

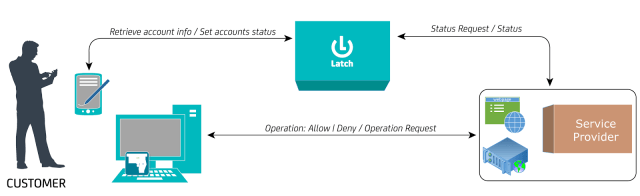

|

| 2048 bits certificate using SHA2 (SHA256). |

|

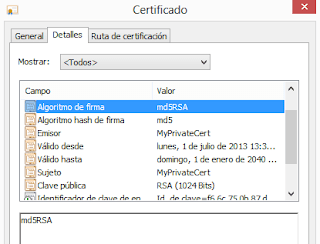

| Selfsigned certificate created in Windows 8 and using MD5 and 1024 bits. |

- BEAST, in 2011. The problem was in CBC mode as used in TLS 1.0; switching to RC4 was the usual workaround, although RC4 turned out to have weaknesses of its own. It was really solved with TLS 1.1 and 1.2, but both sides (server and browser) have to support these versions.

- CRIME: This attack allows an attacker to retrieve cookies if TLS compression is used. Disabling TLS compression solves the problem.

- BREACH: Allows retrieving secrets (such as CSRF tokens) from HTTP responses. It is based on HTTP compression, not TLS compression, so it cannot simply be "disabled" from the browser. You are vulnerable whatever TLS version is being used.

- Lucky 13: Mitigated mainly in software (constant-time implementations), and in TLS 1.2 through AEAD cipher suites such as AES-GCM.

- TIME: An evolution of CRIME. It does not require the attacker to be in the middle, just JavaScript. It is a problem in browsers, not in TLS itself.
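On the client side, several of the attacks above can be sidestepped with configuration alone. Below is a minimal Python sketch using the standard `ssl` module, assuming Python 3.7 or later. It refuses protocols older than TLS 1.2 (BEAST) and keeps TLS compression off (CRIME). It also restricts TLS 1.2 to ECDHE with AES-GCM suites, which avoid the CBC construction Lucky 13 targets. It does nothing against BREACH or TIME, which abuse HTTP-level compression and have to be handled by the web application.

```python
import ssl


def hardened_client_context() -> ssl.SSLContext:
    """Client-side TLS settings that sidestep some of the attacks listed above."""
    ctx = ssl.create_default_context()  # certificate and hostname checks on
    # BEAST (TLS 1.0 CBC issue): refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CRIME: make sure TLS-level compression is never negotiated.
    ctx.options |= ssl.OP_NO_COMPRESSION
    # Lucky 13 targets CBC MAC-then-encrypt: for TLS 1.2, allow only AES-GCM (AEAD) suites.
    # (TLS 1.3 suites are configured separately and are all AEAD.)
    ctx.set_ciphers("ECDHE+AESGCM")
    return ctx
```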

|

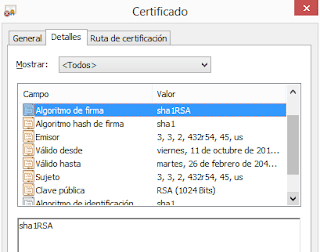

| A very common certificate yet, using SHA1withRSAEncryption and 1024 keys |
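The three certificates shown in this post can be triaged with a couple of rules. Below is a hypothetical Python helper. Its thresholds are assumptions that reflect the context discussed here: MD5 is considered broken, SHA-1 is on its way out, and RSA keys under 2048 bits are too short. It is not an implementation of any vendor's official policy.

```python
# Illustrative thresholds (assumptions, not an official policy).
BROKEN_HASHES = {"md5", "md2"}
DEPRECATED_HASHES = {"sha1"}
MIN_RSA_BITS = 2048


def assess_certificate(signature_hash: str, rsa_key_bits: int) -> list:
    """Return a list of human-readable issues; an empty list means none found."""
    issues = []
    h = signature_hash.lower().replace("-", "")  # "SHA-1" -> "sha1"
    if h in BROKEN_HASHES:
        issues.append(f"{signature_hash}: broken signature hash")
    elif h in DEPRECATED_HASHES:
        issues.append(f"{signature_hash}: deprecated signature hash")
    if rsa_key_bits < MIN_RSA_BITS:
        issues.append(f"{rsa_key_bits}-bit RSA key is below {MIN_RSA_BITS} bits")
    return issues
```

Applied to the captions above, the SHA-256/2048-bit certificate comes back clean. The self-signed MD5/1024-bit one and the SHA-1/1024-bit one each get two issues.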

Fokirtor, a sophisticated? malware for Linux

Florence Broderick 18 November, 2013

Responsible full disclosure… on both sides

Florence Broderick 13 November, 2013

We take these reports seriously and will respond swiftly to fix verifiable security issues. […] Any Cisco Meraki web service that handles reasonably sensitive user data is intended to be in scope. This includes virtually all the content under *.meraki.com. […] It is difficult to provide a definitive list of bugs that will qualify for a reward: any bug that substantially affects the confidentiality or integrity of user data is likely to be in scope for the program.

The information disclosure was reported to them in early November. Two days later, Meraki's response was worse than expected:

I have looked into your report and, unfortunately, this was first reported to us on 9/23/13, with a resolution still pending from our engineers.

This meant they were claiming that a third party had discovered it first and, worse, that they had known about the problem for at least five weeks and still had not fixed it (and still have not). It is simply a matter of removing a page from a server or protecting it with a password.

Other problems and vulnerabilities discovered by our team, much more complex ones, have been fixed in far less time. Problems that can be settled by removing a page are usually fixed practically the same day they are detected.

Allowing for all the differences, other users who have taken part in the bounty programs of Facebook, PayPal (especially clumsy with its program) or Google complain that, on "too many" occasions, they have been told that someone had already alerted the company. One of them even claims that when he asked PayPal for proof that someone had beaten him to it, they never answered. It is even more common to hear that vendors take too long to fix any bug when they are alerted privately.

Background

Setting the introductory anecdote aside, "responsible disclosure" is an old debate, and there are many examples that have periodically reopened it. In short: disclosing a vulnerability, in a way, strikes online crime where it hurts most, devaluing a highly prized asset. Also, making any bug public makes its prevention and detection much more widespread and therefore prevents future attacks. It could even "stop" attacks that were hypothetically already exploiting the flaw before the researcher made it public.

On the negative side, once a flaw is public, other attackers add it to their arsenal and can exploit it to launch new attacks. Then again, mass attacks always come with much more varied and affordable defenses. Finally, it is also true that making flaws public speeds up the vendor's fixing process.

"Full disclosure" does not actually eliminate the impact of a vulnerability; it transforms it. The impact shifts from a hypothetical exclusive use of the flaw on the black market (unnoticed, very dangerous, aimed at very specific and valuable targets where a few attacks yield a high profit) to indiscriminate attacks that, however, are caught to a much greater extent by detection systems.

Other responsibilities

Now that bounty programs are fashionable, many companies have tried to encourage responsible disclosure by paying those who discover vulnerabilities and security problems. But it is worth remembering that this also creates new responsibilities for the affected party. It is very common to say, for example, that the discovery will be credited to whoever writes to the security team first. But how is this proven? How could someone prove that they sent an email describing a flaw or vulnerability before anyone else? Vendors could require PGP-signed messages with a timestamp, or even free services such as eGarante, to send and certify reports, but none seems to explicitly recommend their use. Companies that offer rewards suggest, at best, encrypting communications, but for confidentiality rather than to prove authorship.
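One technical way for a researcher to back up a priority claim, without any special cooperation from the vendor, is a hash commitment. You hash the report together with a random nonce, get that digest timestamped or sent somewhere verifiable, and reveal the report and nonce later. This is a swapped-in illustration, not the PGP or eGarante workflow mentioned above. It only proves when the report existed if the commitment itself carries a trusted timestamp. `commit` and `verify` are hypothetical helper names.

```python
import hashlib
import hmac
import secrets


def commit(report: bytes) -> tuple:
    """Return (commitment, nonce). Publish or timestamp the commitment now;
    keep the report and the nonce private until disclosure."""
    nonce = secrets.token_bytes(32)  # fixed length, so nonce + report is unambiguous
    commitment = hashlib.sha256(nonce + report).hexdigest()
    return commitment, nonce


def verify(commitment: str, report: bytes, nonce: bytes) -> bool:
    """Check later that this report and nonce produce the earlier commitment."""
    expected = hashlib.sha256(nonce + report).hexdigest()
    return hmac.compare_digest(expected, commitment)
```

The random nonce keeps the vendor or anyone else from guessing a short report from its hash before it is revealed.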