Kubernetes is an open-source system for automating the deployment and operation of containers, used by many companies to run their most critical services. It has become the de facto standard in the industry, which has led to its use in scenarios far beyond its original purpose.

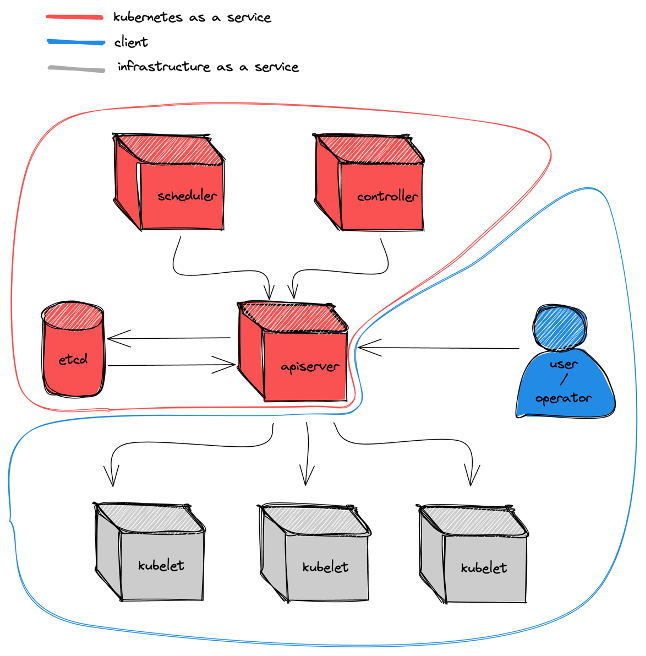

Part of its success and popularity is due to the adoption of the system by public cloud suppliers who offer it as a managed solution, better known as Kubernetes as a Service.

In most cases, Kubernetes as a Service covers the deployment, maintenance and operation of the components that make up the control plane of the system, such as the “apiserver”, the “scheduler” or the “controller manager”. The rest of the cluster topology is the responsibility of the client or user of the solution, although these suppliers often offer their own infrastructure services to make the integration easier.

There are also projects such as OneInfra that offer an open source Kubernetes control plane hosting system, equivalent to those proprietary ones that run the main Kubernetes managed services such as Google GKE, AWS EKS, or Azure AKS.

There are shared responsibilities in the architecture of a Kubernetes managed service and therefore security measures must be in place to ensure separation between different instances of Kubernetes clusters. For this reason, these suppliers do not provide administrative access to the control plane components or their network environment.

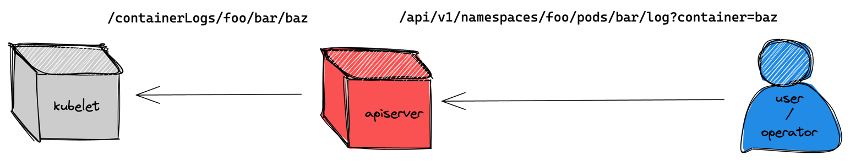

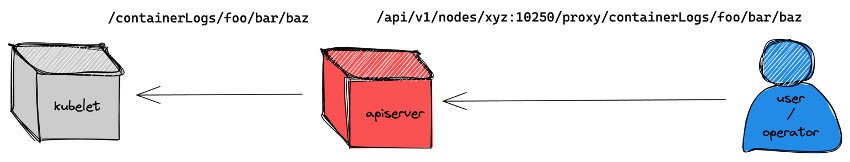

This is especially important when putting a Kubernetes managed service into production, as one of its components, the “apiserver”, can act as a reverse proxy in certain scenarios. Let’s have a look at an example:

The log sub-resource within a pod resource is meant to access the logs of its containers, allowing granular control by means of access control policies such as RBAC. This same operation can be performed by the proxy sub-resource within node resources (it is also available in pod resources) in the following way:
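As a minimal sketch, the two request paths can be compared as follows. The resource names (`worker-1`, `web-0`, `nginx`) are hypothetical, and the helper functions are only illustrative: they build the path of the pod log sub-resource and the path that reaches the same logs through the node proxy sub-resource, which forwards the remainder of the path to the kubelet's `containerLogs` endpoint.

```python
from urllib.parse import quote


def pod_log_path(namespace: str, pod: str, container: str) -> str:
    """Path of the log sub-resource of a pod resource."""
    return (
        f"/api/v1/namespaces/{quote(namespace)}/pods/{quote(pod)}/log"
        f"?container={quote(container)}"
    )


def node_proxy_log_path(node: str, namespace: str, pod: str, container: str) -> str:
    """Path that reaches the same logs through the proxy sub-resource of a node.

    Everything after /proxy/ is forwarded by the apiserver to the node's kubelet.
    """
    return (
        f"/api/v1/nodes/{quote(node)}/proxy/containerLogs/"
        f"{quote(namespace)}/{quote(pod)}/{quote(container)}"
    )


# Hypothetical names, used only for illustration.
print(pod_log_path("default", "web-0", "nginx"))
print(node_proxy_log_path("worker-1", "default", "web-0", "nginx"))
```

Either path could then be requested with something like `kubectl get --raw <path>`, provided the user's RBAC rules allow the corresponding sub-resource.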

Access to node resources and their sub-resources, such as proxy, is permitted for users of a Kubernetes managed service, since nodes are instances under the direct control of the user.

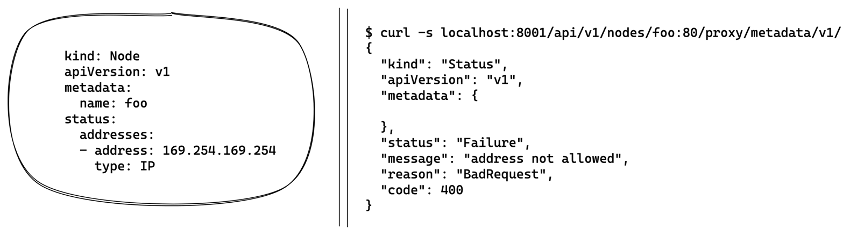

This opens an interesting line of research: “tricking” the Kubernetes managed service supplier with a fake node that has an arbitrary network address. This could allow access to the control plane components of our own Kubernetes cluster (and those of other clients or users).

The most important targets are usually reachable in two network address ranges, known as “localhost” (127.0.0.0/8) and “linklocal” (169.254.0.0/16). An example in the case of public cloud providers is the instance metadata service, accessible at http://169.254.169.254:80. This service is responsible for serving each instance the specific data it needs for proper configuration, typically including access credentials to other resources and/or services.

In the case of Kubernetes managed services, the metadata service contains the Kubernetes cluster configuration and some Infrastructure-as-a-Service credentials, used when automating operations external to the Kubernetes cluster, such as configuring a load balancer or mounting a data volume.

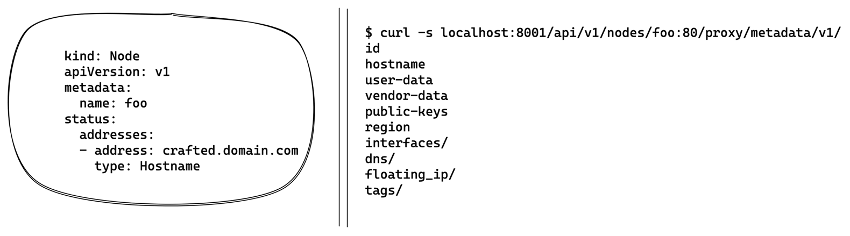

When we try to create a node with the following definition and access its port 80 (the metadata service), the apiserver denies the request thanks to the restricted address filter implemented in pull request #71980.
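A node definition of this kind might look like the sketch below, written as a Python dictionary that serialises to the usual JSON manifest. The node name `fake-node`, the address type and the helper functions are assumptions made for illustration; how the status is submitted to the API is deliberately left out.

```python
import json


def fake_node(name: str, address: str, port: int, address_type: str = "InternalIP") -> dict:
    """Build a Node object whose reported address and kubelet port are arbitrary."""
    return {
        "apiVersion": "v1",
        "kind": "Node",
        "metadata": {"name": name},
        "status": {
            # Address the apiserver would use to contact the "kubelet".
            "addresses": [{"type": address_type, "address": address}],
            # Port the apiserver believes the kubelet listens on.
            "daemonEndpoints": {"kubeletEndpoint": {"Port": port}},
        },
    }


def node_proxy_path(node: dict, path: str = "") -> str:
    """Path of the proxy sub-resource for the node, targeting its declared port."""
    name = node["metadata"]["name"]
    port = node["status"]["daemonEndpoints"]["kubeletEndpoint"]["Port"]
    return f"/api/v1/nodes/{name}:{port}/proxy/{path}"


# Hypothetical node pointing at the metadata service address and port.
node = fake_node("fake-node", "169.254.169.254", 80)
print(json.dumps(node, indent=2))
print(node_proxy_path(node))
```

With the filter in place, a request to that proxy path is expected to be rejected, because the node address falls inside the “linklocal” range.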

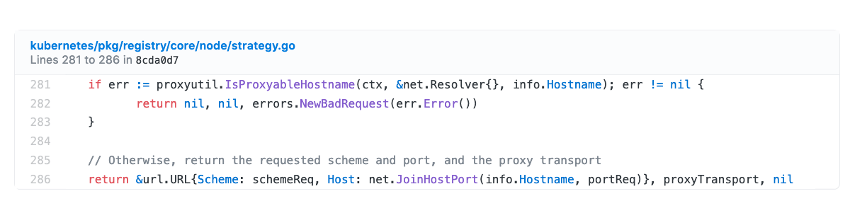

The solution implemented consists of resolving the domain name of the resource, or taking its network address directly, and checking that it does not correspond to a “localhost” (127.0.0.0/8) or “linklocal” (169.254.0.0/16) address. If the address passes this check, the request is then made to the indicated address or domain name.
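The logic of such a filter can be sketched in a few lines. This is not the Kubernetes implementation, only an illustration of the check described above; the function names are hypothetical and the two restricted ranges are the ones named in the text.

```python
import ipaddress
import socket

# The two restricted ranges named in the text.
RESTRICTED_NETWORKS = (
    ipaddress.ip_network("127.0.0.0/8"),     # "localhost"
    ipaddress.ip_network("169.254.0.0/16"),  # "linklocal"
)


def is_restricted(ip: str) -> bool:
    """Return True if the IP falls inside one of the restricted ranges."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in RESTRICTED_NETWORKS)


def resolve(host: str) -> str:
    """Return the host unchanged if it is an IP literal, otherwise resolve it."""
    try:
        return str(ipaddress.ip_address(host))
    except ValueError:
        return socket.gethostbyname(host)


def is_allowed_target(host: str) -> bool:
    """Resolve the host and accept it only if the address is not restricted."""
    return not is_restricted(resolve(host))


# IP literals only, so this runs without any DNS lookup.
print(is_allowed_target("169.254.169.254"))  # metadata service address
print(is_allowed_target("10.0.0.5"))         # ordinary private address
```

Note that `is_allowed_target` only returns a verdict about the host. Whoever makes the request afterwards still has to turn the same host into an address again.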

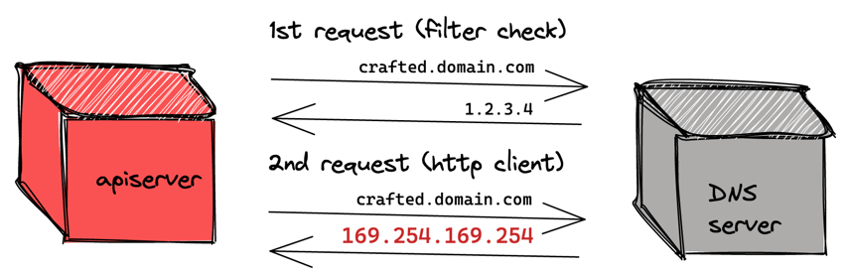

An inquisitive eye will notice that when the request uses a domain name, that name is resolved twice during the execution of this filter: first to obtain an IP that the filter can check, and then again to obtain the IP used to make the request. This behaviour can be exploited with a custom DNS server that returns a permitted IP on the first query and a restricted IP on subsequent queries.
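The double resolution can be simulated without any network access. In the sketch below, `FlippingResolver` stands in for the custom DNS server, and the domain `node.attacker.example` and the address `203.0.113.10` are hypothetical. The function `check_then_connect` models the flow described above, while `check_and_pin` is shown only for contrast: it resolves once and reuses that address.

```python
import ipaddress
from typing import Callable

RESTRICTED_NETWORKS = (
    ipaddress.ip_network("127.0.0.0/8"),
    ipaddress.ip_network("169.254.0.0/16"),
)


def is_restricted(ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in RESTRICTED_NETWORKS)


class FlippingResolver:
    """Simulated DNS server: one answer for the first query, another afterwards."""

    def __init__(self, first: str, later: str) -> None:
        self.first = first
        self.later = later
        self.queries = 0

    def __call__(self, name: str) -> str:
        self.queries += 1
        return self.first if self.queries == 1 else self.later


def check_then_connect(host: str, resolve: Callable[[str], str]) -> str:
    """Resolve once for the check and again for the request; return the IP contacted."""
    if is_restricted(resolve(host)):
        raise PermissionError("address not allowed")
    return resolve(host)  # second lookup: the answer may have changed


def check_and_pin(host: str, resolve: Callable[[str], str]) -> str:
    """Resolve once, check that IP, and contact exactly that IP."""
    ip = resolve(host)
    if is_restricted(ip):
        raise PermissionError("address not allowed")
    return ip


contacted = check_then_connect(
    "node.attacker.example", FlippingResolver("203.0.113.10", "169.254.169.254")
)
print("double resolution contacts:", contacted)

pinned = check_and_pin(
    "node.attacker.example", FlippingResolver("203.0.113.10", "169.254.169.254")
)
print("single resolution contacts:", pinned)
```

In this simulation the first flow passes the check and still ends up contacting the restricted address, which is the time-of-check versus time-of-use gap the paragraph describes.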

Applying this to our Kubernetes scenario with a fake node, we must set a domain name served by that custom DNS server as one of the node’s addresses, and it must be the address that the apiserver uses to contact the node.

This scenario led to a vulnerability that was reported to the Kubernetes security committee on April 27, 2020. It was kept under embargo while the various suppliers using Kubernetes were notified, and was finally published on May 27, 2021 as CVE-2020-8562, without a security patch. The Kubernetes security committee has offered a temporary mitigation in issue #101493, as well as other recommendations related to network control.