Google and Microsoft are taking bold steps to improve the security of cryptography in general and TLS/SSL in particular, raising standards in protocols and certificates. In a field as reactive as security, these moves are surprising. Of course, they are not altruistic gestures (they improve the companies’ image in the eyes of potential customers, among other things). But in practice, are these moves useful?

Google: what have they done

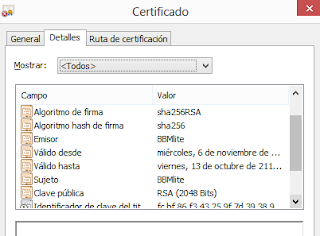

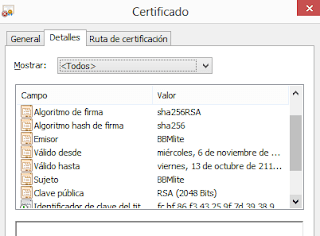

Google announced months ago that it would improve the security of its certificates by using RSA keys of at least 2048 bits. It has finished ahead of schedule. The company wants to remove 1024-bit certificates from the industry before 2014 and issue all of its own with 2048-bit keys from now on. That is quite optimistic, bearing in mind how widely 1024-bit certificates are still used. From the beginning of 2014, Chrome will warn users when a certificate does not meet these requirements. Raising the key length to 2048 bits makes breaking the key by brute force far less practical with current technology.

Besides, as part of this push towards encrypted communications, Google has encrypted search traffic for logged-in users since October 2011. Last September it started doing so for every single search. Google is also trying to establish “certificate pinning” and HSTS to stop rogue intermediate certificates from being accepted while browsing. As if that were not enough, its Certificate Transparency project goes on.
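The idea behind certificate pinning can be sketched in a few lines. This is only an illustration under stated assumptions: it expresses a pin as the Base64-encoded SHA-256 hash of a public key, and the key bytes and the function names `spki_pin` and `chain_matches_pins` are hypothetical placeholders, not Chrome’s actual implementation.

```python
import base64
import hashlib


def spki_pin(spki_der: bytes) -> str:
    """Return a pin: Base64 of the SHA-256 hash of the public key bytes."""
    return base64.b64encode(hashlib.sha256(spki_der).digest()).decode("ascii")


def chain_matches_pins(chain_keys, pinned) -> bool:
    """Accept the chain only if at least one of its keys is pinned."""
    return any(spki_pin(key) in pinned for key in chain_keys)


# Placeholder byte strings standing in for DER-encoded public keys (assumption).
legit_key = b"placeholder-bytes-of-the-real-site-public-key"
rogue_key = b"placeholder-bytes-of-a-rogue-intermediate-key"

# The client ships with the pins of the keys it expects to see.
PINNED = {spki_pin(legit_key)}

print(chain_matches_pins([legit_key], PINNED))  # chain with the expected key
print(chain_matches_pins([rogue_key], PINNED))  # chain signed by someone else
```

With a check like this, a certificate issued by a rogue intermediate authority is rejected even if it chains to a trusted root, because none of its keys match the pins.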

It seems that Google is particularly worried about its users’ security and, specifically (although this may sound funny to many of us), about their privacy. In fact, it asserts that “the deprecation of 1024-bit RSA is an industry-wide effort that we’re happy to support, particularly in light of concerns about overbroad government surveillance and other forms of unwanted intrusion.”

Microsoft: what have they done

In its latest update, Microsoft announced important measures to improve cryptography in Windows. In the first place, it will no longer support RC4, a cipher created in 1987 that is now considered very weak and has been the target of quite a few attacks. Microsoft is introducing tools to disable it on all of its systems and wants to eradicate it from every program soon. In fact, in Windows 8.1 with Internet Explorer 11, the default TLS version is raised to TLS 1.2 (which can use AES-GCM instead of RC4). This protocol also typically uses SHA2.
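As an illustration of the same policy outside Windows, here is a minimal sketch using Python’s standard `ssl` module. It is not Microsoft’s tooling: the helper name `strict_client_context` and the chosen cipher string are assumptions made for the example.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client context that refuses anything below TLS 1.2 and excludes RC4."""
    ctx = ssl.create_default_context()
    # Reject SSL 3.0, TLS 1.0 and TLS 1.1 handshakes.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Prefer AES-GCM suites and explicitly exclude RC4 (OpenSSL cipher syntax).
    ctx.set_ciphers("ECDHE+AESGCM:!RC4")
    return ctx


ctx = strict_client_context()
enabled = [cipher["name"] for cipher in ctx.get_ciphers()]
print(ctx.minimum_version)
print(enabled)
```

The exact list printed depends on the local OpenSSL build, which is why no expected output is shown.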

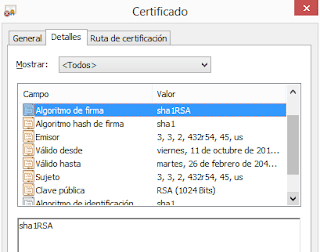

Another change is that Microsoft will no longer allow SHA1 hashing in certificates used for SSL or code signing. SHA1 is an algorithm that produces a 160-bit output, and it is used to hash the certificate when certificates are generated with RSA. The Certificate Authority then signs this hash, which is how it expresses its trust. NIST recommended moving away from SHA1 some time ago, but few paid attention. This looks like quite a proactive move from Microsoft, which had got us used to exasperatingly reactive behavior.
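To make the digest sizes concrete, the following sketch hashes the same data with SHA1 and with SHA256 using Python’s `hashlib`. The byte string `tbs_certificate` is a hypothetical stand-in for the certificate body that a Certificate Authority would hash and then sign; the signing step itself is not shown.

```python
import hashlib

# Placeholder for the DER-encoded certificate body (assumption, not a real one).
tbs_certificate = b"placeholder for the certificate body to be signed"

sha1_digest = hashlib.sha1(tbs_certificate).digest()
sha256_digest = hashlib.sha256(tbs_certificate).digest()


def digest_bits(digest: bytes) -> int:
    """Length of a digest in bits."""
    return len(digest) * 8


print("SHA1  :", digest_bits(sha1_digest), "bits")
print("SHA256:", digest_bits(sha256_digest), "bits")
```

The CA signs only this short digest, so the signature is exactly as trustworthy as the hash function is collision resistant.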

Why all this? Is this useful?

Microsoft and Google are determined to improve cryptography in general and TLS/SSL in particular. Together, the measures adopted by the two companies substantially raise the security of encrypted traffic.

2048-bit certificate using SHA2 (SHA256).

Certificates whose public keys were 512-bit RSA keys were broken in practice in 2011. In 2010, a 768-bit number (232 digits) was factored with a general-purpose algorithm in a distributed effort, the largest known so far. So, in practice, a 1024-bit key is still “safe”, although whether it will become a threat in the near future is open to debate. Google is playing it safe.
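To get a feeling for these key sizes, the sketch below evaluates the usual heuristic running time of the general number field sieve, the general-purpose factoring algorithm used for records of this kind. The formula ignores its o(1) term, so the absolute numbers are not meaningful; only the comparison between sizes is. The function name `gnfs_work_bits` is an assumption made for the example.

```python
import math


def gnfs_work_bits(modulus_bits: int) -> float:
    """Heuristic GNFS cost, as log2 of the operation count, for an n-bit modulus.

    Uses exp((64/9)^(1/3) * (ln n)^(1/3) * (ln ln n)^(2/3)) without the o(1) term.
    """
    ln_n = modulus_bits * math.log(2)
    exponent = (64 / 9) ** (1 / 3) * ln_n ** (1 / 3) * math.log(ln_n) ** (2 / 3)
    return exponent / math.log(2)


for bits in (512, 768, 1024, 2048):
    print(f"{bits}-bit modulus: roughly 2^{gnfs_work_bits(bits):.0f} operations")
```

Under this estimate, going from 1024 to 2048 bits adds roughly 30 bits of work, that is, on the order of a billion times more effort.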

But there are other problems to focus on. Stronger certificates in SSL do not address the main obstacle for users. In fact, introducing new warnings (Chrome will warn about 1024-bit certificates) may just confuse users even more: “What does using 1024 bits mean? Is it safe or not? Is this the right place? What decision should I take?” Too many warnings just weaken security (“which is the right warning when I am warned about both safe and unsafe sites?”). The problem with SSL is that it is socially broken and poorly understood; the issue lies not in the technology but in its users. Users will be happy that their browser of choice uses stronger cryptography (so the NSA can’t spy on them…), but that will be useless if, confused, they accept an invalid certificate while browsing, unaware that they are letting in a man-in-the-middle.

If we accept the theory that the NSA can break into communications because it already has adequate technology to brute-force 1024-bit certificates, this is very useful. There would be a problem if there were no need to break or brute-force anything at all, because the companies were already cooperating to hand the NSA plain-text traffic… And we can rule out that the NSA already has its own advanced systems ready to break 2048-bit keys, and that this is why it “allows” their standardization… can’t we? We only have to look back a few years to remember conspiracy tales like these in the world of SSL.

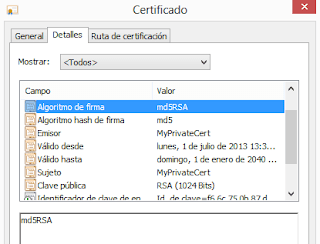

Self-signed certificate created in Windows 8, using MD5 and 1024 bits.

The case of Microsoft is funny, too. Obviously, this move on certificates is motivated by TheFlame. Using MD5 with RSA backfired, allowing the attackers to sign code in Microsoft’s name. It can’t happen again. This puts Microsoft at the forefront of deprecating SHA1 for certificates, and the industry will follow. But while RC4 really is broken, SHA1’s health is not that bad. We have only just started getting rid of MD5 in some certificates, and Microsoft is already declaring the death of SHA1. This leaves us with SHA2 as the only option (normally sha256RSA or sha256withRSAEncryption in certificates, although SHA2 allows outputs from 224 to 512 bits). It is the right moment, because XP, which did not even support SHA2 natively until Service Pack 3, is dying. There is still a lot of work to be done, because SHA1 is very widespread (Windows 7 signs most of its binaries with SHA1; Windows 8 uses SHA2). That is why the deadline is 2016 for code-signing certificates and 2017 for SSL certificates. How Certification Authorities will react… is still unknown.

On the other hand, regarding the mandatory use of TLS 1.2 (related in a way, because it is the protocol that supports SHA2), we need to review the recent attacks against SSL to understand what it is really trying to solve. Very briefly:

- BEAST, in 2011: The problem lay in CBC mode, and switching to RC4 was the usual workaround. It was really solved with TLS 1.1 and 1.2, but both sides (server and browser) have to support these versions.

- CRIME: This attack allows cookies to be retrieved if TLS compression is used. Disabling TLS compression solves the problem.

- BREACH: It also allows cookies to be retrieved, but it is based on HTTP compression, not TLS compression, so it cannot be “disabled” from the browser. One is vulnerable whatever TLS version is being used.

- Lucky 13: Solved mainly through software patches, and in TLS 1.2.

- TIME: An evolution of CRIME. It does not require the attacker to be in the middle, just JavaScript. It is a problem in browsers, not in TLS itself.
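The compression side channel behind CRIME and BREACH can be illustrated with a simplified sketch. It assumes a made-up cookie value and a hypothetical request layout, and it compares one fully correct guess against a wrong one, whereas the real attacks recover the secret piece by piece.

```python
import zlib

# Made-up secret the attacker wants to learn (assumption for the demo).
SECRET_COOKIE = "Cookie: session=7f3a9c2b51d8e604"


def compressed_len(attacker_data: str) -> int:
    """Size of a compressed request that mixes attacker data with the secret."""
    request = (
        f"GET /?q={attacker_data} HTTP/1.1\r\n"
        "Host: example.com\r\n"
        f"{SECRET_COOKIE}\r\n\r\n"
    )
    return len(zlib.compress(request.encode("ascii")))


# The attacker can only observe lengths, never the plaintext itself.
right = compressed_len("session=7f3a9c2b51d8e604")
wrong = compressed_len("session=k0zq8w1mxv5ty2ju")

print("correct guess:", right, "bytes")
print("wrong guess  :", wrong, "bytes")
```

When the injected guess repeats the secret, the compressor replaces the repetition with a short back-reference, so the correct guess produces a smaller message. Encryption hides the content but not this length difference.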

A still very common certificate, using SHA1withRSAEncryption and a 1024-bit key.

We are not aware of these attacks being used in the wild. Imposing TLS 1.2 without RC4 is a necessary move, but still a risky one. Internet Explorer up to version 10 supports TLS 1.2, but it is disabled by default (only Safari enables it by default, and the other browsers have only just started to implement it). Version 11 will enable it by default. Servers have to support TLS 1.2 too, so we do not know how they will react.

To summarize, it looks like these measures will bring technical security (at least in the long term). Even if there are self-interests to satisfy (avoiding problems they have already had) and an image to improve (leading the “cryptographic race”), any enhancement is welcome, and this “war” to lead in cryptography (which fundamentally means being more proactive than your competitors) will raise the bar.

Sergio de los Santos